CTS 2333 (Unix/Linux

Networking) Lecture Notes

By Wayne Pollock

Lecture 1 — Course, Career

Overview

Welcome! Introduce course.

Discuss SA job titles (show booklet), salary (starting w/BS

degree: ~$60k), politics (soft skills). Discuss HCC major

(program codes), certificates, and degrees.

Discuss Project 1 (mention no LVM),

install Linux. Pass out Fedora DVDs (CDs available from DistroWatch.com). Discuss system

journal — Use wiki.

Have students use wiki to pick host

names and user IDs (need to be unique in class). Mention post install

tasks (on web).

Networking Career guidance

We call you a networking

professional but you may not be treated like a professional. Other titles

include network engineer and network administrator. There are

various levels: apprentice/novice (work under close supervision

on limited tasks including help desk), journeyman (not usually

called that), do a lot of diverse activities under loose directions from senior,

who will often plan, design, make policy, and help troubleshoot the really

tough ones. In a smaller company even inexperienced network professionals may

do many different tasks, including installing, configuration, monitoring, and

troubleshooting servers and workstations for many different platforms. You

will need to understand design issues (say to place a new server) and protocols

(to configure and troubleshoot), and to understand your users.

There are specializations

available: WAN, LAN, routing and switching, security (hot field right now),

voice/data networks, remote access and mobile computing, and in-depth knowledge

of specific vendor networking products from Microsoft, Novel, Unix/Linux,

Cisco, and others.

Never look in a newspaper

for ads, look on-line: HotJobs.com, Monster.com, Dice.com, ComputerJobs.com,

BrainBuzz.com, FloridaJobLink.com, ITcareers.com, ...

Soft Skills (See Soft Skills web resource)

Customer relations (listen,

respond positively by guiding them away from bad ideas. Must have good oral

and written skills. Must be dependable, able to work well in a team.

Leadership is important for non-entry level positions.

Understand business/management

procedures, politics, and concerns. (ITIL).

Get networked: join a

professional society or two: Network Professional Association www.npa.org, ACM

acm.org, IEEE/CS computer.org, etc. Go to meetings (make contacts or network).

(Cheap student chapters, many benefits.)

Get certified:

Unix/Linux (LPI, Novell, Sun, ...), Cisco, CompTIA, and MS.

Basic Network Utilities to Know

There are many command line

tools that are commonly used to access resources across a network. Additional

configuration and troubleshooting tools are discussed later. A few you should

know about include:

ftp File Transfer

Protocol/Program. One of the earliest tools, it has a strange protocol

(requires a call-back on a different port from the server) and has many

security problems. Only anonymous FTP should be used anymore (username is

anonymous, password is a valid email address).

tftp Trivial FTP. The

only common use it to load configuration data into switches, routers, and other

network devices. Modern ones should support a more secure protocol.

telnet (also rlogin and rsh) Old, insecure protocol for remote login sessions.

(Use control-] to suspend session and bring up telnet>

prompt.)

ssh (Secure SHell)

Used to create a secure tunnel over which remote login sessions can occur.

However the tunnel can be used for other protocols such as scp and sftp, which

are used today instead of ftp. (FTPS is also used, but that doesn’t use ssh.)

wget (and curl) A zillion options, can

fetch any file for any FTP or HTTP[S] URL. Using wget -S --spider URL won’t fetch the document but shows the response

headers, useful for troubleshooting. curl

supports more protocols include LDAP, Telnet, etc.

nc (Called netcat on some systems.) Use like

cat only across a network.

links A (console) mode web

browser. Renders HTML pages as well as possible using only text. Can be used

to extract text from web pages.

Lecture

2 — Networking Standards and Standards Organizations

Networking

Standards

There are thousands of standards

relating to networking! These are far too many to publish in any one reference

book, and in any case the standards are changing and growing all the time. So

you must locate the standards when you have networking questions! These are

generally available on-line but some organizations sell copies of standards,

for up to thousands of dollars a copy. (PDFs of these can often be purchased

for around $20.)

A system admin must be aware

of where to locate networking information, which organizations are responsible

for networking standards and services, what legal and administrative

requirements must be met by your network and servers.

RFCs

There are thousands of Requests

for Comments. Despite the name, these are the official Internet

standards. Not all RFCs pertain to Internet protocols and not all networking

standards are published as RFCs (IEEE, ISO, ITU, W3C, and proprietary standards

generally are not). However most of what a SA or NA (Network Administrator)

needs to know is published as RFCs.

The Requests for Comments (RFC)

document series is a set of technical and organizational notes about the

Internet (originally the ARPANET), beginning in 1969. Memos in the RFC series

discuss many aspects of computer networking, including protocols, procedures,

programs, and concepts, as well as meeting notes, opinions, and sometimes

humor. RFCs are generally ASCII text documents. The most recent ones can be

found at tools.ietf.org/html.

You can use a nicer search form at www.rfc-editor.org/rfcsearch.html.

The official specification documents of

the Internet Protocol suite that are defined by the Internet Engineering Task

Force (IETF) and the Internet Engineering Steering Group (IESG ) are recorded

and published as standards track RFCs. As a result, the RFC

publication process plays an important role in the Internet standards process.

RFCs must first be published as Internet

Drafts. RFC 2026 describes The Internet Standards Process.

The RFC Editor is the

publisher of the RFCs and is responsible for the final editorial review of the

documents. The RFC Editor also maintains a master file of RFCs called the “RFC

Index”, which can be searched online. For nearly 30 years, the RFC

Editor was the legendary Jon Postel; today the RFC Editor is a small

group funded by the Internet Society.

Text file format (must use CRLF): See rfc-editor.org/EOLstory.txt.

Each RFC has a category

or status designation. The possible categories are:

·

PROPOSED STANDARD, DRAFT STANDARD, STANDARD

These are standards-track documents, official specifications of the

Internet protocol suite defined by the Internet Engineering Task Force (IETF)

and its steering group the IESG.

An RFC starts life as an Internet Draft. The Internet Draft is proposed

to the IETF, whereupon voting and modification occurs until it either becomes

obsolete due to lack of interest or is accepted by the IESG, whereupon it is

assigned an RFC number and published as a proposed standard RFC. If it

doesn’t become obsolete the RFC becomes a draft standard and eventually an

Internet standard, and is assigned a STD number (in addition to its RFC

number). The process by which an RFC is produced is described in detail in RFC

2026.

·

BEST CURRENT PRACTICE

These are official guidelines and recommendations, but not standards, from the

IETF. These RFCs are also assigned a BCP number.

·

INFORMATIONAL, EXPERIMENTAL

These non-standards documents may originate in the IETF or may be

independent submissions (e.g., Cisco, Novell, Microsoft, etc., may submit these

RFCs).

·

HISTORIC

These are former standards that have been actively deprecated.

Each RFC is numbered. For instance,

RFC 791 documents the IP protocol. Although most RFCs are generally are quite

readable, others are nearly impossible to read (written in “standardese”).

RFCs never change or get

updated (except for minor typo corrections). This avoids any hassle with

incompatible versions of standards. When necessary a new RFC is created that obsoletes

the original one. However, the RFC Editor does maintain errata for RFCs, and

RFCs obsoleted by a newer RFC sometimes list the new RFC number at the top (not

always). (Show RFC-822->2822->5322.)

STD and BCP numbers

also never change. However, when a STD or BCP is updated, it simply refers to

a newer RFC (or set of RFCs). For example, STD1 is currently defined by

RFC5000 since 2008. Before that, STD1 was RFC3700 (since 2004), and before

that, RFC3600, and so on. (I think the first version of STD 1 was defined by

RFC1038, in 1988.)

Further information on the RFC

documents and the IETF, the body that produces them, can be had at www.rfc-editor.org (demo),

the home of the RFC Editor. RFCs can be found in several other repositories

(mirrors), including www.ietf.org/rfc/rfc#.txt

, rfc.net , and

elsewhere. Some of these have converted the text documents to HTML.

Standards

Bodies and Organizations

An alphabet soup of groups oversee the

Internet: IANA, ICANN, IETF, IAB, ISOC, IESG, and the W3C. The Internet

Society (ISOC) charters the Internet Architecture Board

(IAB), the Internet Engineering Task Force (IETF), the Internet

Engineering Steering Group (IESG), and the Internet Assigned

Numbers Authority (IANA). Other groups also are responsible for

networking standards: ISO, IEEE, ITU, OASIS, and no doubt others I’ve

forgotten!

The IANA is the clearinghouse

and distribution point for network parameters (such as IP addresses, protocol

and port numbers, AS numbers, and TLDs). The actual definition of these is up

to the IETF, organized into a number of different working groups. These groups

are under the oversight of the IESG. The IESG in turn is under the oversight

of the IAB, which also adjudicates any disputes between these groups.

The IAB is chartered both as a

committee of the Internet Engineering Task Force (IETF) and as an advisory body

of the Internet Society (ISOC). Its responsibilities include architectural

oversight of IETF activities, Internet Standards Process oversight and appeal,

and the appointment of the RFC Editor.

The IAB was also responsible for the

management of the IETF protocol parameter registries. Previously, there was no

commercial involvement and all management decisions were up to the

IETF/IAB/ISOC. With the growth and worldwide acceptance of the importance of

the Internet, many countries and companies felt that the non-profit management

of the Internet by the ISOC, which had no legal standing by any country, was no

longer appropriate.

The IETF used a single company, Network

Solutions, Inc. as the sole registrar for DNS domain names. Many

companies wanted to make a profit from selling DNS services and Network

Solutions had a monopoly. Additionally most DNS root servers (like

Network Solutions) are operated by U.S. corporations, giving the U.S.

government a lot of say about what TLDs would be allowed.

Parts of Internet management (domain

name services) have become commercialized. To oversee the commercial DNS

service providers (“DNS registrars”), a number of countries then

built the Internet Corporation For Assigned Names and Numbers

(ICANN), which “usurps” much (but not all) of the management tasks from the

IETF.

The ICANN accredits companies and

organizations for domain name registration. They appoint an authoritative

registrar for each TLD. This registrar maintains the database for that

domain, including tracking which registrar has registered which domain names.

For example, VeriSign (which bought out Network Solutions) is the authoritative

registrar for .com and many

other TLDs. (See www.internic.net/regist.html

or ICANN’s website to find a list of DNS registrars for some TLD such as “.com”, and then visit those organizations’

websites for info.) This database is called the whois database.

It is distributed; each registrar maintains the records for the domains it has

registered.

ICANN has its own committees, or as

they call them, support organizations (or SOs). There is the address

SO, aso.icann.org,

and the generic and country code TLD SOs, gnso.icann.org and ccnso.icann.org.

These SOs advise ICANN much like the working groups supported the IETF.

Currently, ICANN still uses the IANA to

manage all the assignments.

ICANN has no oversight from the IAB or

IESG, they only respond to the member countries (which never agree on anything,

so essentially ICANN has no oversight). However, the ISOC/IETF does get

to appoint two members to the ICANN board. This chaos, along with a lack of

coordination with the ISOC, has so far resulted in ICANN being largely

ineffectual, and a breakdown of Internet regulation.

The newness of ICANN

caused it to have an agreement (the “JPA”) with the U.S. Gov. to oversee ICANN

for its first few years. ICANN’s memorandum of understanding with the U.S.

Department of Commerce ended in 2006 but was at that time renewed, and ended in

Sept. 2009. The agreement gave the U.S. full control and veto over ICANN

rules. The JPA wasn’t renewed when it expired. However, the U.S. maintains a

permanent seat on the ICANN’s accountability panel.

Even after the JPA

expired, ICANN still has a separate contract to have the U.S. operate the IANA

(Internet Assigned Numbers Authority). So they would not be completely

free of U.S. oversight. This agreement expires in 2011, unless renewed.

Note the JPA expired

40 years to the day (10/1/1969) when the second computer was connected to

ARPAnet, the first network.

For example, today nearly any registrar

can be accredited to manage any TLD, and the rules for who can get a domain

name in various TLDs are largely ignored. ICANN has authorized many new TLDs,

but a (small) number of registrars have ignored them and created any TLD a

customer wishes. Since some DNS servers recognize only some TLDs, this causes

big problems for everyone.

Note that ICANN still uses the IANA

as a clearinghouse and distribution point for the TLDs. The IANA still

maintains the list of root servers.

Originally of course, all the DNS root

servers were operated by the U.S. government; IANA doesn’t set policy. After

ARPA and NSF stopped funding the Internet, in 1997 the NTIA (National

Telecommunications and Information Administration), an agency of the U.S.

Dept. of Commerce, took over. They paid for and managed the DNS root servers,

keeping control of the passwords needed to update that, and were the only body

that officially could instruct the IANA to add new (or remove) TLDs.

Even after ICANN was formed, the NTIA

retained control. In 2005, the U.S. announced they would not relinquish

control of the DNS root servers to the international community (probably some offshoot

organization of ICANN).

But in 3/2014, the U.S. DoC announced

it would turn over control to ICANN. (This might be a reaction to the Snowden

revealations.) Thereafter, ICANN would have the final say about DNS policy and

instruct the IANA. The DoC actually announced they want a new group formed

for oversite of ICANN first.

The U.S. announced it will transfer

governance of the Internet domain name system (DNS) to a multi-stakeholder

entity on Oct. 1, 2016.

National

Telecommunications and Information Administration (NTIA) administrator Lawrence

E. Strickling on Tuesday said his agency notified the Internet Corporation for

Assigned Names and Numbers (ICANN) that “barring any significant impediment,”

NTIA plans to permit its Internet Assigned Numbers Authority (IANA) contract

with ICANN to expire as scheduled.

ICANN announced in August 2016 that the

nonprofit public benefit corporation Public Technical Identifiers had

been incorporated in California to run IANA’s functions after the transition

was complete, which includes responsibility for the coordination of the DNS

root, Internet Protocol (IP) addressing, and other IP resources.

Actually, this is an

over-simplification of the real history. On Sept. 2 1969, SRI (Stanford Research Institute,

in Menlo Park, CA., and now an independent research institute, SRI Intl.)

attached the second computer to ARPAnet. UCLA was the first node. The

switches were built to ARPA’s specs by BBN. Charley Kline sent the first two

characters, “LO”, trying to type “LOGIN”. But the SRI computer crashed at the

“G”. Later it was claimed he was trying to send “Lo and Behold!”; the restored

lab at Boelter Hall UCLA, now a museum, has that phrase spelled in ASCII, in a

tile mosaic.

SRI was in charge of

coordinating the users and researches using ARPAnet, and they established the Network

Information Center, or SRI-NIC for that purpose. When ARPAnet was renamed

to the DDN (defense department network), SRI-NIC became known as

DDN-NIC. This NIC kept all the documents (now called RFCs) as well as who was

assigned which network numbers (a primitive version of the WHOIS database,

originally kept on 3x5 inch index cards).

The NIC also managed

domain names. Owners of new hosts sent email to HOSTSMASTER@SRI-NIC.ARPA

to request an address. A file named HOSTS.TXT

was distributed by the NIC and manually installed on each host on the network

to provide a mapping between these names and their corresponding network

address. Once the DNS system was created, DDN-NIC also performed DNS root

nameserver administration.

In 1990, the IAB

proposed changes to the NIC/IANA arrangement. The Defense Information Systems

Agency (DISA) awarded the administration and maintenance of DDN-NIC, which had

been managed by SRI since 1972, to Government Systems, Inc. They in turn subcontracted

it to the small private-sector firm Network Solutions, Inc.

Two years after that,

the DoD decided not to continue funding the Internet, except for the management

of the .mil TLD. In 1993, the NSF

took over funding the Internet, and created the Internet Network Information

Center (InterNIC) to take over for DDN-NIC. They originally awarded the

contract to three organizations, but after a couple of years, Network Solutions

took over all the duties of running the InterNIC.

So officially, SRI

managed the Internet from its inception until the early-1990s. In 1998, both

IANA and InterNIC were placed under the control of ICANN, which then then privatized

the DNS system.

The U.S. kept a

controlling share of ICANN votes, and a veto, for the next ten years. ICANN

then began selling TLDs (names in the .xxx

TLD went on-sale in 2012) and registration services to almost any company with

sufficient cash. The DNS system may never recover.

The IEEE is the organization

responsible for popular Layer 2 standards: Ethernet, Wi-Fi, Li-Fi, etc. They

control MAC addressing.

The ITU is the descendant of

CCITT and controls telephony standards and radio frequency allocations, amongst

other tasks. The ITU is charted by the United Nations to coordinate global telecom

networks and services.

The ISO is responsible for a

variety of networking related standards, including some Layer 1 (physical

layer) standards, country codes (the two and three letter abbreviations used in

TLDs), etc.

The ISO is most famous for their OSI

seven layer model of networking. Note however the Internet is based on the

US-DoD four layer model of networking. (This is based on the original Arpanet

Reference Model (ARM), and some count the physical layer as a fifth layer.)

ISO is the International

Organization for Standardization. Because it would have different

acronyms in different languages (“IOS” in English, “OIN” in French for Organisation

Internationale de Normalisation), its founders decided to give it also a

short, all-purpose name. They chose “ISO”, derived from the Greek isos,

meaning “equal”. Whatever the country, whatever the language, the short form

of the organization’s name is always ISO.

The IANA is responsible for

protocol numbers, port numbers, and AS (Autonomous System, a.k.a. routing

domains) numbers for the IETF), maintaining the DNS root servers “hints”

file, and maintaining the official TLD DNS lists (for ICANN). You can find

anything they maintain from iana.org/protocols, such as IP

address assignments and a lot of reference information.

The World-Wide Web Consortium (W3C)

maintains many standards and protocols relating to Internet use. Examples

include HTML, CSS, SOAP, P3P, and many others.

OASIS (www.oasis-open.org) mainly deals with

e-business/e-commerce standards, such as UDDI (an XML Web services directory

lookup protocol), DocBook, OpenDocument (document standards), and WSDM-MUWS

(another web services protocol). Due to their restrictive licensing policies

some people don’t consider OASIS standards to be “open” and avoid using them.

Finally note that the presence of a

standard doesn’t mean everyone will use it! The advantage to using “open”

standards (where the specifications are widely available, and there is no

licensing fees or other restrictions on use) made the Internet possible.

SI Prefixes [Also discussed in Admin I]

All IT professionals should

know standard international (SI) units and prefixes. See wikipedia.org/wiki/International_System_of_Units

and physics.nist.gov/cuu/Units/prefixes.html.

kilo (1000 or 1024), mega, and giga.

For wavelengths and time you also should know: milli

(thousandth), micro (millionth), nano (billionth),

and pico (trillionth). Examples: KHz for kilohertz, msec or ms

for milliseconds, us or μs (Greek let mu + ‘s’) for microseconds, mb =

megabits, mbs = megabits per second. (Usually ‘b’ indicates bits and ‘B’

indicates bytes, but not always.) Technically SI units are multiples of 1,000

only. Rarely used are prefixes kibi, mibi, and gibi, for

multiples of 1,024.

France refuses to use

“bytes” but uses “octets”, so when we use kB/KB, mB/MB, and gB/GB they use

kO/KO, mO/MO, and gO/GO. (And sometimes kO, mO, and Go as well.)

Lecture

3 — Networking Concepts (part I)

What is a

network? Computers (a.k.a. hosts) able to share information and

resources. Physically this can be done via a cable (discuss crossover)

between the serial ports of two computers. (A computer attached to a network

is called a host. Any device attached to a network (a host, router,

switch, printer, ...) is called a node.

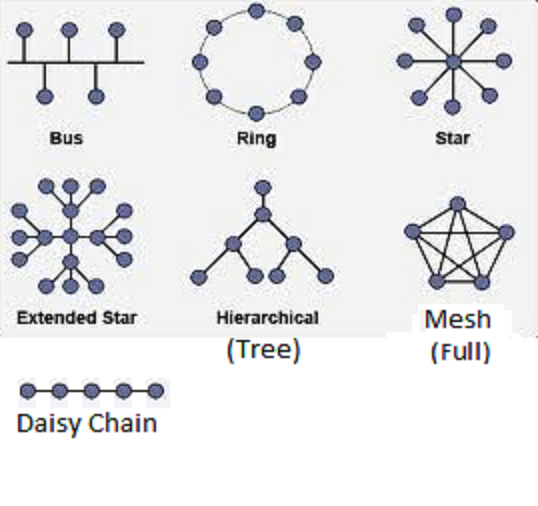

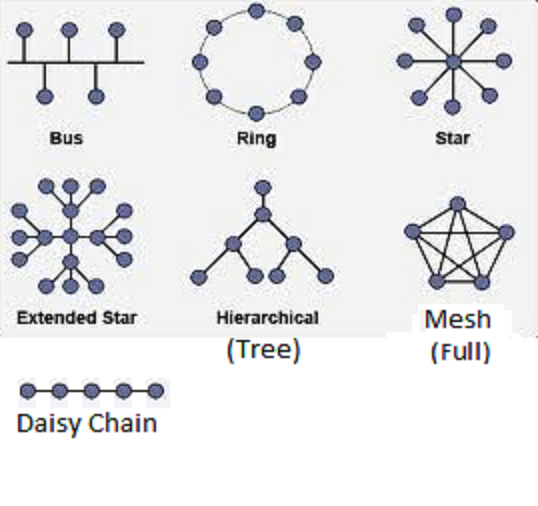

Qu: What is needed physically? Media

such as a cable between each pair of computers (called a mesh), or in a ring,

or in a bus, or in a star. What if you want to share with many

hosts (DTEC 4th floor has ~400 computers)? Connecting every computer to every

other with a cable takes a lot of serial ports and cables! (Known as a mesh

network.) More common approach is to have a single cable (a.k.a. trunk)

or hub (or switch) that all computers on the network share (known

as a bus network). Then each computer needs a unique address so

when one computer sends data to another, the intended recipient knows the data

was meant for it. The different network topologies are: mesh, partial mesh,

bus, star, ring.

Computer cables can be tricky to work with. They don’t work if

too long, and they work poorly if kinked, if not terminated correctly, or if

improperly grounded. When attaching a connector to the end of a cable, if you

straighten out 0.5in at the end more than you should, the 100Mb/sec cable will

only support about 30Mb/sec! Fiber optic cables can be trickier to work with.

Besides being more delicate then commonly supposed, standard

cables have safety issues. Such cables are clad in PVC, a strong, durable,

flexible, and cheap insulator. But in a fire the cables can get hot, and then

they give off deadly chlorine gas! In air spaces where people might be, you

need to use more expensive (but safer) plenum cable. There are

various building and safety codes to consider as well.

Fiber optic cables also have some safety issues: small glass

shards can get into your skin or eyes, and the invisible light coming from the

end of a fiber can permanently damage your eye in an instant.

In the end, you should consider using a licensed cable installer

(or get trained yourself).

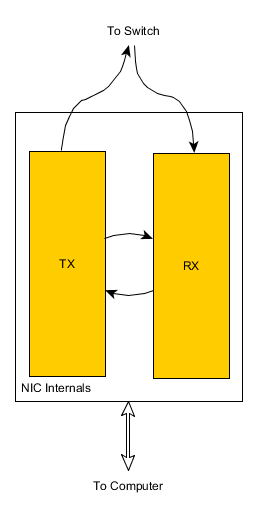

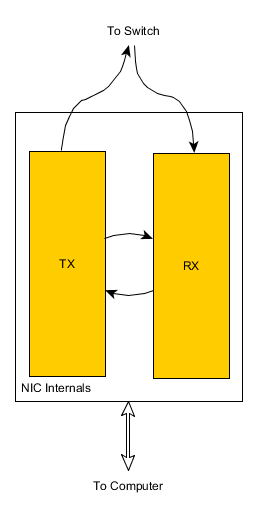

In addition to media, you need some

hardware to connect the host to the media. This is called a NIC

(Network Interface Card). In Linux these have names such as “eth#”, where “#”=0,1,2,..., or

they are named for the bus and position (e.g., “p7p1”).

In Solaris, they have strange names, such as “elx#”

depending on the manufacturer/chipset of the NIC, but many modern NICs are

simply known as “hme#”.

Your operating system needs the correct

driver software to allow applications to send messages to the NIC. Then some

application can send a message to another host by invoking the proper API

function. The data will be passed to the NIC and then sent on its way.

Addressing

Qu: (point to network diagram) If this

host wants to send a message to that host, how does it do that? Ans:

each computer needs a unique address so when one computer sends

data to another, the intended recipient knows the data was meant for it. The

other computers on the network are supposed to ignore the message. In the

early days the administrator you manually set each NIC with a unique number

between 1 and 255. Today NICs come configured with an address already, known

as the MAC Address (and BIA, data-link address, ...).

Protocols

A cable alone is not sufficient to

permit computers to share resources. Each node must know there is another on

the other end of the cable. Each must be able to talk to the other. What

happens if both send at same time? (Ans: collision.) What should

happen if neither host sends anything for a long time? Agreed upon protocols

(rules of communication) and standards (examples: type of cable, what

each wire will be used for, how many volts, ...) are needed to communicate.

Some software must be listing to the

port that implements the agreed protocols. (A set of protocols that work

together is referred to as a protocol stack.) Example: file

transfer.

A network then is two or more

computers physically connected and communicating using agreed upon protocols. Ethernet

and TCP/IP are very commonly used sets of protocols. Other

protocols: (LAN) IPX/SPX, SMB, (WAN) SLIP, HDLC, PPP, UUCP. A network may

be small (one geographical location) or may cover the globe. Different

technologies are used in each case. For a local area network (LAN)

Ethernet is almost always used, which broadcasts the transmitted

data so every station sees them. (Wireless technology, often called Wi-Fi,

is also used for LANs.)

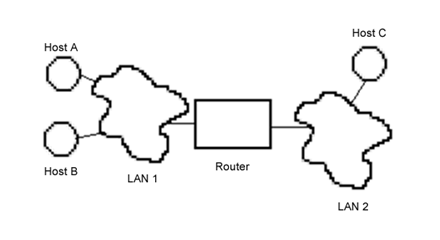

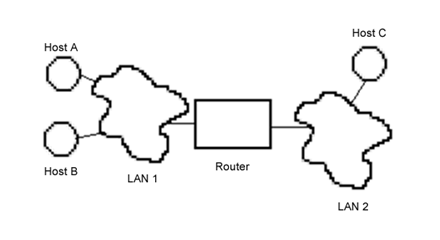

What if computers are far apart? LAN

technology won’t work! WANs (wide area networks) often use PPP

protocols. WANs usually connect LANs together, not individual computers,

through slower, error-prone serial links (point-to-point). (Draw

picture of packet hopping from LAN to LAN.) The different LANs composing a WAN

may use different protocols and technology. The LANs are often connected using

networking hardware called routers. Communications within a single

network are known as intranet, and between networks as internet.

“The” Internet is thus a WAN, connecting many company and

organization’s LANs together.

The WAN connecting

different sites is often leased from an ISP or telco. HCC for example uses

Level 3 Communications to connect the different campus (and the Lakeland backup

facility) to the data center in Ybor.

For host A to send data to host B,

host A must build a packet (a chunk of data) containing the data

plus a packet header which contains the destination address and

other information (e.g., packet length, type of data, ...). To transmit data

from one host/node to another, the application invokes API function, passing it

the address of the recipient and the data to send. API function builds the packet

from this info and sends the packet to the NIC. The NIC sends out the packet

onto the media, one bit at a time, according to the network protocol.

If the data is very large, the API

function will split it into several packets, which get reassembled at the

destination. (Example: FTP a large file; Ethernet max packet size is 1522

bytes including the header.)

Mostly, NICs will examine only

enough of the packet to see if it was intended for them or not. If not

they stop looking at it. The destination NIC will read in the whole packet,

compute a checksum, and compare it with the checksum at the end of the packet.

If they don’t match, the packet is corrupted and must be sent again.

The various protocols and their

associated configuration (along with other networking hardware such as routers

and switches) will be discussed in more detail later in this course. As a

system administrator, you will need to know the choices for networking

available to you, and how to configure your systems for various network design

choices. You will need to be able to build and configure simple, standard

networking, and to be able to communicate effectively with a network administrator.

Commonly

Provided Network Services

File/Application

- allows files and disk space to be shared.

Database –

server based database storage, retrieval, format, and security (LDAP)

Print - share

printers across a network. (IPP)

VOIP - Not

all networks carry TCP/IP data only. Voice and other data networks are also

common.

Remote Access

- (communications service) Allows remote users to communicate

with network hosts (typically just the servers), or enables communications from

the hosts on the network to remote hosts and networks (this is sometimes

considered an Internet service). Often this is done with a modem over an

ordinary phone line (or cable TV line). Microsoft uses the term RAS for

Remote Access Server, Novell uses NAS for Network Access Server.

Remote users must identify

themselves to the network before access is granted; this is known as logon

or logging in.

Commonly used remote access

client software (such as HyperTerm) uses protocols such as PPP, SSH, telnet,

and many others. (VPN, rsh: remote execution, PC Anywhere, Remote

Authentication Dial-In User Service (RADIUS), Terminal Access Controller,

Access Control System (TACACS+).)

Mail - Allows

the storage and transfer (forwarding) of email. Two popular mail

servers are Microsoft Exchange mail server, and Unix sendmail server.

Internet -

Allows your organization to use (or provide) some services from (to) servers

(users) on another network, connected by the Internet. Examples include file

transfer (FTP), world wide web (WWW or simple the web, HTTP), addressing

schemes and services, remote access to hosts on other networks, name services

(DNS), and more.

Naming - A

naming service provides a way to lookup addresses (and other data) using an

easy to remember name. The most common naming service is DNS.

Security -

firewalls, authentication, encryption, etc.

Management - This

includes many services such as network traffic monitoring (sniffing/snooping)

and control, load balancing, hardware monitoring (and automatic fault alerting,

e.g., signal a pager), fault isolation and diagnosis, asset management (i.e.,

hardware and software inventories), software license tracking, security audits

(and accounting), software updates (e.g., distribution of new software,

patches, configuration, and deletion of old software), address management

(DHCP), and backup and restore across a network.

Categorizing

Networks by type, size, ...

Networks can be characterized in many

ways, such as by size: Networks can be small (LANs) or

cover large distances (WANs). (MANs, CANs, PANs, and

IPNs: Metropolitan, Campus, Personal, and InterplaNETary.)

Networks may be either circuit

switched or packet switched.

Circuit switching

is how the old analog phone system

worked (start at 2-minute mark): As you dialed a digit of a phone

number, the local exchange had a rotary switch that would rotate around that

many contacts, then make an electrical connection. The next digit went to the

next rotary switch, and so on, until an electical circuit was established

between your phone and their phone.

Until you hang up the

phone, the wires involved in your circuit are used exclusively for that call.

There are only so many wires stretched between cities, and on Monday mornings, the

phone company can run out!

Packet switching

works without reserving any of the wires (so no circuit). The data to be sent

is digitized (if it wasn’t digital already) and split into small chunks called packets.

A “header” is added to the packet that contains, among other things, the

destination address for the packet. Each packet is sent out a NIC

independently, and travel over each wire between the sender and the destination

one hop at a time. The wire is not reserved for the duration of the session,

so it can be shared between many users.

Today most networks are packet

switched. These in turn are classified as either connection-oriented

or datagram (connectionless). (Web, FTP vs. DNS, NTP)

Packet switched networks can simulate circuits. This is known as virtual

circuit. (ATM networks use this.)

Networks can be connected in a variety

of ways. This is known as the physical topology of the network.

For example connecting all the computers in a LAN in a ring so that every host

connects with two other hosts, is called a token ring network

(Ex: ATM). Such rings can be connected together with special gateway

(connects two or more networks) hosts that are connected to two or more rings.

Other topologies include star, cell, and bus.

Another way to classify nets is peer-to-peer

vs. client-server.

Initially, most companies made

proprietary networking equipment that used proprietary protocols. Cisco became

huge by selling multi-protocol routers, ending vendor lock-in (except you were

then locked-into Cisco). Today Cisco remains the dominant networking

equipment supplier in the world. But there are others, such as Juniper and Garrettcom

(makes rugged network equipment for industrial settings).

Internet

Brief History [From: “BGP” by Iljitsch van Beijnum, (C)2002 O’Reilly]

The Internet started life as ARPANET

in 1969, funded by the US DoD. This was based in large part on revolutionary

work by Paul Baran at RAND corp., in 1960. His work included packet switching

and adaptive routing, amongst other ideas. Cold war fears required a robust

network that could work even if parts were knocked out. However, no such

network was built until years later.

ARPA (now called DARPA) needed a better

way to allow researchers to share results (and computing resources). It was

thought that researchers would use access to powerful computer centers to use

their special facilities, but the most common use was personal communications

(chatting and email, Netnews/Usenet), to share research.

The ARPANET initially connected just

four “nodes”, located at UCLA, the Stanford Research Institute, UC Santa

Barbara, and the University of Utah. By the end of 1971, there were fifteen

nodes. This network was primarily designed by Vinton “Vint” Cerf and Bob Kahn,

based on the earlier work of Paul Baran.

Initially these centers were reluctant

to connect to a network, but the ARPAnet design used separate mini-computers to

off-load all network functions. These were called IMPs (Interface

Message Processors), built by the engineering firm Bolt Beranek

and Newman (BBN). A site only needed a direct connection to the local

IMP. IMPs used the network control protocol (NCP) to communicate.

Later NCP was split into IP and TCP (and UDP).

Once ARPAnet was deemed a success,

other sites wanted connections. However, only a few sites were ever connected

directly to ARPAnet, the original Internet (the backbone of the

Internet).

To accommodate the others, NSFNET

was created and maintained by the NSF from the late 1980s until 1995. NSFNET

connected five supercomputer locations around the U.S. Note no commercial use

of the Internet was allowed on either network. (No ads!)

Internet

Exchanges

At that point, there were two separate

networks. To have traffic (data) to pass from one network to another requires

an Internet Exchange Point (IX or EP are common acronyms). The first

were built by the DoD; two 10-Mbps Ethernet switches, FIX-East and FIX-West.

Each IX connects traffic between cooperating networks. Other networks were

created too (e.g., Bitnet), and other IXes.

At an Internet

Exchange, the networks of Internet Service Providers, telecommunications

carriers, content providers, website hosting providers, and others, meet to

exchange IP traffic with one another. This exchanging of regional, national,

and/or international IP traffic is generally known as “peering”.

In general, parties

peer at an Internet exchange in order to decrease network costs, to improve network

performance, and to make their network more redundant. Accessing many networks

directly at the exchange (that otherwise would have taken several network

“hops” through other parties), improves network performance. Having many

routes at an exchange through which traffic can be sent, increases redundancy.

As of 2014, there are

about 460 Internet exchanges operating worldwide. (See this list of Euro

IX Peering arrangements.)

In 1995, NSFNET was de-commissioned to

allow commercial networks to grow. But to ensure connectivity the NSF awarded

contracts for four IXs called network access points (NAPs):

Pacific Bell NAP in San Jose, Ameritech NAP in Chicago, Sprint NAP in NJ, and

MAE East run by MCI WorldCom in VA (which was already operating an IX). (MAE =

Metropolitan Area Exchange. FIX=Federal IX, CIX=Commercial IX,

pronounced “kicks”.) After these six EPs, other networks and EPs were build

commercially.

Today, the Internet connects about

100,000 networks and millions of hosts (and 100s of millions of users). The

backbone is provided by many company networks (such as Sprint) who sell the

connections to ISPs, who sell connections to us. These major

network operators connect their networks at various IXs around the world, such

as PAIX (SwitchAndData.com), now Equinix. (Show IX

list at PCH.net.) Today many cities have IXs (MAEs) that are

run by either by a commercial company who sells access and other services, or

by a cooperative of those who use it (and pay for it).

An ISP needs office space plus a

connection to an IX (usually a cable run by a local Telco), plus peering

(where roughly equal-sized ISPs in the same geographic region transfer each

other’s data for free) and transit (where you pay for it)

agreements with the other network operators. To participate, you will need a

block of IP addresses (from ARIN, however many IXs will allocate addresses from

a block they use to aggregate routes for that region) and an ASN (Autonomous

System Number). IXs usually offer various services, such as co-location

of your router and theirs. (See www.peeringdb.com for

details on IXes and ISPs.)

There appear to be

two public IXes in Tampa: TampaIX (at the Franklin Exchange, 655 N Franklin

Street) and IXTampa (at eSolutions, 400 North Tampa Street). See this

list of IXes, maintained by Euro-IX.

Several groups oversee the Internet: IANA,

ICANN, IETF. Parts of the Internet (domain name services) are now commercialized.

(Visit their websites for info.)

“The Internet” is not

the only global internet available. GLORIAD (Global Ring Network for

Advanced Application Development, http://www.gloriad.org/) started as a

1997 NSF-funded project that created MIRNET, connecting scientists in the

United States and Russia. In 2004, it was expanded to China, Korea, Canada and

five Nordic countries. The cyber-network now reaches half the countries on the

planet and 10 million IP addresses for an estimated 30 million or more users.

A new exchange point in Alexandria, Egypt, allows ties throughout the Middle

East, Africa and Central Asia and the Caucasus regions. Among other uses, the

network is employed to remotely operate telescopes and microscopes. It’s

particularly useful for data-intensive visualizations. Researchers can carve

out portions of the network for specific, uninterrupted long-distance

collaborations that might include a lot of video conferencing and other

intensive data exchange.

The Taj

network, funded by the National Science Foundation, now connects the U.S. and

India, Singapore, Vietnam and Egypt to the larger GLORIAD global

infrastructure, and “dramatically improves existing U.S. network links with

China and the Nordic region”.

The Internet2 network

(internet2.edu)

is a next-generation Internet Protocol and optical network that delivers

production network services to meet the high-performance demands of research

and education, and provides a secure network testing and research environment.

It connects over 60,000 U.S. educational, research, government, and community

anchor institutions, from primary and secondary schools to community

colleges and universities, public libraries and museums to healthcare

organizations.

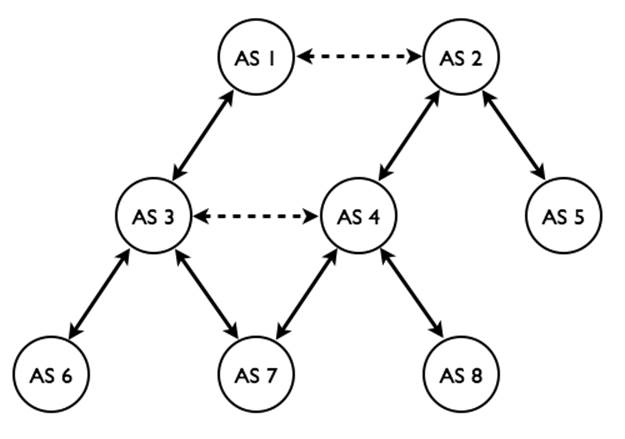

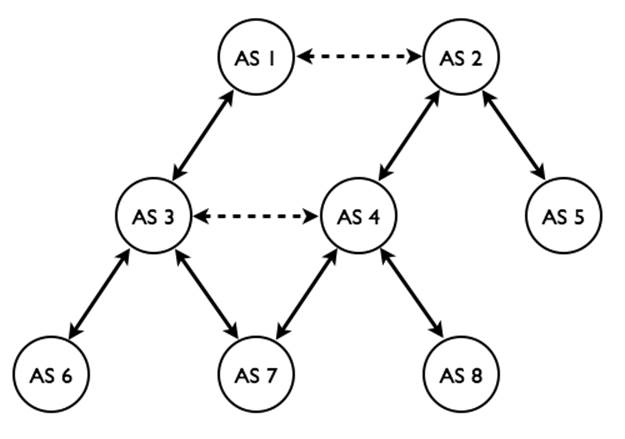

Autonomous

Systems (AS)

An AS is a

connected group of one or more IP prefixes (networks) run by one or more

network operators, and which has a single and clearly defined

routing policy. See BCP-6 (and RFC-1930).

Internet traffic (packets) are routed between ASs, which are seen as

block-boxes (single entities) for BGP routing purposes.

In 2008, the Internet

consisted of over 25,000 Autonomous Systems (AS). Each AS independently decides

whom to exchange traffic with on the Internet and it isn’t dependent upon a

third party for access. With requests for AS numbers mounting, the IETF changed

from 16-bit AS numbers to 32-bit ones (usually written as “x.y”). (See RFC-6793.)

Ranges of AS numbers (ASNs)

from 64,512–65,535 (16-bit) and 4,200,000,000 to 4,294,967,295 (32-bit) are

reserved for private use; see RFC-6996. You don’t have

to register these to use them, but they can’t be used for Internet routing.

An AS is often considered a

single administrative domain, but often customers share the AS of their ISP,

rather than define their own. Yet they are a different administration then the

ISP or other customers of that ISP.

If your network is

multi-homed (to two ISPs), you should participate in Internet routing, which

uses BGP and that in turn requires you to have an assigned ASN. (Some

routers, including Linux 2.6, can do multi-homing without using BGP; this is

described in the traffic management section below.)

You don’t request an AS num

from the IANA any more than you do IP addresses. Instead you use your RAR

(regional Internet Registrar), for North America that is ARIN (www.arin.net).

The cost is around $500 plus a small annual fee. See www.iana.org/assignments/as-numbers.

Internet

Service Providers (ISPs)

Early ISPs were companies that provided

a connection to the Internet for small businesses and users over dial-up phone

networks. (They had banks of modems connected to some computer, which in turn

connected to some bigger ISP, or directly to some IX.)

The largest ISPs are tier 1

(big) ISPs; they only peer with other tier-1 ISPs and never pay for transit

service (others pay them). (See below for definitions of peering and transit.)

These ISPs connect their networks at various IXs, and together their networks

form the Internet backbone. They are global ISPs. There are about 10 of these

(See Wikipedia “tier 1 network”):

AT&T, WorldCom, Sprint, Verizon Business (formerly UUNET), L3,

Global Crossing, Qwest, NTT Communications (Verio), Tata Communications

(formerly Teleglobe), SAVVIS, and TeliaSonera International Carrier. (Reliance

Globalcom (formerly FLAG) is one of the world’s largest telecom company, but

not technically a tier 1 ISP.)

Tier 2 (medium) ISPs have

networks of their own in a single geographic area (e.g., West coast of Florida)

but have not been able to convince any tier-1 ISPs to peer with them. So they

have to pay for transit service (plus connect their network to some tier-1 ISP

at some IX). However they usually have peering agreements with other tier-2

ISPs in the same geographical region, and in any case are generally

multi-homed. Verizon and road-runner might be considered tier-2 ISPs. Most

ISPs are tier 2. Some of the largest include PCCW Global and France Télécom.

Tier 3 (small) ISPs don’t

have any network of their own (just a single site LAN, with, say, dial-up banks

of modems). They pay tier-1 or tier-2 ISPs for transit service. However they

may have a peering agreement with other tier-3 (or rarely some tier-2) ISPs

that connect to the same IX. Many of these don’t multi-home.

USF and HCC connect

to two different ISPs, XXX and YYY. I think (but don’t know) that they used The

Franklin Exchange at 655 North Franklin Street, Tampa, and had a

peering agreement. One day XXX decided to not peer any more with YYY, but to

charge them for transit. When YYY didn’t pay immediately, the Internet routing

table for YYY was updated to not forward any traffic via XXX. But YYY’s router

still tried to peer the traffic (send it via XXX). The result was USF could send

traffic HCC, but HCC couldn’t send traffic to USF!

To be your own ISP

requires political connections to tier1/2 ISPs and other tier 3 ISPs in your

area. You will need to connect your network to some IX. Most ISPs provide

many services. ISPconfig.org

is a good open source ISP management package.

Modern ISPs have

evolved; there are few tier 3 ISPs left. Most have consolidated and offer

additional services, such as email services, or FTP access to a web server (so

you can upload content but not manage the server). As this sort of “second generation”

ISP became popular, more and more services were added, including hosting of

customers services. These are known as collocation facilities

(“colo’s”), or data centers. (These can be considered “third

generation” ISPs.)

Today, virtualization and cloud

computer clusters are becoming popular (the “fourth generation” ISP).

Demo Hurricane Electric’s BGP

toolkit site. (HE is an Internet Backbone, or Tier 1, provider,

also a colo.)

Content

Delivery Networks (CDNs)

Cybersecurity blog site KrebsOnSecurity

was hit on 2016/9 by a distributed denial of service (DDoS) attack having a

bandwidth between 620 and 665 Gbps — one of the largest such attacks to date.

Despite the attack, the website remained functional. How is that possible?

KrebsOnSecurity uses a content

delivery network, or CDN, to host its website. “A CDN is a

globally distributed network of proxy web servers deployed in multiple data

centers. The goal of a CDN is to serve content to end-users with high

availability and high performance.” (Wikipedia)

People pay CDN operators to deliver their content to their audience of

end-users. In turn, a CDN pays ISPs to host its servers in their data centers.

When a request is made for

some web server, the server is looked up in DNS. With a CDN, you change your

DNS records to point to an IP address provided by your CDN vendor. They in

turn return an IP address based on the geographic locations of the user and

current conditions, in order to provide the best response time. Many streaming

music and video sites use CDNs. In some cases, the ISPs do caching on their

own, to lessen the impact on their network.

To support HTTPS with CDNs,

you also need to either share their private keys with the CDN vendor (so they

can issue a proper certificate), or rely on a shared certificate issued by the

CDN vendor. In some cases, the delegation to the CDN doesn’t work well; research

is ongoing on improving this. Additionally, ISPs who cache content without

such certificates do not validate correctly (shows broken padlock icons). This

is a good thing, as the ISP in this case is essentially conducting a MitM (man

in the middle) attack, but it does break security for end users.

Besides better performance

and availability, CDNs also offload the traffic served directly from the

content provider’s web servers, resulting in possible cost savings for the

content provider. However, for dynamically generated content (or expired

cached content), the CDN still needs to contact the original website. In a few

cases, this can worsen the response time.

Although there are many

CDNs today, two of the largest are Akamai and CloudFlare. (KrebsOnSecurity

used Akamai.)

Peering

and Transit for ISPs

Adapted

from 9/2/08 article arstechnica.com/guides/other/peering-and-transit.ars

In order to make it from

one end of the world to another, the traffic will often be transferred through

direct or indirect interconnections to reach the end-user. The economic

arrangements that allow networks to interconnect directly and indirectly are

called peering and transit.

Peering: when two or

more autonomous networks interconnect directly with each other to exchange

traffic. This is often done without charging for the interconnection or the

traffic. An important limitation of peering is that it is open only to traffic

coming from a peer’s end-users or from networks that have bought transit. A

transit provider will not announce a route toward a network it peers with or

other networks it peers with or buys transit from.

Transit: when one

autonomous network agrees to carry the traffic that flows between another

autonomous network and all other networks. Since no network connects directly

to all other networks, a network that provides transit will deliver some of the

traffic indirectly via one or more other transit networks. A transit

provider’s routers will announce to other networks that they can carry traffic

to the network that has bought transit.

A transit provider receives

a “transit fee” for the service. This fee is based on a reservation made

up-front for the number of Mbps. Traffic from (upstream) and to (downstream)

your network is included in the transit fee. So if you buy 10 Mbps/month from

a transit provider you get 10 up and 10 down. The traffic can either be

limited to the amount reserved, or the price can be calculated afterward (often

leaving the top five percent out of the calculation to correct for

aberrations). Going over a reservation may lead to a penalty.

Every ISP will need to buy

some amount of transit to be able to interconnect with the entire world, and to

achieve resilience, an ISP will choose more than one transit provider. Transit

costs money, and as the ISP grows, its transit bill will grow, too. In order

to reduce its transit bill, the ISP will look for suitable networks to peer

with. When two networks determine that the costs of interconnecting directly

(peering) are lower than the costs of buying transit from each other, they’ll

have an economic incentive to peer.

Peering’s costs lie in the

switches and the lines necessary to connect the networks; after a peering has

been established, the marginal costs of sending one bit are zero. It then

becomes economically feasible to send as much traffic between the two network

peers as is technically possible, so when two networks interconnect at 1Gbps, they

will use the full 1Gbps. But with transit, even though it is technically

possible to interconnect at 1Gbps, if the transit-buying network has only

bought 100Mbps, it will be limited to that amount. Transit will remain as a

backup for when the peering connection gets disrupted. The money an ISP saves

by peering will go into expanding the business.

When a network

refuses to peer for another network, things can get ugly. Allegedly, a big

American software company was refused peering by one of the incumbent telco

networks in the north of Europe. The American firm reacted by finding the most

expensive transit route for that telco and then routing its traffic to/from

Europe over that link. Within a couple of months, the European CFO was asking

why the company was paying out so much for transit. Soon afterward, there was

a peering arrangement between the two networks.

Given the rules of peering,

we can examine how an ISP will behave when trying to build and grow its

network, customer base, revenues, and profits. To serve its customers, an ISP

needs its own network to which customers connect.

The costs of the ISP’s

network (lines, switches, depreciation, people, etc.) can be seen as fixed;

costs don’t increase when an extra bit is sent over the network compared to

when there is no traffic on the network. Traffic that stays on the ISP’s

network is the cheapest traffic for that ISP. In fact, it’s basically free.

Peering costs a bit more,

since the ISP will have to pay for a port and the line to connect to the other

network, but over an established peering connection there is no additional cost

for the traffic.

Transit traffic is the most

expensive. The ISP will have to estimate how much traffic it needs, and any

extra traffic will cost extra.

Typically, transit

providers charge a flat price per Mbps of connectivity. They charge for the

size of the pipe provided regardless of how far the traffic is going, or how

high transit demand is at that moment.

Transit providers are

starting (2011) to move away from constant price-per-Mbps (“blended rate”)

billing. Newer strategies bill by how far the data needs to be moved, and by

the level of demand. This is called tiered pricing. Most likely, tier

3 ISPs will need to recover the increased costs by charging customers more. (If

this appears to be a case of a small cartel fixing the price for a service they

have a monopoly on, you’re not alone.)

If the ISP is faced with

extra traffic (think large-scale P2P use), its first priority will be to keep

the traffic on its own network. If it can’t, it will then use peering, and as

a last resort it will pay for transit.

Routing and

Address Allocation [by Iljitsch van Beijnum, on Ars

Technica 8/29/11]

All communication going

across the network is put in packets, which are transmitted individually. This

has the advantage that there’s no call setup overhead, like in connection-based

networks (think landline phones). But the downside is that each of those

packets, holding not much more than one kilobyte of data, must be routed

through the network individually. So a big router that handles many millions

of packets per second has to take the destination Internet Protocol (IP)

address from each packet and then walk through its routing table to find where

next to send the packet. This makes the design of routing table data structures

and the algorithms to search through them an extremely critical part of the

Internet.

The design is so critical

that it’s necessary to limit the way in which addresses are given out so

routing tables remain small and efficient. Hence the original limitation that

IPv4 addresses could only be given out in class A, B, and C blocks, and the

current limitation that block sizes must be powers of two. This was a

traumatic change made in 1992-1993, allowing the Internet to survive a crisis

that could have killed it as routing tables were quickly outgrowing the

capacity of the day’s routers.

At around that same time,

the first three of the eventual five Regional Internet Registries (RIRs) were

formed, which took up the task of distributing IP addresses in North America,

Europe, and the Asia-Pacific, respectively. At this point, the policy “to each

according to his needs” was made explicit, and organizations requesting address

space had to sign a contract spelling out that “IP addresses aren’t property.”

The five RIRs are

·

APNIC (apnic.net)

Asia-Pacific region

·

ARIN (arin.net)

North and South America (and originally, sub-sahara Africa)

·

LANIC (lanic.net)

Latin (central) America and the Caribbean

·

RIPE (ripe.net)

Europe (and originally, parts of Asia and Africa)

·

AfriNIC (afrinic.net)

Africa

Lecture

4 — Networking Concepts (part II)

Network

Protocols

A protocol is

a set of rules used in communication, and usually that fulfill one or more

standards used in a networking model. Typically protocols define communication

with a peer layer. (That is, the software that implements TCP on

one host exchanges messages with the software that implements TCP on another.)

Sometimes a protocol suite

is referred to just as a protocol, and the various protocols in that suite are

then referred to as subprotocols. The most fundamental protocols

in a suite (and that other protocols rely on) are sometimes referred to as core

protocols.

There are a number of

popular protocol suites or stacks, including TCP/IP, IPX/SPX

(Novell, used to be very popular), NetBIOS (Microsoft P2P networks), and

AppleTalk (Macintosh networks.) The only one we need to learn in detail is

TCP/IP, the most popular today. (Later, TCP/IP will be covered in great

detail.)

Protocols that define and

transmit “L3” addresses (addresses that can be split into a network number and

host number, and so can be summarized by just the network number in internet

routing tables) are called routable protocols, because a router

can understand the addresses. Not all protocols are considered routable.

Ideally you would be

running a single protocol suite on an organization’s networks. But to support

legacy products, or when the network is upgraded, or when two companies merge,

you may end up running multiple protocol suites on the same multi-protocol

network. Today most PCs and routers can understand multiple protocols

if the right software is installed. Enabling a suite of protocols is referred

to as installing a protocol. Of course what really gets

installed is some software that implements a protocol. A multi-protocol router

can act as a gateway between the various protocols.

OSI

Reference Model (and DoD model)

At this point, the

standards and protocols for networking may seem simple or at least manageable,

but in practice the problem of correctly designing and implementing networking

is intricate almost beyond belief. At one time, you could only use a single

manufacture for all your networking devices and software, since there was no

hope of cross-platform compatibility. Even then, it was difficult for a single

manufacturer to get a suite of networking products to work together. For

example one manufacturer would have one device for tasks A and B, and a second

for C, D, and E, but a different manufacturer would have one device for A, B,

and C, and a second for D and E. It’s not surprising it was hard to mix

equipment or even software. Even the technical terms were confusing and used

differently by different vendors.

A vendor neutral reference

model allowed clear, precise definition of networking tasks and terms. A given

vendor could still make devices that implemented different parts of the model

but the task of comparing devices, communicating between users (and vendors),

and mixing different vendor’s products (that implemented the same parts of the

model) was now possible.

A reference model also

allows a way to break up the many parts of networking into smaller pieces, which

greatly simplifies the tasks of learning about networking. Understanding a

reference model can also be a great aid to troubleshooting networking problems.

One of the first network

reference models was developed by the US DoD for the forerunner of the Internet

(then called ARPAnet). It has 4 layers or parts (ref: RFC-1812#2.2.1,

STD-3):

Application, Transport, Internet, Link (or network access) (sometimes

the physical layer is included as a fifth layer.)

Much of current

Internet software reflects this four layer approach, where the application is

responsible for login (sessions), compression/conversion, etc.

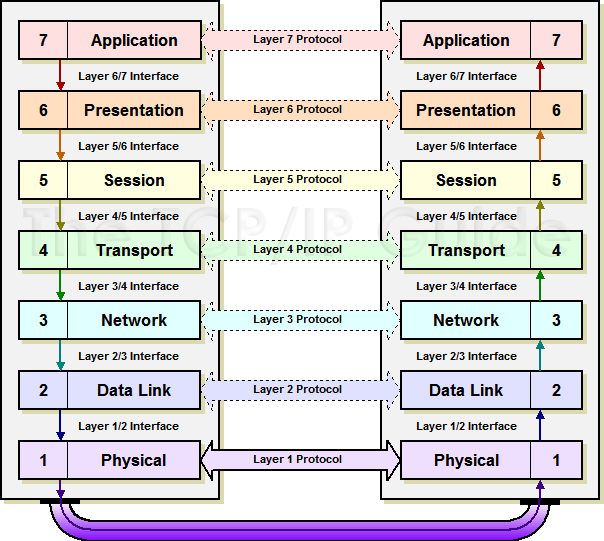

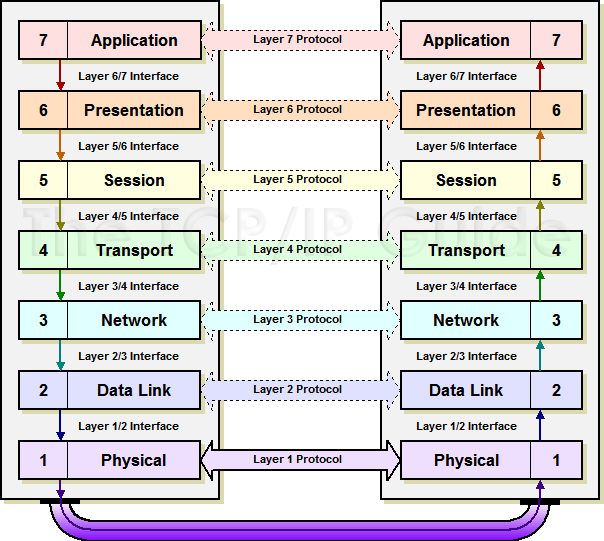

Meanwhile the ISO came up

with the OSI Reference Model, with seven layers:

Application, Presentation, Session, Transport, Network, Data Link, Physical

Phyllis Did Networking Till She

Passed Away

All People Seem To Need Data Processing

Later the Data Link layer was seen to be too complex

and was split into two sub-layers: Logical Link Control (LLC)

and Media Access Control (MAC). (In some ways, the LLC layer is

like the Transport layer and the MAC layer is like the network layer; both L3

and L4 are concerned with moving a packet from a host on one network to a host

on another, whereas both LLC and MAC are concerned with moving a packet from

host to host on a single network.)

|

|

OSI

Layer

|

Description and Functions

|

|

7

|

Application

|

Transfers information from program

to program. Everything at this layer is application-specific. This layer

provides application services (API) to applications for file

transfers, e-mail/messaging, and other network software services. Packets at

this layer are often referred to as messages. (ex: Telnet,

FTP, HTTP)

|

|

6

|

Presentation

|

Handles text format (e.g.,

Windows to Unix) and display code (ASCII to Unicode) conversions, encryption,

and compression.

|

|

5

|

Session

|

Establishes, maintains, and

coordinates communication between applications: login/authentication, session

timeouts, auto reconnection, synchronizing dialogs (transaction-ACK,

username-password, ...). (ex: connection to ISP, RPC, SQL,

NFS, ASP; most session handling occurs in application layer protocols.)

|

|

4

|

Transport

|

Ensures accurate delivery of data end to end (e.g., client on

one host to server on another), handles end-to-end flow control

and error recovery (via ACKs, sequence numbering, and retransmissions). Or

not. Sequencing also involves segmenting and reassembly. Name

service is logically at this level. Protocols: TCP (connection-oriented

services/protocols, segments), UDP (connectionless,

datagrams), DNS, LDAP, SPX

|

|

3

|

Network

|

Transmits packets host to host across an internet,

determines routes, handles the transfer of data (segmenting and

reassembling packets as needed), translate network addresses

(L3) into MAC (L2) addresses, gateway services. (Modern systems may also

handle some security at L3). Devices: routers, L3 switch.

Protocols: IP, ARP, RIP, IPX

|

|

2

|

Data Link

|

Encodes data, builds and

addresses frames, and transmits packets across a LAN. (ex:

IEEE 802.x, HDLC, ATM, PPP, FDDI, Frame Relay)

|

|

2 LLC

|

Logical Link Control

|

Controls frame synchronization,

flow control and error checking (ACK and re-transmission). Devices: Bridges,

switches. Protocol suites: Ethernet, Token-ring

|

|

2 MAC

|

Media Access Control

|

Controls how a host on the

network gains access to the data (is network busy now?), permission to

transmit it (includes addressing), logical topology. Devices: NICs

|

|

1

|

Physical

|

Manages hardware connections: NICs,

cables, repeaters, hubs. Data units are bits.

Topics addressed in this layer: connection type (point-to-point or

multipoint), physical topology, signaling (encoding), bandwidth use,

multiplexing. (ex: EIA/TIA-232 (formally RS-232), V.90, Ethernet)

|

In reality there

are 3 additional layers: layer 8 (User), 9 (financial) and

10 (political). Most problems occur at level 8, but the problems are

addressed by level 10 and end up limited by layer 9. – Limoncelli et al, 2nd

Ed. p. 189.

In practice, layers 1 and 2 are often considered

together. For example, a related set of L1 and L2 standards is Ethernet. L3

and L4 are also often considered together (and are often independent of the

standards used for L1 and L2). Layers 5, 6, and 7 are also often considered

together. (Compare OSI model with DoD model to explain why.)

Not shown is the queuing

layer. While not an official layer, most OSes support queuing operations

for traffic control and traffic shaping. While queues are used between all

layers to pass packets around, most Sys Admin queuing managment is done on the

queues between layers 2 and 3.

Note that many different

standards control the functions at each layer. For example, the IEEE 802

series of standards control the functions of the Data Link standards, EIA

standard for the physical layer, and so on.

Many networking products and protocols were invented

before (or if later, independently) of the ISO Ref. Model. To understand such

protocols you must be able to relate their standards to the model.

The services of most layers

are optional. Although L4 always includes multiplexing of data to different

flows on the same host (e.g., updating different web browser windows at the

same time), TCP provides connection-oriented services:

error recovery, flow control, and re-ordering of packets when they arrive out

of order. Essentially TCP provides a virtual circuit that is

setup, used, and torn down when finished. UDP provides datagram

service, which means each packet is sent with “best effort” delivery but

no connection-oriented services.

A model doesn’t enable

communications. It defines functional specifications for a group of protocols,

which implement the model. The protocols in turn are implemented by actual

software and hardware.

Qu: What is the difference

between L4 segmenting and L3 segmenting? Ans: At L4 an attempt is made to

segment (and sequence) packets so that no further segmenting (at lower layers)

is needed. Notice L4 is used only at each end, not in the middle of an

internet. Since this isn’t always possible (for one thing, different packets

may travel different paths through an internet as conditions change) segmenting

at L3 (and L2) may still be needed, although this results in inefficiencies.

Communications between Two Systems

Discuss how each layer communicates

with its peer layer on a different machine:

The packets from a higher layer are encapsulated

with headers (and trailers) that tell the peer about the packet

(IP headers say who sent the packet, where it is going, type of packet, length

of packet, checksum, etc.). The result is sent down to the software

implementing the next lower level. The entire packet received from above is

treated as data (headers and all) and the result is encapsulated again. Only

when the packet arrives at the physical layer is it converted to electrical

signals that are transmitted out the NIC and onto a cable.

(Show Diagram: |D| --> |HD| -->

|HDD| --> |HDDDT|)

Duplex

There are three types of

Point-to-Point communication methods: simplex (one-way, e.g. a

megaphone), half duplex (either way but only one direction at a

time, e.g., walkie-talkie), and full duplex (simultaneous two way,

e.g. a telephone). In reality, most full duplex channels are actually two half

duplex ones, considered as one. (Analogy with a four lane road: one-way

street, tollway ramps (one way during AM, the other during PM), and two-way

street (say, two lanes in each direction).

ISO versus TCP/IP Terminology

The ISO uses completely different

terminology than is common practice for TCP/IP literature. This is often very

confusing. Unfortunately there is little chance to adopt a standard

terminology for networking.

In the U.S.A you will likely only run

into ISO terminology when setting up routing that includes OSPF or IS-IS

protocols, or when setting up international WAN links. Be sure to have a

networking dictionary of terms bookmarked from the Internet before you run into

such situations!

Kernel Configuration for Networking

Networking is handled by the kernel.

Each protocol (suite) is usually handled by one or more kernel sub-systems

(typically present as modules), as are the physical network interfaces, the

logical network interfaces (loopback, PPP), and various network services such

as security, routing, traffic shaping, etc.

If the required sub-system (or module)

isn’t configured in the kernel then that network service won’t be available.

In the case of Linux you may need to rebuild the kernel to enable/disable some

network sub-systems. In other cases all sub-systems are present in the kernel

(possibly as LKMs) and you need to use various tools and edit various

configuration files to enable or disable these. (Chapter 5 of Hunt

explains what is needed for several OSes.)

Lecture

5 — Using Wireshark (formerly called Ethereal)

Wireshark

is a network protocol analyzer. Be careful of marketing hype!

If it isn’t called a protocol analyzer, it won’t be able to dissect the

packets for you. Wireshark has a GUI interface and is easy to use.

An older and related tool is called tcpdump.

Both tools can capture network traffic that passes by your computers NIC, and

either display it in real-time, or save the traffic in a file that can be

inspected later. The standard network traffic capture file format is pcap (also

called tcpdump format). (See man pcap(3).)

So you can capture with one tool and analyze with another. Both these tools

are available for all Unix, Linux, Mac, and Windows systems.

To capture traffic passing by a NIC

that isn’t addressed to that NIC’s MAC address requires placing the NIC in promiscuous

mode. If your NIC supports that, it usually requires root privilege to

do so. (On Linux, use “ip link set

dev eth0 promisc

on|off” or “ifconfig

eth0 [-]promisc”. Use “ip link

show dev eth0”

to see status; look for “PROMISC”.)

Wireshark uses the

utility dumpcap to capture

packets, and that program is executable by root

or group wireshark members only.

If you add your username as a member of the group wireshark, you won’t need to be root (usually; depending

on the security settings of your system, regular users may not be permitted to

switch NICs into promiscuous mode for example).

This works best with a hub. If using

switched Ethernet (everyone does today) remember that a switch acts like a

multi-port bridge. Once it learns the MAC address(es) reachable through

some port, it only forwards traffic for those destinations, rather than all

traffic. This means your NIC will only see packets addressed to it, or broadcast

packets.

Commercial grade switches (which

should be used in any organization, even a SOHO if security monitoring is

desired) include a SPAN (Switched Port ANalyzer, also

called a mirror or monitor) port that copies the data from the TX

line on other ports on the switch. (Some vendors call this a mirror port.)

You can often configure multiple SPAN ports, or per VLAN SPAN ports. However a

limitation of SPAN ports is that under heavy load some packets will not get

copied to them.

Taps (or Test Access Ports)

are special (expensive) devices that you can insert between a router and

firewall or a switch. These generally have 4 NICs on them: one to the router,

one to the firewall/switch, and two to the NMS. (Traffic on the TX line on

each of the first two NICs is duplicated, one to each of the last two NICs.

The NMS will usually have 3 NICS (one for remote access). The two NICs

connected to the tap should be bonded (IPMP) into a single virtual NIC,

which will capture all traffic. (A PC with appropriate hardware and 4 NICs can

be turned into a “home-made” tap.)

Even with a switch, so much traffic

passes by that most of the packets are unimportant and unrelated to whatever it

is you are checking. Protocol analyzers allow you to filter the

traffic saved, rather than saving all packets. The tcpdump man page gives a good explanation of the capture

filtering options available for both tcpdump

and wireshark (ethereal).

Other capture options are which

interface(s) to capture from, how long to capture, how many packets to capture,

and how much of each packet to capture. (Usually only the headers are useful

for debugging, but it is possible to capture all or part of the data.) To save

memory (and file space) it is possible to not save the Layer 2 framing (Ethernet)

data, which often is not useful for debugging).

(Qu: how many bytes are required to

capture all Ethernet, IP, and TCP/UDP headers? Ans: look in RFCs 791 (IP) and

793 (TCP, which is bigger than UDP). Ethernet frames vary in size depending on

type; the IEEE 802 standards can tell you the various sizes.)

Wireshark shows captured traffic in

three panes. In the top is a list of packets (the list pane),

one line of info for each.

The middle pane shows the details of

the various headers of a single packet selected from the list pane, and is

called the protocol pane: raw (physical layer), Ethernet (data

link layer), IP (network layer), TCP/UDP (transport layer), and some higher

layer protocol details (e.g., HTTP). Other protocols are shown as well such as

RIP, spanning-tree, ICMP, ARP, etc. This data shows in collapsible,

hierarchical way.

The bottom pane shows the raw data in

hexadecimal (hex) (the raw pane). As you click on various header

fields in the middle pane, the matching data shows in the bottom pane.

Wireshark allows you to set color