Modern RAM is dynamic RAM or DRAM. This is made of one

transistor + capacitor per bit. DRAM is cheap and dense, but loses the value

after a few milliseconds unless refreshed (read and re-written) before then.

Fast DRAM can have errors. Adding parity bits (ECC or error correcting code)

is used in servers used in data centers, when the cost is worth not having

errors.

Studies done in 2015 have shown DRAM errors are often

caused by one bad cell in a row or column of a memory block. Facebook and

others have added page retirement, which is the same as mapping out bad

blocks on a disk, but for RAM.

Static RAM or SRAM uses 6 transistors per bit and is nearly

twice as expensive as DRAM, but doesn’t need to be refreshed and so uses less

power and is around 6 times faster.

RAM is sold in modules called DIMMs

(dual inline memory modules) that plug into mating sockets on the

motherboard. This allows RAM to be upgraded or replaced as needed. The speed

of RAM is measured in terms of the original speed: DDR (double data rate), DDR2

(double DDR) and DDR3 (double DDR2). In 2012, the DDR4 standard was finalized;

it operates at a maximum of 1.2 volts (20 percent less voltage than DDR3), and

achieves data transfer rates of 3.2 billion transfers per second (double DDR3).

As of 2018, DDR5 is available and DDR6 has been announced.

RAM sold for use on servers often includes extra parity

bits per byte, so the RAM can detect any errors and, in some cases, correct

them. This “EC” RAM is a lot more expensive than commodity DRAM used in PCs.

RAM can have some performance issues

an SA should know about.

·

Most RAM is for dual channel use, which requires DIMMs to

be installed in identical pairs and in specific slots on the motherboard. This

doesn’t always happen at the factory, and then the BIOS will default to single

channel mode, halving the speed! You should visually inspect that the RAM

is installed correctly.

·

Another issue is that RAM reports to BIOS on POST its maximum

speed (using SPD or XMS method), but some BIOSes will ignore that and default

to some safe, slow speed! You can sometimes set BIOS parameters to override

that, to get the maximum speed you paid for.

·

Finally, the speed of the RAM and CPU is used by the BIOS to set

the bus speed. Cheaper RAM has fewer operating speeds (“modes”), and this

limits the CPU multiplier that can be used, thus slowing the whole bus. Suppose

you have a 2.4 GHz CPU. Using 667 MHz DDR2 RAM will end up setting the bus to

300 MHz (and a CPU multiplier of 8). Upgrading to 800 MHz DDR2 will result in

a bus speed of 400 MHz (and a 6x CPU multiplier).

This table from an Ars

Technica post shows the memory hierarchy:

Level Access time Typical

size

Registers "instantaneous" under

1KB

Level 1 Cache 1-3 ns 64KB

per core

Level 2 Cache 3-10 ns 256KB

per core

Level 3 Cache 10-20 ns 2-20 MB

per chip

Main Memory 30-60 ns 4-32 GB

per system

Hard Disk 3,000,000-10,000,000 ns over 1TB

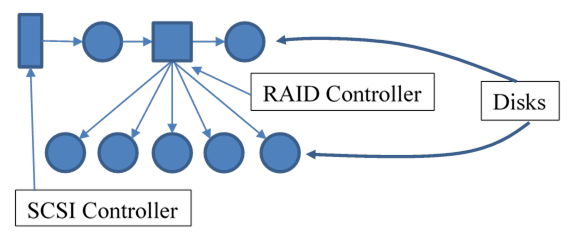

Devices Often

called peripherals, these include keyboard, video controllers,

ports, IDE/SCSI controllers, sound, clock, ...). Peripherals generally require

a controller; the device connects to the controller, which is installed in the

computer (using some add-on slot) and connected to the system bus.

Keyboard Input Keyboards

don’t (any longer) send ASCII when any (combination of) keys are struck. For

one thing, many keys have no ASCII (or even Unicode) equivalent (e.g., pgUp). Instead,

the keyboard sends numbers known as scancodes. These are only

roughly standardized. If you have an unusual keyboard you can use the setkeycodes(8) command to remap

some scancodes in the tty driver. (The first 88 scancodes cannot be

re-mapped.)

The kernel’s tty driver also contains a

table called the keymap (see keymaps(5))

that translates scancodes to standard key names called keycodes.

Software sees keycodes on key-pressed/released events. You can see scancodes

and keycodes in a non-GUI console window using showkey(1) command.

Many standard keymaps are available for

different keyboards (e.g., UK keyboards, German keyboards, etc.) The keymap

translates sequences of scancodes into keycodes. Use loadkeys(1) / dumpkeys(1) to change/view the

keymap table. (e.g., disable/move caps lock, define function and other extra

keys, etc.

The keyboard driver translates keycode

sequences into Unicode (e.g., ALT+,+c = ç) or some action (e.g., CTL+C sends a

signal), determines what strings to send when function keys are pressed, and

the effects of modifier (shift, alt, etc.) keys.

The readline(1)

library, used by shell, mysql, psql, ..., allows you to bind keycodes to

arbitrary meanings (e.g., symbols, Unicode, shell commands, etc.) with bind or ~/.inputrc. It is readline

that provides a history mechanism for these commands, and allows input editing.

Finally keep in mind this console

tty driver isn’t used for USB keyboards, remote SSH sessions, or GUI (X window)

sessions, so updating scancodes or keymaps has no effect in these cases.

(X has its own keyboard driver and you can remap its keycode table using xmodmap, discussed later.)

Solaris/Sparc systems use keyboards with an extra key,

“STOP”. During the boot process you take control by hitting keys such as

“STOP-A” (the STOP and the A key simultaneously).

Networking Hardware

Stuff you should know: NICs, static

(manually set) versus dynamic (automatically set) networking

information (parameters) including IP address, mask, default

gateway IP address (default route), DNS server(s) (up to 3)

IP addresses, DNS hostname, DNS domain-name, (FQDN), and default

DNS domain name. Usually set dynamically with DHCP. (Discuss many

“hostnames” issue: NIS, per system, NetBIOS, or DNS. Similar issues with

“domain-name”.) RH GUI tools get these confused and won’t always work.

How can you tell which hardware will work with your OS?

Proprietary Unix (not to mention Mac and Windows) vendors maintain hardware

compatibility lists. Some major Linux vendors do as well (such as Red Hat). However, many users send hardware

compatibility reports to various places that anyone can check, such as smolts.org or the ones

listed at linux-drivers.org.

System Clock

Traditionally, hardware generates a tick

event every so often; this is the CPU (and FSB if you have one) clock speed,

often 3GHz or more today.

The kernel maintains a software clock

known as the system clock with a lower resolution: every so many hardware

ticks, a software tick count is incremented. This counter is the system clock

used by the date command.

The tick frequency can be adjusted in

some cases to better match graphic card processing speeds (for serious

gamers). In Linux, this is controlled by the kernel value HZ, which in turn sets the

duration of a software clock tick called a jiffy. (See time(7).) On Linux, a jiffy is usually

4 mSec. (On Windows 7, it is 15.6 mSec.) (In short: the hardware generates

ticks, counted by the kernel, and every so many hardware ticks (depending on

HZ) a software tick event is generated and the system clock updated.)

A problem with this scheme is that the

CPU must handle a tick event every jiffy. Processors these days all have power

management capabilities. When they have no work to do, they can be put into some

low-power state, drastically reducing their power consumption. The more time

they can spend asleep, the less power they use. With this kind of processor,

that periodic tick is a liability. It means that even when the processor has

nothing useful to do, it will be awakened every jiffy just to update a counter

and then go back to sleep.

For some time now, most kernels support

tickless kernels, also known as dynamic tick. Linux integrated

support for this in 2007, and more recently OS X is also tickless. Windows 8

is tickless too. With a tickless kernel, instead of indiscriminately making

the timer fire every few milliseconds the kernel takes a look at all the timed

events outstanding. It then sets the timer for the earliest timed event it has

to wait for. So if the shortest wait the system has to attend to is 200

milliseconds, then the timer will be set for 200 milliseconds. This allows the

CPU to remain in the low power state longer.

Power-On Cycle

When the power is turned on, the CPU

starts executing a firmware program (often called BIOS) stored in nonvolatile

memory. (Newer systems have replaced BIOS with EFI, discussed later.) (FYI: BIOS

starts at address 0xFFFF0, not 0 as you might have expected.) BIOS varies by

motherboard brand. Often the term system firmware (or just firmware

alone) is used to refer to this BIOS/EFI firmware.

The first thing the firmware does is to

detect and test the hardware. After this POST (power on self-test),

the BIOS finds and initializes keyboard and monitor. (Sometimes, part of the

POST runs after the monitor is initialized, so results can be displayed.) The

CMOS memory (a type of volatile RAM backed by a battery) holds various

settings) is examined and the settings stored there are applied. Boot devices

(and some other hardware such as the bus controller, the keyboard controller,

etc.) are located and initialized.

Finally, the operating system kernel

must be found and loaded into RAM. With traditional BIOS and DOS disks, the MBR

of the first disk on the first controller is examined for a kernel loader

program; if not found then the loader in the active partition’s

boot blocks is used. If none is found there either, the disk is not bootable

and other disk drives may be tried.

In the past, BIOS has been

proprietary firmware provided by the motherboard manufacturer. OpenBoot

is BIOS defined by IEEE Standard 1275-1994, Standard for Boot Firmware,

and is used on Solaris SPARC and a few other server platforms. (See Solaris

console operations or the Solaris

OpenBoot Reference Manuals for more info.) For Linux, a group of

developers wanted a non-proprietary version and started the LinuxBIOS project,

now called coreboot. However, all of

this BIOS stuff has been displaced by newer firmware called UEFI (discussed later).

This firmware is executed immediately

after you turn on your system. See man pages for kernel, eeprom. The OpenBoot firmware can be configured for

interactive booting; it will pause for user instructions after the POST; the

prompt is “ok> ”.

Related to the power supply are the features suspend

and hibernate.

Suspend means to put

the DRAM (main memory) into a low-power state, and shut down as much of the

rest of the computer as possible. You can leave a laptop or desktop in

suspend, but it will slowly drain the batteries of a laptop unless plugged into

a recharger.

Hibernate saves

the content of your RAM to the hard drive using swap space, then shuts down the

computer completely. This feature is unavailable unless you configure enough

swap space.

Laptops can benefit from a hybrid approach that

saves memory to disk, then suspends. If the battery runs out, your PC can

resume from hibernate; otherwise it resumes from suspend.

The primary

tasks of the system firmware are to:

·

Determine the hardware configuration

·

Test (POST) and initialize the system hardware (Qu: What

hardware?)

·

Provide interactive debugging facilities for testing hardware and

software, for setting the hardware clock, and for changing CMOS settings

·

Boot the operating system from either a mass storage device or

from a network, using an OS loader (that the firmware searches for)

OS loader (or

boot loader or boot manager) is located by the

system firmware in order to load the actual operating system into memory. For

older BIOS/DOS disks, the loader is read from the MBR (Master Boot Record)

of the selected boot device, if present. If not, the firmware looks in the boot

block of the active partition for a loader (used with dual booting).

For EFI/GPT disks, the boot loader is

just a program that can be read from the disk, often called grub.efi or elilo.efi. (Not having to fit the loader into a single

disk sector is one advantage of EFI.)

A feature of UEFI is secure boot, which requires

the hardware check the digital signature of firmware, then have the firmware

check the digital signature of the kernel. Microsoft made a deal with some

hardware vendors to include only its public key in hardware.

To support Linux, Ubuntu and Red Hat have developed a

“shim” boot loader, signed by Microsoft, which in turn loads GRUB (the regular

boot loader). The Linux foundation has developed a pre-loader loader that is

similar to the shim loader, only it doesn’t check the signature of the full

loader (GRUB).

On some systems you can change a CMOS setting to disable

secure boot.

Some common OS loaders for Linux that

work with other systems as well (MS, Solaris) are LILO (and the EFI version, elilo) and GRUB (the most common today). loadlin.exe is used when HW must be

initialized from DOS drivers (very rare anymore). PowerPC systems use yaboot. Also common is efilinux.

An excellent source of information on boot loaders (and in

particular, for UEFI) can be found at rodsbooks.com.

For bootable removable media, such as

bootable CDs, DVDs, Flash, etc., the ISOLINUX boot loader

is often used. (It is related to other loaders from the same group, SYSLINUX).

To see which loader is in use for MBR

disks, run bootinfoscrypt:

sudo bootinfoscript --stdout | awk '/______________/ {exit}; {print

$0}'

GRUB is used directly by PCs using traditional BIOS boot.

However, some PCs do not support BIOS boot anymore, only UEFI boot.

The open source firmware Das U-Boot contains a partial

implementation of the UEFI specification. (A complete open source

implementation is offered by TianoCore EDK II. Companies like Phoenix offer

closed source UEFI firmware.)

On the 64-bit ARM architecture, Some Linux distributions

such as SuSE and Fedora use U-Boot to load GRUB as a UEFI application from

U-Boot. GRUB in turn loads and starts the Linux kernel via UEFI API calls.

Note Linux itself has an UEFI stub so it can be started as a UEFI application

on Intel x64 architectures.

Because the MBR or boot block is

limited in size (446 bytes for the loader code), BIOS-based OS loaders have two

(or more) stages. Only the first stage is in the boot block/MBR. This

software locates and loads the second stage loader from someplace, often the

unused space between the MBR and the first partition, or in the boot partition,

which can be a much larger program. (The initial stage is so small, it used

BIOS calls to read sectors using LBA addresses.) This first stage loads a

second stage, which may load another stage, which in turn actually loads the

OS, according to its configuration. With GRUB, the second stage is considered

part of the first one; both together are called the Grub core. That loads Grub

modules, usually filesystem drivers. Mention coreboot

(formerly LinuxBIOS), with a 3 second boot (!), mkbootdisk, uname

(and who -r).

Even before an OS is loaded, some firmware

may be available to control the boot process, perform remote management

functions, and various configuration tasks. This firmware is usually called LOM,

or Lights Out Management.

LOM is the standard system controller

for remote out-of-band management (often uses a separate, dedicated NIC) for

many types of servers and some Apple computers and PCs. The

hardware involved is usually called the baseboard management controller

or BMC. The BMC is usually a system on a chip (SoC) that you find

in various devices and smartphones.

LOM functions

include monitoring, logging, alerting, and basic control of the system. LOM is

particularly useful for remotely managing a server in a typical “lights out”

(loss of local power) environment, hence the name. (Note, the new UEFI

standard provides many of the features of LOM; both can be used if desired.)

Intel developed IPMI, an open

standard for implementing LOM, supported by Dell, HP, and others. You can usually get to that by powering up while holding

down the F12 key.

MEBx (Intel’s newest version of LOM) runs

digitally-signed code from Intel, meaning end users have no control over the

system. (Neither to virus writters!) This system can run even when the main

system is turned off (hence the term LOM) and can invisibly use your NIC if

there isn’t a separate LOM-dedicated one. The only requirement is access to network

IP ports 16992 and 16993.

Separate from LOM is a new (2010) Intel CPU feature. Trusted

Boot (tboot) is a pre-kernel module for Linux that uses Intel’s Trusted

Execution Technology (TXT) to check to make sure system files haven’t been

tampered with, before letting the system boot. (Windows supports TXT too.)

This offers protection against rootkits and other types of malware that try to edit

system files. (It also allows vendors to enforce DRM.) This is related to secure

boot.

Secure

Boot with Trusted Platform Module

The modern boot process is made more complex

(if that’s even possible) by secure boot features. To start with, many PCs and

servers today (2020) include hardware called a Trusted Platform Module

(TPM). Like the BMC, the TPM is a SoC, meaning it includes its

own CPU and firmware. The TPM typically is installed directly onto the

motherboard and uses either the LPC or SPI bus to communicate with the rest of

the system. There is a standard for TPM, ISO/IEC 11889.

The TPM includes a hardware random

number generator and various crypto keys in ROM, and can be used for several

purposes. The TPM can generate cryptographically strong hashes of the system’s

hardware and firmware, and can present that to external systems wanting to

validate the system (known as remote attestation). Finally, the TPM can

be used to encrypt/decrypt other data if asked. When hardware/firmware hash is

included in the process, the terms sealing/unsealing are used instead. This

ensures no data can be decrypted for any firmware or software unless the system

is in the exact same state as when it was encrypted.

Primarily the TPM boots first, even

before the BMC, and is used to validate its own firmware, then the BMC

firmware, the UEFI firmware, and all other firmware. Only when this is done is

the BMC released to run, which (as discussed earlier) holds the main CPU until

it runs its own checks. (EUFI also has its own security checks.)

Note that a TPM can validate the

firmware is the expected firmware, but not that such firmware is secure with no

vulnerabilities. Some companies therefore add additional hardware to augment

TPM functions. Some of these include Intel’s Boot Guard, Google’s Titan, and

Apple’s T2. (Apple’s solution prevents repurposing the computer to run

non-Apple software such as Windows, ChromeOS, or Linux.)

The whole secure boot process is much like a relay race,

where one team member passes a baton to another during the race. In a relay

race, the team members know each other but when booting a computer, each step

must be validated as the one expected, before control is passed to it.

The Boot

Loader

The boot loader need not be installed

on the same disk as the bootable partition. You can for example use a USB disk

to hold GRUB, which in turn could be used to boot the real disk. A USB drive

can also be useful when GRUB can’t access the real drive (e.g., with

non-standard SAN adapter hardware). Boot from the USB system instead.

“Live distros” on read-write media such as flash disks, can

also include some persistence; they need not be completely read-only.

The Sys V

Boot Process (discussed in detail later.)

“Boot” comes from bootstrap,

an old (Greek?) story of trying to lift oneself off the ground by pulling on

your bootstraps (shoelaces). Old days: set toggle switches on the computer to

enter instructions manually into RAM. After POST (some) hardware is discovered

by probing, which is then initialized monitor, keyboard, mouse, disk

controller, and disk. The OS loader is found, a small (<500 bytes) program

in the boot block, it loads the kernel and supplies some initial parameters.

Some Unix systems (those

not using standard Intel-architecture hardware) include extra steps. They ship

with extra firmware, which is like an extended BIOS, that usually

includes a diagnostic console where you can run limited commands to alter the

boot process. It also includes the boot loader, so you can pass kernel

parameters, boot into different run levels, etc. (For generic IA hardware, you

need a generic boot loader such as GRUB to provide most of that functionality.)

For IA, the boot process

uses BIOS to access and read the boot blocks of the disk (partition) containing

the loader program. The OS loader also uses BIOS to access the boot partition

to read various files including the kernel. Thus the boot partition must be

readable by BIOS, so no fancy FS types or exotic disk technology for that.

The kernel is loaded and

starts to run. One of its first tasks it to mount the root

partition. If the kernel doesn’t have the correct drivers compiled in to

access that type of disk and FS type, it won’t be able to mount root. Many

distros keep the kernel small by using loadable kernel modules (LKMs)

for various drivers, such as SCSI, LVM, etc.

But you can’t load LKMs

unless you can read them! The solution is to compile a kernel with the

required drivers, or use an initial ram disk (initrd or initramfs).

The kernel does have a driver compiled in for ram disks, so if a striped down

version of the root filesystem were put into a RAM disk image file in the boot

partition (“initrd-version.img”

or “initramfs-version.img”),

the kernel could read and mount that, load required LKMs, then destroy the RAM

disk and mount the real root partition.

Once the root

partition is mounted, the first and only process started in the boot process is

init. (A modern kernel

is organized as several independent internal or pseudo processes, shown

in [...] in ps output. The “/#”

indicate which CPU the pseudo process is running on.) init (according to its configuration file, often /etc/inittab or /etc/rc.conf) starts various

programs and scripts. The scripts perform such tasks as check for new

hardware, mount and check disks (with fsck),

clean /tmp, initialize

networking, etc. Examples of the daemons started are cron (and at),

mail, console (and serial port) connection-detection (getty/mingetty),

XDM, printing, database, xinetd,

SSH, etc.

A daemon is a service process that runs in

the background and supervises the system or provides functionality to other

processes.

The program to start can be

changed on the boot command line (init=path). This is sometime useful

to trouble-shoot your system: init=/bin/sh.

System

Shutdown

System stops all user

processes, stops daemons, syncs

inode tables to disk, and finally stops all processes and (hardware

permitting) turns off the power. Note some systems have a UPS that can

start/stop the system automatically.

halt,

poweroff, reboot, shutdown {-r|-h}

now. (Unix shutdown is slightly

different between vendors.) /etc/nologin

to prevent logins during lengthy shutdown; remember to remove when

rebooting. Linux supports using /var/run/nologin

instead, which is on a RAM disk and thus the file disappears after a reboot. /sbin/nologin and /etc/nologin.txt (the message shown by

/sbin/nologin) can be used as a

user’s shell, to prevent that user from logging in; there are other ways to do

this as well. Use the ftpshut

cmd to notify ftp users of impending shutdown (ftprestart

to cancel), which creates the file /etc/shutmsg

(default filename used). Other services that have remote sessions (such as

Apache) often have ways to prevent new sessions without dropping current ones.

At GUI login (XDM), there is usually

menu choices for reboot and shutdown. At console, you can configure the system

to allow halt, reboot and shutdown without a login

required. Note that at any time, CONTROL+ALT+DELETE

(aka the three-fingered salute, the Vulcan nerve pinch) will

reboot the system. This default behavior can be changed.

On Linux, a special combination of keys will perform

various shutdown and reboot functions, even if the GUI is frozen. Called the magic

SysRq keys, you hold down ALT+SysRq+<command-letter>. (The

SysRq key is often labeled “Print Screen”.) To safely reboot a frozen

computer, use this sequence of commands (remember to hold down ALT+SysRq):

REISUB. See Wikipedia’s

SysRq page for documentation. Note, only the left ALT key works for this.

Such commands are limited by default to

root and users at the console (via PAM). The shutdown

command also has a list of users allowed to use it from anywhere.

Never use the /etc/shutdown.allow file! It

doesn’t actually check if the user running shutdown is listed,

it checks if a user listed is logged in at the console. So if root is listed,

and some SA leave root logged in on the console, any user anywhere can shut down

the system!

GRUB

(Adapted from help.ubuntu.com/community/Grub2)

A new version of GRUB, GRUB2, is in use

on most systems today (2016) such as Ubuntu and newer Fedora systems. It differs

from GRUB (version 1, also known as GRUB legacy) in a number of ways:

·

GRUB 2 supports both BIOS/DOS disks as well as newer EFI/GPT

disks. (Fedora 14 and some older versions include a patched version of GRUB

legacy, that does support EFI/GPT very well.)

·

No /boot/grub/menu.lst

(or .../grub.conf). (/etc/grub.conf was a symlink to that

file.) It has been replaced by /boot/grub/grub.cfg,

which is not meant to be edited manually, even by “root”. The grub.cfg file is overwritten anytime

there is an update, a kernel is added/removed, or the SA runs update-grub.

·

The primary configuration file for changing menu display settings

is /etc/default/grub. There

are also the files (shell scripts) in the /etc/grub.d/

directory. While you can edit the existing files, to customize the kernel

options for instance, these files get rewritten whenever GRUB2 is updated. If

you want the same options used on all your menu items, you should edit the

default options list in the /etc/default/grub

file.

·

The files in /etc/grub.d

should all be executable shell scripts. When run (by update-grub) they append data to the generated grub.cfg file under /boot/grub. The placement of the menu

items in the GRUB boot menu is determined by the order in which these files are

run. Files with a leading numeral are executed first, beginning with the

lowest number. 10_linux is run

before 20_memtest, which would

run before 40_custom. If files

with alphabetic names exist, they are run after the numerically named files.

You can turn off any of these files by removing execute permission from them.

·

Custom menu entries should be added to the 40_custom file or in new files.

Based on its name, 40_custom

entries by default appear at the bottom of the menu. A custom file beginning

with 06_ would appear at the top

of the menu since its alphanumeric sorting would place it ahead of 10_ through 40_ files.

·

After making any changes to any of the GRUB 2 configuration

files, you must run update-grub

on Ubuntu and most other distros. On Fedora and RH-based distros, you must run

the command “grub2-mkconfig -o /boot/grub2/grub.cfg” instead. These commands update

the real config file used by the boot loader. No changes made in the

configuration files will take effect until this command is run. Also,

automated searches for other operating systems (such as Windows) runs whenever either

command is executed. (See also the grubby(8)

command, run automatically when the kernel is updated, to update grub.cfg directly.)

If you are booting using UEFI, a different config file is

used. Run:

grub2-mkconfig

-o /etc/grub2-efi.cfg

But if you are booting using classic BIOS

boot, run:

grub2-mkconfig

-o /etc/grub2.cfg

·

GRUB2 numbers partitions starting at 1 (one) instead of 0

(zero). Disks are still numbered from zero, so (HD0,1) would be the first partition on the first disk.

·

GRUB Stage 1.5 has been eliminated. Additional filesystem types

are directly supported by stage 1.

·

To find out where (which disk) GRUB 2 is installed, the user can

run the following commands: grub2-probe

-t device /boot/grub for the device and grub2-probe

-t fs_uuid /boot/grub for the UUID.

The

contents of the /etc/default/grub

configuration file are used by the shell scripts in /etc/grub.d. However, the configuration items can vary

between systems. Some of the options you may want to edit in this file are:

· GRUB_HIDDEN_TIMEOUT — If you don’t

comment out the *HIDDEN* items

the GRUB menu will not show. This is a number of seconds to display the splash

image while waiting for the user to hit the SHIFT key, to display the menu.

· GRUB_TIMEOUT — The number of seconds

to show the menu, if it is shown at all. Set to -1 to disable the timeout (and

thus wait for the user).

· GRUB_DEFAULT — Set this to a number,

to default to that entry in the menu to boot. (The first entry is numbered

zero.) Note that since the menu is changed if additional kernels or operating

systems (or if you add custom menu entries), the order of menu entries can

change unexpectedly. You can also set this to the word “saved” to boot the

most recently booted item.

· GRUB_CMDLINE_DEFAULT — This is set to

a string which is appended to the kernel line (passed as parameters to the

kernel), when booting in normal mode, for all Linux kernel menu entries.

· GRUB_CMDLINE — Appended to the kernel

line in both normal and rescue modes.

Many useful command-line options are found in dracut.cmdline(7).

See

the Ubuntu.com

wiki site for a complete list. Remember to run update-grub

(on Debian-based systems) or grub2-mkconfig

(on RH-based systems) after making any changes. The official GRUB

documentation can be found at gnu.org.

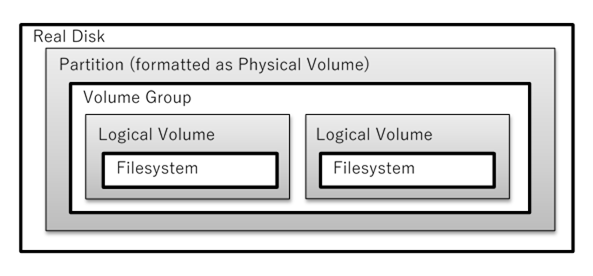

File Systems and Disk

Formatting — the Short Explanation

The term filesystem has several

meanings. One is the collection of files and directories on some host: the

whole tree from “/”. Another is

the type (structure of) a storage volume: ext2, FAT32, etc. Filesystem

can also refer the type of storage or its access: disk-based, networked, or

virtual. Today hard disks are by far the most used data storage devices and

an SA must know a lot about them.

Formatting a disk has two

meanings: a low-level format, done once at the factory

(but redo to map out bad blocks). Once that is done, you can partition

a disk (not generally considered “formatting”) into one or more storage

volumes.

Formatting a storage volume by

initializing it with some filesystem type is a high-level format.

Technically, “formatting a disk” only means a low-level

format and any partitioning or creation of storage volumes. A high-level

format is really “formatting a storage volume” but is is common to refer to

both tasks as “formatting a disk”. I prefer the terms low-level and high-level

format, but these terms are not standard.

A disk partition is a contiguous

set of disk sectors. Traditionally, partitions were on cylinder boundaries,

but since modern disks lie about the geometry you should ignore that. (SSDs

don’t have cylinders anyway.) A small table at the beginning of the disk lists

the partitions, including their start block and size (and other information).

The partition table is modified or

examined with fdisk (or

GUI equivalent) on all BIOS/DOS disk systems that have the partition table in

the MBR, as well as GPT disks (modern fdisk only). Other tools include gdisk (or GUI equivalent) for GPT

disks, or mdadm (or Solaris

metainit) to format software

RAID virtual disks; note modern LVM supports RAID directly. (Modern Windows

systems use diskpart for DOS or

GPT disks.) parted or the GUI gparted can manage either DOS

disks or GPT disks. (Demo fdisk -l; parted -l; on /dev/sda.)

(If planning to use ZFS, BtrFS, or

BetrFS, you generally use the whole disk (except maybe for a boot partition) as

a single storage volume, and use the filesystem features to create

“sub-volumes”. So no partitioning or logical volumes are used in such cases.)

Note that using gdisk on a DOS/MBR disk will

convert it to a GPT disk, with a protective MBR added as necessary.

High-level formatting (putting

a filesystem into a volume) is done with mkfs

(or a filesystem-specific tool such as format

or newfs).

Common FS types (discussed

and compared later): FAT*, vfat (pcfs or dos on some OSes; a.k.a.

FAT32), exFAT, NTFS, and soon, ReFS (Microsoft’s upcoming successor to NTFS).

Non-Microsoft FS types include UFS (Common to many Unix flavors; a.k.a.

FFS on some OSes), ZFS (Sun’s successor to UFS and open sourced), ext2, ext3,

and ext4 (current Linux standard), Btrfs (future Linux standard similar

to ZFS), ReiserFS, JFS (and JFS2), XFS, VxFS and freevxfs (SCO, Solaris, ...),

HFS, HFS+, HFSX, JHFSX (Macintosh), HPFS (OS/2). Note the safer FS types use a

technique taken from database technology known as journaling (discussed

later).

FAT (or NTFS) is used on most small flash

and other small removable media. Larger flash disks use exFAT or NTFS; but can

be formatted as any type you wish, just like any disk. A new (2012) choice is

the F2FS, or flash-friendly

file system. (See also F2FS

design notes.) MS also has a proprietary, patented flash filesystem called

exFAT, similar to FAT32. (In

2013, Apple licensed exFAT to allow easier file sharing via Flash drives.)

ExFAT doesn’t support journaling or permission, but add-ons to it may (e.g.,

TFAT).

For data CDs, iso9660 (Linux) a.k.a.

hsfs (Solaris, High Sierra FS). Default is “8.3” ASCII file names.

Rock Ridge extension allows long filenames and symlinks, Joliet

extension allows Unicode, and El Torito allows bootable CDs.

DVDs (and some CD-RWs) use udf (a.k.a. UDFS) or iso9660.

Note, extent-based FSes rarely

need defragmenting; they work well regardless until nearly full. A

defragmented FS will still perform better than a fragmented one; the difference

isn’t as large using extent-based FSes. (Fragmentation is discussed later.)

All filesystems suffer from data corruption. To check and

repair them, you use the fsck utility for that type of

filesystem. (See below for details.)

Device

Naming (and Partition Numbering) Schemes:

All hardware has a physical (or instance)

name, which shows its location in the device hierarchy. This is a tree-like

view. Each device is identified by its position on some bus; each bus is

connected to another bus, up to the top of the system.

Physical devices are found by probing

the buses to see what’s plugged into them. This information is collected by

the kernel and exposed through sysfs (Linux) mounted at /sys, or devfs (Solaris)

mounted at /devices.

The physical names are long and rarely

used. Instead, software uses the device logical names defined under /dev.

Internally the kernel uses instance names to

refer to devices, such as “eth0” or “tty0”. Solaris has a file, /etc/path_to_inst

to show which instance names map to which physical names. Linux has no such

file; you need to use various ls* commands and look under /sys. Often the error logs show only instance names.

Linux: /dev/sdXY for SCSI disks.

(Note X has no relationship to the SCSI ID.)

/dev/hdXY, X=a, b, ... for each IDE

controller slot., Y=1, 2, ... for each partition.

Linux now uses the SCSI code to

handle all disks, so “hdXY” no longer used.

/dev/sda4

= ZIP (zip always use partition 4 for whole disk).

/dev/fdX

= floppy X=0, 1, 2, ... (Note fd0H1440 for different formats)

/dev/ttySX,

X=0, 1, 2, ... serial ports

/dev/lpX

= X=0, 1, 2, ... parallel ports

/dev/loopX

= X=0, 1, 2, ... loopback device (for mounting image files)

/dev/sgX,

X=0, 1, 2, ... Generic SCSI devices.

(These include “fake” SCSI devices

such as USB scanners, cameras, joysticks, mice, and sometimes flash drives.

Some USB devices pretend to be SCSI disks and have sdX names instead.)

Modern systems use daemons such as udev to populate /dev. These can organize the

device names differently, usually in a deep hierarchy (find /dev -type d).

However, the traditional names are still provided as symlinks.

Some devices don’t traditionally get

names under /dev, such as

network devices that can’t read/write bytes (or blocks) at a time. This is

most commonly seen for Ethernet or PPP devices. On Linux, Ethernet devices are

traditionally named eth<num>. Some systems (e.g., Fedora since 15)

uses a new naming scheme, but the old scheme is still supported in many cases

(e.g., virtual machines), or by passing parameters on the kernel command line

(with GRUB).

FreeBSD

Device Names

BSD names devices after the

device driver used: /dev/acdn for IDE CD drives, /dev/adnspl for ATA and SATA (IDE)

disks (n=drive number, p=slice (a.k.a. DOS partition), l=BSD

partition letter (a.k.a. Solaris slice). /dev/cdn

for SCSI CDs and /dev/danspl

for SCSI disks. As on Solaris, BSD partition a is for the root slice, b

is for the swap slice, c is for the whole disk. As with Solaris, there

are 8 BSD partitions (slices) per slice (Linux partition); letters a to h.

Solaris

Device Names (for Solaris 10 and older, when not using ZFS):

Actual device names are in /devices (the Solaris equivalent of /sys) but uses links from /dev.

Solaris has software RAID

using “md” virtual disks. To

see what disk /dev/md/dsk/d33

really is: metastat .../d33.

The

naming scheme for Solaris disks is:

IDE: /dev/[r]dsk/cAdBsC (A=controller#,

B=disk#, C=slice#).

SCSI: /dev/[r]dsk/cAtBdCsD

(A=controller #, B=SCSI-ID (device #), C=LUN #

(usually zero), D=slice #) and /dev/sdnx

(n=disk num, x=slice letter). Solaris historical partitions:

a=root, b=swap, ...

Example: /dev/dsk/c0t0d0s1 or /dev/sd0b.

On x86 systems, Solaris uses “pP” instead of “sD” in the name to refer to

partitions rather than slices; “P”

is the partition number (zero means whole disk). p1 to p4

are the 4 primary “fdisk” partitions, P

> 4 refers to logical partitions. Ex: c0t0d0p2.

/dev/lofi/X

= X=0, 1, 2, ... loopback device (for mounting image files)

How GRUB

Names Devices

The floppy disk (if any) is named as (fd0) — first floppy. GRUB can

only reference a single network interface as (nd),

and this is almost always the interface the system firmware probed and

configured via DHCP. It is also possible to configure a network interface by

booting GRUB from floppy or other local media.

Hard disk names start with hd and a number, where 0 maps to the first disk found by

the BIOS, 1 maps to second,

and so on.

A second number can be used to specify

one of the partitions identified by fdisk

or gdisk (starting with 0 for GRUB legacy, or 1 for GRUB2). So (hd0,1) would be the first partition

on the first disk.

(hd0,5)

specifies the first logical partition of the first hard disk drive. The

partition numbers for logical partitions are counted from 5 regardless of the

actual number of primary partitions on your hard disk. Note in GRUB1, it would

be (hd0,4) instead.

Also, remember EFI/GPT disks don’t have

extended or logical partitions at all. they are just number from 1, up.

(hd1,a)

Means the BSD “a” partition (slice)

of the second hard disk. If you need to specify which PC slice number (i.e.,

fdisk partition number) should be used, use something like this: “(hd0,1,a)”. If the pc slice

number is omitted, GRUB searches for the first pc slice which has a BSD “a” partition.

Controlling

the System Boot Process

At boot loader prompt (LILO or grub

for Linux), you can control how the system boots up by choosing the run

level and setting other parameters. For disaster recovery (e.g., you

corrupted some critical files in /etc),

you must boot into run-level 1, called single user mode (or

sometimes maintenance mode or rescue mode). At the LILO prompt,

enter the word single (or number

1, or the letter S) after the name of the OS to boot:

LILO: linux single

If using GRUB, you add single (or 1) to the end of the kernel (or for GRUB2, linux) line. Demo using GRUB.

Fedora also supports an “emergency” mode that starts a

shell on the console, without running any other initialization scripts.

This is not usually what you want. Usually, you want “rescue” mode. There is also a confirm mode, which makes you type

y or n to run or skip service startup (or c to continue without confirming the

remaining steps).

You can also use a digit to specify the

run-level to boot. (Digits work for the systemd and upstart init

systems as well, although those init systems have other parameters to control

the boot process.) The default run-level is listed in /etc/inittab for Sys V init, and elsewhere for upstart,

SMF, systemd, or other init systems. Note “1”

is a synonym for “single user mode”.

If running Red Hat Linux, you can

control whether or not to use XDM (GUI). If the default is to use XDM and

today you don’t want to, enter linux

3 at the boot loader

prompt. If you normally don’t run XDM but today you wish to, enter a 5 instead. Different *nix systems

will use different values.

Non-SysV systems (such as *BSD ones)

don’t provide as many run-levels, only single-user mode and multi-user

mode. Because of this, there is no inittab

file and no directories named after run-levels. You can’t control if XDM

starts by specifying a run-level as shown above. There is no telinit program either; you use reboot, halt,

and shutdown, or type stuff on

the console while booting to determine run-level. BSD does have “security

levels” but that is different. See the BSD man pages for init and rc for details.

Modern versions of LILO and GRUB have

GUI boot loader programs. To get to a LILO: prompt, type CONTROL+X.

If using GRUB, hit ‘e’ to edit the command line, which in

GRUB will show a secondary window with several lines in it. Move the cursor to

the kernel (or linux) line and hit ‘e’ again. Now you can add the word single (or the digit 1) to boot to single user mode, or a

number to boot to that run level. After you hit enter, you will see the

modified command line showing. Hit ‘b’

(GRUB2: ^X) to boot.

The kernel parameters are documented in

man bootparam (which may be out of

date) and in the kernel-parameters.txt file, part

of the Linux kernel documentation. Generally, these parameters are used to

over-ride hardware parameters such as IRQ, DMA, I/O, and other settings. You

can also pass parameters to parts of the kernel or to init, to configure

various features (such as the console initial video mode: #line, font, ...).

Two options to consider for Linux are “quiet”, which suppresses kernel

log messages, and “audit=1”,

which will enable audit log messages. (This flag is undocumented, perhaps

because auditing is enabled by default in Linux now.) Run “auditctl -s” as root to check the current status.

In addition to kernel parameters and an

init parameter (the run-level),

additional parameters can be placed on the boot line. The list of these varies

by system and is generally not documented in any one place.

The parameters used to boot the system can be read from /proc/cmdline. These are

processed by the kernel, init,

and the boot-up scripts and commands. The ones recognized by the kernel and init should be documented in the man

pages (or someplace). For Sys V init systems, you can run this:

grep cmdline /etc/rc.d/{,init.d/}*

to see the parameters you can set on your system, that boot

scripts will use. (Note that systemd and upstart systems use

different files.) Systemd documents these in the man page kernel-command-line(7).

One common change to remove is “rhgb”

for Red Hat Graphical Boot, to boot much faster, and display more

diagnostic messages, by not stating X until later.

Some Fedora and other Linux distros use the plymouth graphical

boot instead. Unfortunately, the sequence of

switching from text mode to rhgb’s X server to text mode to

the display manager’s X server can cause significant screen flickering. Another

major drawback of rhgb is that boot messages

are not logged.

The main objective behind Plymouth is to provide a flicker

free system booting experience; as Ray Strode put it, “the ugly details of boot

up” are hidden behind a graphical (and possibly animated) splash screen. A

secondary objective is to log the boot sequence. Plymouth is designed to work

on systems with direct rendering manager (DRM) kernel modesetting

(KMS) drivers. If you have that, there is no flicker. Otherwise, plymouth

defaults to normal X rendering (and thus flicker) or a text mode.

Plymouth graphical boot is usually enabled by the “splash” setting in GRUB. Fedora (and probably RH) only have

plymouth, so that setting isn’t needed; just list rhgb on the kernel line. You

can download and use many graphical themes for plymouth. (To themes, use the plymouth(8) command.)

If rhgb is on the kernel’s command

line, rhgb (plymouth) is started early in the boot process. rhgb starts an X server for display :1

on a virtual terminal, so that it avoids conflict with the regular X server

which may be starting for display :0 on another virtual terminal. It

also creates a Unix domain socket (/etc/rhgb/temp/rhgb-socket), so

that boot scripts can communicate with it. As boot scripts execute, they can

use rhgb-client to send messages to rhgb, which then updates the text

and progress display.

When the system is finished booting, rhgb-client is invoked with the –quit option to send a terminate

request to rhgb. The user is then switched to the X server used by the

display manager. All the messages sent to stdout by the daemons as they start

are dumped into boot.log.

Red Hat (and some other) Linuxes: /.unconfigured or /etc/reconfigSys, or ‘reconfig’ is on kernel (linux)

boot cmd line.

Solaris: /reconfigure (or

from PROM cmd line: ok boot

-r). (There is also

a RH sys-unconfig command, and

similar commands for other systems.)

Install CD #1 can be used as rescue

CD to boot to single user mode. Boot it, then mount the faulty hard

disk partitions and fix them. (Works for Solaris too.) You can also try “init=/bin/sh” boot loader option. It

is probably better to try to boot in emergency

or rescue mode instead.

There are several “live” distros designed expressly for

system repair by sys admins, such as Grml. If

you have that on a CD or flash drive, you can use it to rescue systems.

Dracut

Dracut is used to control

the initial part of the kernel boot process. Typically, this involves

maintaining RAM disk image files. To make an updated boot RAM disk image

file for the currently running Linux kernel, use the command:

dracut /boot/initramfs-$(uname -r).img

$(uname -r)

To generate a new image

for a particular kernel version, specify that version; “uname -r”

reports the current version. For example:

dracut

/boot/initrd-3.4.2-31.img 3.4.2-31

The older command was

named mkinitrd; if your system

doesn’t have dracut, see if it

has the older (or a different) tool.

When you’ve made changes

to the kernel, or updated kernel modules, or updated some critical

configuration files in /etc (for

example, /etc/fstab), you will

need a new image file. New image files are only created automatically in

some cases, such as when installing a new kernel. (And since Fedora 21,

not always!)

Solaris doesn’t have this issue. At shutdown time,

the system always regenerates the boot disk image file automatically if any

changes were made.

In other cases, you

must generate a new image file manually. To do so for all installed

kernel versions, run:

dracut --regenerate-all

The dracut code that runs at boot time supports some extra

kernel parameters. These are documented in dracut.cmdline(7).

One of the most useful is rd.break, which can drop you down

to a shell at different stages (breakpoints) of the boot process. Use grep 'rd.?break' /usr/lib64/dracut/modules.d/99base/init.sh to find the breakpoints supported by your dracut version.

Then edit the boot

loader’s config file (grub.conf)

and add this line:

initrd

/initramfs-2.6.31.12-174.2.3.fc12.i686.img

An initramfs image

used by dracut (and similar systems) is a gzip compressed “filesystem”; really

it’s just a gziped “cpio”

archive file! The system doesn’t “mount” these images; it just extracts

their contents into some already created (and mounted) ramdisk.

Once the ramdisk is

populated from the image, the script /init

(or /linuxrc) runs if

possible. This can be used to load USB or SCSI drivers for such CD or

floppy drives. (Show how to examine: # gunzip -c /boot/initrd... >/tmp/initrd.cpio; mkdir /tmp/img; cd img;

cpio -i <initrd.cpio; less init)

You can view the

contents of these with the command lsinitrd.

Newer Linux systems have a type of ramdisk (“initramfs”) called “rootfs” that is always

mounted. It is used to ensure there is always something mounted (so the

kernel doesn’t have to check for an empty mount list). rootfs is also used during booting of a Linux kernel, used as the

initial ramdisk. When the real root FS is ready to be mounted, rootfs is

then emptied of files (to free up the RAM). The system switches to the

real root filesystem using a command usually called switch_root or pivot_root. The new root is

mounted right on top of rootfs.

Lecture

3 — Linux Install, Upgrades, Solaris Notes

Get Linux/Unix: http://distrowatch.com/.

To be demonstrated in-class, then

done individually. You will document every step in your system journal.

(Discuss the importance of keeping a journal.) Make sure your journal

has sufficient details so that someone could recreate your system exactly,

without guessing what you did.

Before installing, make sure your

firmware is configured acceptably. For example, UEFI or BIOS. (Note that

running the installer with UEFI/BIOS means the resulting system must be booted

under the same system; you cannot change that later without reinstalling.

Boot the GUI installer for Red Hat and

Fedora, called Anaconda.

This provides multiple terminal windows via “tmux” with various information and

a root shell console, you can use for trouble-shooting your install. See the

anaconda guide above for the keyboard shortcuts you can use to switch between

the consoles.

To switch from the graphical

installation environment to tmux, press Ctrl+Alt+F1. To go back to the main

installation interface which runs in virtual console 6, press Ctrl+Alt+F6. (Once

in tmux, use ^b,1-5 to switch consoles. For example, ^b then 2. 1=main

window, 2=shell prompt, 3-5 various log messages.)

During the install, keep track of

every choice you make. Either write down each choice as you go, or you can

use a feature of the Fedora Anaconda installer: hitting SHIFT+Print_Screen

will make screen-shots and will save them in /tmp/anaconda-screenshots.

/tmp is a RAM disk during the

install, so your screenshots will be lost when you reboot. Before

rebooting, save your screen-shots to your home directory. (Use ^B,2 to get

to the shell, then use cp.)

All installation choices can be changed

later, they are stored in /etc/*.

Also, all your choices are saved in a file, /root/anaconda-ks.cfg,

although that file may not be very easy to read.

Ask about any install choices you don’t

understand. Don’t be afraid to install more than once.

(Instructor’s workstation

configuration: System Settings-->Hardware-->Displays and monitors;

confirm the Dell monitor is primary, and both monitors have same pixel settings

(1029x768); click “Unify outputs”. May need to reboot, but make sure settings

are saved first!)

It is possible to rerun parts of Anaconda later, if you

muck up your system and don’t want to reinstall. Try running the command “systemctl enable initial-setup-graphical.service”, and then reboot. If you don’t have that, try after

installing the package initial-setup.

IaC —

Infrastructure as Code

In large data centers, new servers are

deployed in large numbers (hundreds to thousands). It would be impossible to

maintain accurate system journals for all of them! While system journals

remain a good idea in other situations, for such large-scale operations a

different approach is needed.

In such situations, tools are used to

actually build and deploy new clusters of virtual machines or containers.

These tools can take a description in a text file and use it to install,

configure, test, and deploy servers. Even the network between the racks of

servers is virtualized today (software defined networking), and any

required changes for that can be described in text files too. Tools such as

Puppet, Chef, Ansible, SaltStack, Terraform, and Vagrant can read configuration

description files and do the rest automatically. (You will learn more about

these tools in a future course.)

When deploying a new service or

updating (or retiring) an existing one, the system admin edits these files.

What has this to do with system journals? Well, these files precisely define

exactly what was done. So instead of human-readable journals, you write

machine-readable ones so the steps can be automatically repeated.

The practice is to use versioning

software such as Git to record every change to these files, including when, by

whom, and why (in a commit message). The versioning allows you to see

differences between any two points in time or to see the history of changes.

For this and other reasons, SAs today

need to have some software development skills; creating and editing these files

is similar to writing software.

Pre-Install

Questions to Ask (and Answer)

When planning a new system deployment,

where do you start? Some questions to consider include:

What is the purpose of your system?

Will a single system be enough (or SMP or cluster)? Should you spend the money

on SCSI, RAM, big disks, ...? Will you be building a network (such as a SOHO

or larger)? What kind of network to get (if any)? Where will you put the

equipment (a server closet a.k.a. data center)? What kind of

racks to get? Wire supports? Other equipment (monitor switches, carts, testers,

and monitors, ...) UPS? HVAC? Fire suppression? Security? What sort of

remote administration should be used (KVM, serial console server, ...)? How

many similar hosts must you administer?

Capacity

planning:

Some vendors will tell you to buy a

large number of servers, run the system awhile to see how it performs, and

return the unneeded servers for some sort of refund. There are other ways to

estimate accurately the number of servers required for some service(s) to run

and provide a required average response time (sometimes other performance

requirements too). These include:

·

Build a prototype system (or use the current one if any), measure

performance and response time, and extrapolate the required resources.

·

Trust the vendor to only sell you what you actually need.

·

Estimate the resources needed for each part of the system (web,

DB, email, etc.) and sum these values to obtain an estimate of the whole system

requirements.

·

Calculate the value needed based on the factors of the servers

you are considering: number, type, and speed of CPUs per server, amount and

speed of RAM and disk system, network bandwidth available, and the system’s I/O

performance data.

·

Hire an experienced consultant who does this sort of planning.

Speak with former customers (from a year ago) to see if they were pleased with

the results.

Without experience, the required

mathematical skills, or a huge budget to blow on consultants or needless equipment,

you can use queuing theory to estimate accurately the number of

servers required to provide some specific response time, by plugging in a few

values and graphing the result. There is a formula from Dick Brodine of

National Computing Group (DickBrodine@juno.com),

reported in the July 2006 IEEE Computer (“Mathematical Server Sizing”, pp. 91–93).

This formula is based on one by Kishor S. Trivedi in his book Probability

and Statistics with Reliability, Queuing, and Computer Science Applications,

(C)1982 Prentice-Hall. Trivedi’s model was designed to estimate response time

from a number of factors, assuming a single server. Brodine extended this

formula to measure response time from a cluster; by producing a graph of

response time versus number of servers (once you provide some estimates of

expected load and other data). Brodine hopes to sell his work so the sizing

tool he created is not available to us.

“Wikimedia’s entire collection of web sites—which includes

Wikipedia, Wikisource, Wikiquote, Wikinews, and several others—serves up

roughly 10 billion page views per month. At its peak, traffic can sometimes

reach 50,000 HTTP requests per second. The organization’s hardware budget to

date is roughly $1.5 million, and it spends $35,000 per month on bandwidth and

physical hosting [of over 200 servers]. Its entire technical infrastructure is

managed by a small IT staff consisting of only four paid employees and three

volunteers.”

Source: Wikipedia

adopts Ubuntu for its server infrastructure, Ars Technica post by Ryan

Paul, Published: October 09, 2008.

Post-install Tasks &

Procedures

(Remember to record any changes in

your journal!)

Most of these steps are performed by the installer

(Anaconda for RH, Ubiquity for Ubuntu). Historically, many installers don’t do

all these steps, or don’t do them in sensible or standard ways. You should

check all these items just to be sure they are set the way you want. (Note

we won’t learn how to do many of these until later!)

If you find some step that wasn’t done the way you like,

you can always change it, but think twice first, since other installer software

(and other administrators) may expect defaults settings and pathnames to be the

way the installer set them.

Note that many of these tasks are complicated and

inter-related, and will be discussed at length later in the course.

Remember to record all changes in your journal!

Read the release notes that

accompany your system. Perform any configuration tasks required. In addition,

note any changed/removed/added systems; you may need to update your system

procedures documents as well as any system documentation.

Configure the boot loader. You

will likely want to add or remove kernel parameters. Most boot loaders

(including GRUB) have an adjustable timeout for displaying a boot menu. On

systems where timeout = 0, you

will not be able to interactively boot the system! Consider changing this to 2

or 3 seconds.

Set HOSTNAME

(with static IP; rarely used with DHCP

since name associated with IP address not hosts. RH tools bad.) Discuss

static vs. DHCP, and DNS names. Also may need to set the system nodename

and host ID. (See networking below.)

Setup and run yum (or dnf) update

or non-Red Hat equivalent (Solaris: smpatch,

Debian: apt-get dist-upgrade; run apt-setup first), or use aptitude. Warning: Do not

physically connect computer to an untrusted network until this is done! (Of course,

this could be a catch-22 situation.) Updating a kernel may be difficult and

tricky.

Setup and verify networking.

Verify networking with ifconfig,

ping, route, and netstat.

You may also have to configure PPP or PPPoE.

Create the directory hierarchy for

locally installed software: /usr/local/{bin,lib,man,etc,src}

(or: /opt, /etc/opt, ...)

Set PATH,

MANPATH. Make sure these

point to standard directories for your system, such as /usr/local, /opt/*/bin,

/usr/usb, /usr/lib64/lsb, ..., for PATH and /usr/dt/share/man, /usr/openwin/share/man,

and /usr/sfw/share/man for MANPATH. Note the preformatted man

page location varies; for Red Hat it is in /var/cache/man.

The unformatted (“raw”) man pages are usually in either /usr/man or /usr/share/man,

and local man pages are usually put into /usr/local/man.

Other standard directories for some systems include /opt/*/bin and other places.

The default PATH setting rarely includes

every directory with applications in them. For Solaris the default PATH is /bin:/usr/bin. Some commonly

used “bin” directories can be added to the PATH. This is

actually a problem with Solaris because the default PATH omits many of the locations where software resides.

Here’s a sample PATH for Solaris: PATH=~/bin:/usr/local/bin:/usr/xpg4/bin:/bin:\

/usr/sfw/bin:/opt/SUNWspro/bin:/usr/bin:\

/opt/csw/bin:/usr/ccs/bin:/usr/X11/bin:\

/usr/dt/bin:/usr/openwin/bin

You should consider adding to the default PATH but the order matters! Many *nix systems support

multiple versions of commands including platform (i.e. hardware) specific

versions. Also (for old Solaris) POSIX versions are in one place (/usr/xpg[46]/bin), Gnu in another (/usr/sfw/bin), community software

in another (/usr/opt/csw/bin), and so on. See filesystem(5) for a list.

No one setting of PATH will satisfy

all users’ needs! One way to deal with

this is to have ~/bin (and/or /usr/local/bin or /opt/bin) first on the PATH, and to put symlinks in

there to the preferred versions of commands that wouldn’t otherwise be found on

the normal PATH.

Set the default time zone.

For Linux /etc/localtime

should be a copy (or preferably) a link of a file in /usr/share/zoneinfo/*; see also the man page for zic on Linux. (On some Unixes

you must set the environment variable TZ

for each user; for our time zone the proper setting is “EST5EDT” (or an alias such as “America/New_York”). For Solaris x86 you set the

timezone of the hardware clock in the file /etc/rtc_config.)

Make changes to the default environment.

These must be put into the system-wide login scripts for each shell. Check in /etc/login, /etc/bashrc, /etc/profile,

/etc/profile.d/*, etc.

Some changes to consider include: setting the default umask, un-colorizing ls, adding some standard aliases,

shell functions, and environment variables, and changing the default prompts

(traditional: users get “XXX$”,

root gets “XXX#”), and set the default

language (LANG variable in /etc/sysconfig/i18n is often wrong and

messes up man pages).

Edit /etc/motd and /etc/issue

and /etc/issue.net.

The issue* files contain the

prompts seen before the login prompt, and motd

(“Message Of The Day”) is seen just after a successful login. Motd is often

used for legal notices, for example “Unauthorized use of this system...” (but

can also be used for notices to users such as “Company picnic on Friday!”.)

This type of legal notice goes by different names such as AUP (Acceptable

Use Policy) or UCC (User Code of Conduct).

Legally, it would be best if the issue* file displayed before the login prompt. While your system

attempts this, if using PuTTY the issue* file is displayed after

the login prompt (but before asking for a password). This is because PuTTY

displays its own login prompt before trying to connect to the remote system.

The default issue* files identify the type and version of your

system. This is a security hole and should be changed to a legal notice or a

simple “Welcome to the FooBar system” message, or removed completely. (Note:

On some older versions of Red Hat Linux it is not possible to edit the issue* files directly as they get

recreated from a shell script on every reboot. This should be fixed too.)

With these files you can also perform

various cursor movements, set colors and text attributes (underline,

reverse-video, ...) by embedding escape sequences. The Linux (and most

versions of Unix) console drivers support a standard for this (ECMA-48). Some

of these codes are also supported by xterm

tools such as PuTTY. See the man page for console_codes

for details.

The issue

file (but not necessarily issue.net)

also support some backslash escapes that get substituted for system

information; see the various *getty

man pages (for Linux, see mingetty)

for a list of these.

Configure email aliases, especially

for root which should go to a human, the SA. Many systems do not

come configured with standard aliases, such as hostmaster,

postmaster, webmaster, abuse, etc. Some of these are required by various

standards. You need to ensure all aliases get resolved to an actual human.

Edit /etc/fstab

and make sure it has entries for all your partitions including Windows

partitions (if the computer is dual-booted), NFS mounts, and removable media

drives. (Note: A Modern GUI system uses HAL to auto-mount removable media

(under /media or /run/media), but only if no entry

exists in fstab!)

After installing the system and the

updates and additional software, build or rebuild indexes with mandb (formerly called makewhatis) and [s]locate

-u. slocate is the secure version of locate that (like find)

only shows stuff the user has permission to see. Use the -e option to exclude directories you

don’t want indexed (such as Windows partitions or the mount points for

removable media). Verify these will run automatically from cron. See crontab files in /etc/cron*.

Setup security: Set a system-wide

default umask. Create

any needed groups (/etc/group).

Other security tasks (and configuration files) you should consider changing

include configuring: fstab mount

options (nodev, nosuid, ...), PAM (/etc/pam.d/*, /etc/security/*), TCP Wrappers (/etc/hosts.{allow,deny}),

configure printer access (/etc/hosts.lpd,

/etc/lpd.perms, or /etc/cups/cupsd.conf), configure

and verify firewall (iptables

on modern Linux), and check default permissions of standard directories

and any added user accounts.

Configure SELinux (or Solaris

zones). (For now, edit /etc/selinux/config

and change “SELINUX=enforcing”

to “SELINUX=permissive”.)

Set the root (and other admin)

passwords. Any passwords you create or change must be recorded. However,

the system journal is not the place for that! (It is not a secure

document.) The common solution is to put passwords in a sealed envelope. Only

the officers of the company can open this envelope, which is usually kept in a

safe deposit box at a local bank.

Adjust user account defaults (/etc/login.defs on Linux or /etc/default/login on Solaris). Adjust

the default values for grace period, expiration date, etc.). Add/remove/edit

the files in /etc/skel. (*BSD:

see mtree(8).)

Setup ssh (/etc/ssh/sshd_config,

...) and disable telnetd and ftpd.

Turn off unused services. The

files /etc/inetd.conf (and /etc/xinetd.d/*) control which daemons

to run such as ftp, telnet, ssh,

databases (such as Oracle, MySQL, Postgres), etc. Turn off any you don’t need

and configure the rest individually (web, mail, ssh, ...) Use TCP Wrapper (tcpd) for added security. Note modern

Linux systems don’t use xinetd anymore; you turn these off from systemd.

Verify and set up /dev entries for your hardware.

The installer should have auto-detected your hardware but it may not find all

PCI devices (such as PCI modems), or ISA devices. You may have to configure udev (via udevadm) or some similar sub-system, instead of directly

editing special files in /dev which

can be done like so:

cd

/dev; ls -l ttyS*; man mknod; mknod

ttyS4 c Majr minr

ln -s /dev/ttyS4 modem

Hardware may need configuration

and usually there are tools to do this. Look for tools such as sndconfig, netconfig, modemconfig,

etc. (In RH see {system,redhat}-<tab><tab>

for lots of such tools. Demo.)

Configure mtools to map

drive letters for your disk, flash, ... drives.

Create additional user accounts

as needed.

Install and configure extra software:

Sun Java, Adobe Flash plug-in (get.adobe.com/flashplayer),

Audio/Video codecs (www.xiph.org), etc.

This often means adding additional software repositories such as rpmfusion. You may need to install various

packages to perform some tasks. For example, install redhat-lsb in order to use various LSB commands. Fedora

20 doesn’t come with any MTA installed, so if you want any email access,

you need to install Postfix or some other MTA. Note, any software not

installed by default that you want, can be installed at any time.

Configure servers: web, mail,

etc.

Create and initialize any required

databases.

Configure logging (/etc/*syslogd.conf). Note, Fedora may

not install syslog (or rsyslog) by default; see journald and journalctl.

Copy the install log (often

found in /tmp or /root).

Backup working configuration

(all of /etc at least).

Setup and configure additional

security measures such as log file monitoring (logwatch) and intrusion detection systems (tripwire). (You should try to protect

all directories with IDS except for /home,

/var, and all tmp directories.)

Configure crontab and anacron

(or periodic). See all the crontab files in /etc/*cron*. Cron jobs take care of rotating logs,

installing updates (if applicable), or anything else system or application

specific, that requires periodic maintenance. Automate as much as possible.

(Note many of these tasks are now done via systemd timer units.)

Add some monitoring solution (Nagios /

Zabbix / you name it). You don’t want your server to get stuck just because one

of disk partitions got full.

Other tasks.

Documentation

Create checklists for complex tasks,

stuff you don’t like to do (so you can delegate or take a vacation or get

promoted). Examples: new hire, termination, new server install, ...

In the past, such documentation was

pure text, or possibly created with nroff (the formatting used for man pages).

Today, it is more common to use Markdown formatting in

blogs and forum posts, wikitext,

and HTML. Sysadmins should have some familiarity with these formats.

Maintain a wiki.

(Or a CMS (Content Management System) for an IT portal.)

There ought to be a place where

everyone involved can find out the following at least:

·

What business function is the server associated with?

·

What group is responsible for maintaining the operating system?

·

What is the acceptable use policy for this server?

·

Are there other licensed products on the server and what is the

licensing agreement information?

·

What is the support information (includes backup schedules, time

to restore, and the procedures to request services)?

·

What is the hardware support contract number?

·

What are the software support contract numbers?

·

When do the support contracts expire?

·

Are there any packages that have been compiled on the server and

if so, document the procedure used to compile them (source, version, make

flags, etc.)?

·

Is the machine physical or virtual? If it is virtual, what is the

visualization technology and who is responsible for maintaining the host

server? (It is handy to also list the VM machine name, which is often random

and different from the host name. Once at HCC, one of the VMs was lost since

the users only knew the host's name, not the VM name. In the end, we shutdown

one VM at a time until the right one was affected. This caused a lot of

disruption as you can imagine.)

·

Is there any custom software on the server and if so, who wrote

it and or supports it?

·

If it runs any service that uses public-keys and/or certificates,

where are the keys stored, when do they expire, where is the password for the

private keys written down (and who has access), and who is responsible for all

that?

·

Are there any "extra" steps that need to be completed

before / after a reboot?

·

What non-standard ports are open and why?

·

When and how are backups done?

·

What IP (network parameters) information is associated with the

machine?

·

What standards, laws, or regulations apply to the host, when was

the last compliance audit done, when is the next due, and who does them?

·

If the machine is physical, what is the exact location of the

server (rack and shelf numbers)?

Basically, if your predecessor was

suddenly unavailable, what information would you need to immediately take over

support of the server seamlessly? That’s what should be documented.

Summary

Before placing a server (physical or

virtual) into production:

1. Configure

2. Harden, secure,

and patch

3. Document

everything

4. Test the server

5. Implement a

backup process (and test it)

6. Implement

monitoring (and test it)

System

Deployment and Initial Testing

Once the system is basically setup, you

should test it before placing it into production. This is especially important

for new hardware (which will sometimes fail at once; if it doesn’t, it usually

means the hardware is good). Virtual hosts need testing too, but not as much.

In addition to exercising the hardware,

you need to make sure your new host is secure enough for the environment it is

going to be in, and that is has enough resources (memory, could, disk,

bandwidth) to make sure you can fulfill the SLA (Service Level Agreement).

Before anything goes into production it