In the output of ls -l, the 11th column (the first after the permissions) is normally a space. But it can be some other character to indicate the presence of ACLs (a plus), SELinux labels (a period), attributes, or extended attributes. The characters are not standardized. For the Mac OS X Unix, the output of ls -l includes:

@: the presence of extended metadata; see it with “ls -l@”

+: the presence of security ACL info; see it with “ls -le”

The mdls(1) command might also be of interest for another view of the metadata. The metadata is stored in a file that begins with ._ (dot underscore) and then the normal filename. So the metadata for file.txt would be found in ._file.txt.

To change your password at any time, use the interactive passwd command (see also pwgen and apg; not standard programs). (Short of writing a C program, there is no standard way to non-interactively set passwords, so you can’t batch add user accounts! There are non-standard ways, however.)

script — saves a transcript of your shell session to a file. Filter (some) unprintable stuff with col command.

screen — Start work in class, detach session, go home/work, attach session, continue working! Also supports logging (transcript) of the session to a file.

Getting help — man pages and other resources

A man page (or manual page) is a quick reference sheet for some topic. Man pages are organized into sections of related topics (user commands, administrative commands, file formats, etc.). If some topic has a man page available, it is often written with the section number in parentheses, like date(1) or reboot(8).

Not all systems have identical sets of man pages (because not all systems have identical commands). Even the number and purpose of sections can differ between systems. For example on Solaris an administrative command is in section “1M”, so you’ll see a reference to reboot(1M) on that platform. On Linux section 8 is used.

A given topic may have multiple meanings and thus appear in several sections. To see a list of available man pages for a given topic, plus a brief description, use man -f word. This is equivalent to whatis word. The word may be a topic or any word in the brief description of a topic. (Try whatis open.)

To view a particular man page when several have the same topic, you need to specify the manual section as well, or use “-a” to show all matching pages. To specify a manual section on Linux use man secNum topic (1=user commands, 5=file formats). On Solaris use man -s secNum topic, sec 4=file formats.

Use whatis intro to see a list of sections. Then read each intro. Discuss man -k topic (demo fifo) which is equivalent to the apropos command.

All man pages are divided into more or less standard sections (and possibly sub-sections). Not all man pages have the same sections, and not all man page authors use the same section names (e.g., “INTRODUCTION” vs. “BACKGROUND”, “DESCRIPTION” vs. “OPTIONS”).

The most important sections are the NAME section, which lists the command or topic and the brief description, the SYNOPSIS, which lists the legal use of a command or library function, and the DESCRIPTION (and/or Options) section. Other sections include OUTPUT, RETURN VALUES, ERRORS, USAGE, EXAMPLES, ENVIRONMENT VARIABLES, EXIT STATUS, SEE ALSO, BUGS, and AUTHOR. (Few pages will have all sections, and may use other names!)

Understanding SYNOPSIS syntax: “[]” (optional), “|” (separates mutually-exclusive choices), “...” (can repeat item on the left), and sometimes “{}” (list mutually-exclusive options, when at least one is required; this is rarely used). The synopsis syntax is described in the SUS standard. Discuss and demo these when showing the man pages listed below.

Demo man pages for who. Discuss meaning of the terms command line options, switches, arguments, and parameters. Show how to combine one-letter options. For who(1) discuss and demo: -H, -i, -Hi, ...

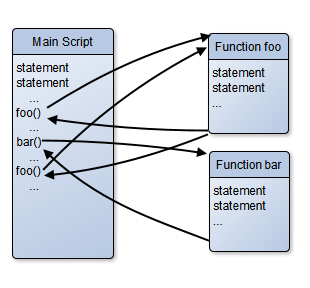

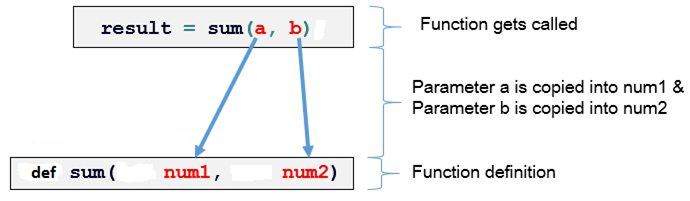

The words following the command name are called arguments or parameters. The word parameter is ambiguous because shell (or environment) variables are also known as parameters (e.g., positional parameters, parameter expansion, special parameters). According to POSIX, a variable is a parameter that uses a word for the name, not a digit or symbol. So “$foo” is a variable, and “$#” is a parameter.

Many commands take special arguments called options (or switches) that alter the command’s behavior. Options are usually a single letter each, preceded with a dash. Some commands support long option names, (usually) preceded with two dashes. Single letter options can be combined into a single word, with a single initial dash.

To keep the discussion as clear as possible I refer to the words on the command line as either options or (non-option) arguments. Usually options are listed first, followed by the arguments, which are often a list of filenames.

Some options require (or permit) an argument. Most single letter options with arguments permit a space between the option and the argument. (The space is required when the argument might be confused with additional one letter options: is -xyz the option -x with argument yz, the options -x and -y with argument z, or three options?) With long option names, the argument must be separated with a space, or more commonly with an equals sign: --longoption=argument.
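As a small illustration of these conventions, the sketch below runs sort with the -n option and the -k option (which takes an argument), written two ways. The sample records and the variable names are made up for this example.

```shell
# Three made-up records: a word, then a number.
records='b 2
a 3
c 1'

# Options given separately, with a space between -k and its argument.
spaced=$(printf '%s\n' "$records" | sort -n -k 2)

# The same options combined into one word. The option that takes an
# argument (-k) comes last in the group, followed directly by its argument.
joined=$(printf '%s\n' "$records" | sort -nk2)

printf '%s\n' "$spaced"
```

Both forms sort the records numerically on the second field, so the two variables hold the same text.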

POSIX has standardized the common convention of having “--” indicate the end of options and the start of (non-option) arguments. This is useful when an argument may start with a dash. (The shell still supports the older convention that uses a single dash.)
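A minimal sketch of the “--” convention, using a file whose name starts with a dash. It assumes mktemp -d is available (it is on most current systems) and works in a scratch directory; the name scratch is arbitrary.

```shell
# Work in a scratch directory so no real files are touched.
scratch=$(mktemp -d) || exit 1
cd "$scratch" || exit 1

touch -- -n        # create a file literally named "-n"
ls -- -n           # lists that file instead of parsing -n as an option
rm -- -n           # removes it

# Prefixing the name with ./ is another way to hide the leading dash.
touch ./-x && rm ./-x
```

Without the “--”, each of these commands would try to interpret -n as an option rather than as a filename.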

Discuss more and less commands (spacebar for next page, b for back, q to quit). Consider using man man.

Discuss other sources of help: info (from FSF; demo info mkfifo), help (Bash only), /usr/share/doc/*, http://www.tldp.org/.

Files and Directories

Filenames (any length, any chars except “/” and null, avoid weird chars, spaces, and leading dash; note extensions not recognized by OS). .name (dot-file) is hidden.

The 8th issue of the POSIX/SUS standard will likely forbid newlines in filenames. This is because many utilities produce a list of file names, one per line (e.g., find). Also, avoid NTFS forbidden characters, and those that could confuse other commands (a colon in a filename would confuse scp while an equals sign would confuse awk, for instance).

Show hierarchy: top=“root” spelled “/”.

A filename with slashes (forward) is a pathname. The slash separates the components: directory and file names. (A filename can be thought of as a simple pathname.) Directories allow (logical) groupings of files in the hierarchy. (Show relationships: parent, child, and sibling.)

Complete (absolute) and partial (relative) pathnames (Qu: What’s the difference? Ans: starting slash). Extra slashes in pathname allowed, incl. trailing slashes for a directory. pwd, mkdir, rmdir, cd. (cd<enter> takes you home, cd - takes you back to where you just were.)

In a shell script, a common mistake is failing to check the exit status of a cd command. That is dangerous since subsequent commands that create, delete, or just list files in the current directory will be operating in the wrong one.
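One common way to guard against this is sketched below. The function name purge_tmp and the *.tmp pattern are hypothetical, chosen only for illustration; the sketch also assumes mktemp -d is available for the demonstration.

```shell
# purge_tmp DIR: delete the *.tmp files in DIR, but only if the cd works.
# The body runs in a subshell, so the caller's working directory is unchanged.
purge_tmp() (
    cd -- "$1" || exit 1      # stop here; never run rm in the wrong directory
    rm -f -- ./*.tmp
)

# Demonstration in a scratch directory.
work=$(mktemp -d) || exit 1
: > "$work/a.tmp"
: > "$work/keep.txt"

purge_tmp "$work"                                   # removes a.tmp only
purge_tmp "$work/missing" 2>/dev/null || echo "skipped: cd failed"
```

The second call fails at the cd, so the rm never runs and the function returns a non-zero exit status that the caller can test.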

Qu: Must filenames be unique? Ans: only absolute pathname must be.

Working directory (aka cwd, ... spoken of as “in” some directory), home directory, root directories (per disk partition, per system (“/”), and /root home dir), the . (current dir) and .. (parent dir) directory entries.

Some directory commands: ls (-a, -d, -R), pwd, cd, mkdir, rmdir (rm -r).

Pathnames are subject to certain limits on allowed characters, length of each component, and overall length. Also there is the issue of using “..” in a pathname. Often what is needed is the canonical representation of a pathname (for portability, or to compare with another). You can use the standard pathchk [-p] utility to make sure a pathname is valid; it returns an error message (and exit status) if there is a problem with that pathname on your system.

To convert a pathname to a canonical form, use the Gnu utility:

readlink -f bad_pathname

Or you can use a pure POSIX/SUS solution, which is much uglier:

fullname=$(cd -P -- "$(dirname -- "$file")" && pwd -P) && fullname=$fullname/$(basename -- "$file")

Or use this solution (which doesn’t always work, but usually will):

LINK=$(\ls -dl link)

printf '%s\n' "${LINK#*-> }"

Gnu readlink command has an option “-m” that works even when the symlink points to a non-existent file. Another useful command to know is symlinks, which will scan directories and report on all symlinks found. (Demo “cd /var; symlinks .”)
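To see the pure POSIX approach in action, here is a sketch that builds a small directory tree containing a symlink and then canonicalizes a pathname that goes through it. The names root, real, alias, and data.txt are made up for the demonstration, and mktemp -d is assumed to be available.

```shell
# Build a scratch tree:  root/real/data.txt  and  root/alias -> real
root=$(mktemp -d) || exit 1
root=$(cd -P -- "$root" && pwd -P)      # canonicalize the scratch dir itself
mkdir "$root/real"
: > "$root/real/data.txt"
ln -s real "$root/alias"

# A pathname that passes through the symlink.
file=$root/alias/data.txt

# Resolve the directory part physically, then re-attach the last component.
fullname=$(cd -P -- "$(dirname -- "$file")" && pwd -P) &&
    fullname=$fullname/$(basename -- "$file")

printf '%s\n' "$fullname"
```

Note that this only resolves symlinks in the directory part of the pathname; if the final component is itself a symlink, it is left as is.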

In addition to this use of dirname and basename, you can use “basename path suffix”. This strips suffix from the filename and can be used for things such as:

c99 "$file" -o "$(basename -- "$file" .c)"

You can achieve a similar effect using parameter substitution: “${file%.c}”.
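The following sketch compares the two approaches side by side. The pathname is hypothetical and does not need to exist, since these operations work on the text of the name only.

```shell
file=/home/user/src/hello.c          # hypothetical pathname

dir=$(dirname -- "$file")            # directory part
name=$(basename -- "$file")          # last component
stem=$(basename -- "$file" .c)       # last component with the suffix removed

stem2=${name%.c}                     # same result, without running a command
noext_path=${file%.c}                # suffix removed, directory part kept

printf '%s\n' "$dir" "$name" "$stem" "$stem2" "$noext_path"
```

The parameter expansion form avoids starting a new process, which matters in loops over many files; unlike basename, it does not remove the directory part.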

Lecture 3 — The vi Editor (and vim)

Because of the fundamental idea of *nix that most files contain text you will find yourself creating and editing text files often. Shell scripts too are just text files. Working efficiently with large files or many files, or quickly performing complex text processing, requires a powerful text editor.

While simple Notepad-like editors do exist (such as pico and nano), they are not efficient when working with large files or when performing complex text processing tasks. Today the standard power editor used on *nix certification exams is vi or its descendant, vim (Vi IMproved). Vi commands are used in other utilities as well, such as less.

Initially text editors were designed to work on teletypes (limited keys, rolls of paper, and no CRT). One early editor (still available) is ed. A more powerful editor was ex. Later when CRTs became available a visual mode was added to ex. You can start the editor in open (ex) mode with ex file, or in visual mode with vi file. You can then switch back and forth between these modes.

Today vim is widely used in place of vi. It is mostly compatible with vi but with many extra features. To learn vim interactively, start it with the command vimtutor. (It takes about 30 minutes to work through.)

The vim command has many names, and works differently depending on the name used to start it. In addition to vimtutor, you can also use vi, ex, view (read-only), gvim and gview (GUI), evim and eview (“easy” mode; use ^O to type a command), and rvim, rview, rgvim, rgview, revim, and review (restricted mode). There are others too, such as vimdiff. You may have to install additional packages to have all these available, but (except for the GUI mode) there are command line option equivalents.

Start vim (with and without filename(s) listed). Note on Linux, vi is a link to vim. You can list multiple files on the command line: vi foo bar boz. vim opens the first; when done you can edit the next one listed by using the :n or :wn commands. To review the filenames listed, use :args (or :arg). To start editing the listed files over again use :rew. Change the args list with :args files (can use shell wildcards).

When starting vim the named file is copied into RAM (called the buffer). As you make changes, only the buffer is changed. When you use the :w[rite] command the buffer contents are written back to disk. (There is an auto-save feature too; see “autocmd”.)

Modern vim supports multiple buffers at once. You can either switch between them, or use window commands to split the window into sub-windows (panes), each a different buffer.

Vim commands come in different types. There are commands to move the cursor around the buffer, including searching commands. These are called cursor movement commands or simply movement commands. There are other commands to change settings, to copy files to/from buffers, to manage window panes, to insert, delete, or change text, and commands to format the text in various ways. There are also some miscellaneous commands that don’t fit neatly into any category (such as undo).

The editor has modes. In command mode (or normal mode), the keystrokes you type mean commands. In other modes such as insert mode your typing is considered data to add to the buffer. There is also a replace mode similar to insert mode but using type-over to replace rather than insert text. Vim actually has about a dozen modes, and the same keystroke may have different meanings depending on the current mode. (Note this use of mode is unrelated to the earlier use of open mode and visual mode.)

You hit the escape key to switch from any mode to command mode (and to cancel a partially entered command).

You enter replace mode with “R” or “[num]s” (num characters will be replaced—substituted—with what you type).

You enter insert mode with any of the following: a, A (append after current cursor/end of line), i, I (insert in front of current cursor/beginning of line), o, or O (after or before current line—opens a line).

Although there are GUI versions of vim with menus for the commands (including for Windows) all commands can be typed in. Many are a single letter but some are longer sequences. As the original vi used nearly every letter and symbol for commands, the many new ones introduced in vim generally (but not always) are two letter sequences.

Some commands require you to enter line ranges or filenames or other stuff. Generally such commands start with a colon (“:”) which causes the cursor to jump to the last line of the window. These commands don’t take effect until you hit the enter key. (This is sometimes called last line mode but it is really just part of command mode. Such commands are just the older ex open mode commands.)

Cursor Movement and jumping commands

The most basic movement commands are the arrow keys. Touch-typists generally prefer the h, j, k, and l (left, down, up, right) commands since you don’t have to move your hands. Also space, enter (and +), - (minus), and backspace keys move the cursor. Other Cursor movement commands: w, W (forward one word), b, B (back one word), e, E (end of word), ge (end of previous word), ) and ( for next/previous sentence (any [.!?][])}]*[[:space:]] ends a sentence), } and { for next/previous paragraph (a blank line separates paragraphs).

All these movement commands can be preceded with a number, to repeat. So “3w” means to move three words forward.

Other movement commands move the cursor some place specific, so should not be preceded with a number: 0, $ (beg/end of line; [HOME] and [END] keys usually work for this too), [num]G (go to line num or last line), 1G (same as gg), H, M, L, num| (go to column num), % (matching parenthesis).

You can set bookmarks with mchar and later jump to a mark with `char and 'char (jumps to beginning of marked line). Use :marks to view bookmark list.

A jump is one of the following commands: ', `, G, /, ?, n, N, %, (, ), [[, ]], {, }, :s, :tag, L, M, H, and the commands that start editing a new file. If you make the cursor “jump” with one of these commands, the position of the cursor before the jump is remembered in a list. You can return to a previous position with the '' or `` command, unless the line containing that position was changed or deleted. CTL+O moves the cursor to a previous jump position in the jump list, tab (CTL+I) to the next position in the jump list. Show the list with :jumps (see also :changes).

^] and ^T (jump to /return from tag under cursor). A separate tags file is used to list identifiers and their file/line/column. This file can be generated automatically for a directory of programs in a variety of languages using the *nix command ctags (or Gnu Global, or gtags), or you can create it manually (vi/vim can’t create the tags file, but it has a simple format). Note the vim help feature uses tags. You can jump to any tag using :tag name, and view a list of known tags with :tags.

Searching

Searching with /RE, ?RE (reverse search), n (repeat last search same direction), N (repeat last search in the opposite direction).

fchar, tchar (jump (almost) to char on current line), ; (repeat), , (repeat backwards). A * searches for the word under the cursor.

Data Changing Commands

Many vi/vim commands follow this pattern: [count]operator[count]movement. For example d) means delete to end of sentence, and >} means indent to the end of the paragraph. Repeating the command letter is a shortcut to specify the whole line as the movement, so “yy” copies the current line, and “d5d” deletes five lines.

The following operators are available (M means [count]motion):

["R]cM change (copy, then replace)

["R]dM cut (delete)

["R]yM copy (yank) into register (does not change the text)

["R]p paste (also P to paste in front)

~ toggle case of current char (and advance cursor 1 char)

g~M toggle case

guM make lowercase gUM make uppercase

!Mcmd filter (and replace) through an external program

gqM text formatting (re-format selected text according to formatoptions)

g?M ROT13 encoding

>M shift right <M shift left

If the motion includes a count and the operator also had a count before it, the two counts are multiplied. For example: “2d3w”, “3d2w”, “6dw”, and “d6w” all delete six words.

When changing data, you can specify (in vim, not vi) a text selection, not just a motion. These selections include aw (a word), as (a sentence), ap (a paragraph), and iw, is, and ip. These select the whole word/sentence/paragraph the cursor is in; with aw/as/ap, the trailing white space is included in the selection. With iw, is, or ip, only the inner word/sentence/paragraph is selected. So if the cursor is on the h of line “this is easy” and you type “ciwthat<ESC>”, the line becomes “that is easy”.

When text is cut or copied, it is saved in a register (a clipboard). The last 10 items are saved in registers "0 through "9. You can also put stuff into named registers (one-character names only), by prefacing the command with “"register”. For example, “"adas” will cut the current sentence into register a. Use :reg to view the contents of the registers.

Using ranges

A range is a list of lines to apply a command to. Only commands that start with a colon (“Ex” commands) can use a range, which may be zero addresses (defaults to current line), one address (just that line), or address,address (all lines between the two addresses, inclusive). An address is a line number, “.” (the current line), “$” (the last line), “address+num” or “+num”, “address-num” or “-num”, or “/RE/” (the next line that matches the RE). A “%” is a short-hand for “1,$”. When using + or - (an offset), num defaults to 1 if omitted, and address defaults to “.”.

You can also use 'mark for the line specified by the bookmark. More complex examples: “/RE1/,$” and “/RE1/+1,/RE2/-1”.

Many colon commands can use a range:

:range d (deletes (cuts) whole lines specified in the range; ex: :1,10d or :.,$d for current line to the end). (See below for more complex cases.)

:range s/RE/replacement/flags — search and replace. By default only the first match per line is replaced; use “g” flag to replace all matches, and “c” to confirm each change. “e” means to ignore errors (useful in more complex cmds.)

REs (discussed in detail later): \< and \> (start/end of word), [^list] (any char not in list), \s and \S (white-space and non-white-space char), \e (escape), \t (tab), \r (return) \n (newline), \b (backspace).

:range g/RE/cmd — Run the vi/vim cmd only on the lines in range which match the RE. (Also :g!/RE/cmd to run on lines that don’t match RE.) Some examples include:

:.,.+9g/foo/d Delete lines containing “foo” within a 10-line range

:.,.+9g/foo/s/x/y Change x to y on lines containing foo, in range

:.,.+9g/^/exe "normal! 10\<C-A>" Add 10 to the first number on each line, in range. (This is how you can execute normal (non-colon) commands with :g. In this case, “10ctrl-A” to add 10 to the first number on the line, if any. CTRL-X decrements.) Note that numbers with leading zeros are treated as octal by default; change the nrformats setting to empty (“:set nrformats=”) to stop that.

:range w [file] (save range lines (to file))

:range y [register] (save range lines (to register))

:line pu [register] (put/paste lines (from register) after line)

:range t [address] (copy range lines below address)

:range m [address] (move range lines below address)

:range j (join range lines into a single line)

:range norm commands (run the Normal mode commands on each line), for example: :.,.+4norm gUU

:range ce (center), :range ri (right align), :range le (left align)

:range co address (copy range lines below line address, often “.”)

:range mo address (move range lines below line address)
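Range commands such as :g and :s can also be run from a shell script, without opening the editor interactively. The sketch below is one way to do that, assuming an ex command is installed (on many systems it is provided by vim) and that mktemp is available. The file contents and the name exfile are made up for this example.

```shell
if command -v ex >/dev/null 2>&1; then
    exfile=$(mktemp) || exit 1
    printf '%s\n' 'foo one' 'bar' 'foo two foo' > "$exfile"

    # -s: batch ("silent") mode; Ex commands are read from standard input.
    # The leading colon is not typed in batch mode.
    ex -s "$exfile" <<'EOF'
g/^bar$/d
%s/foo/baz/g
wq
EOF
    cat "$exfile"
else
    echo 'ex is not installed; nothing to demonstrate' >&2
fi
```

The first command deletes every line consisting of just “bar”, the second replaces all occurrences of “foo” on every line (“%” is the range 1,$), and wq saves the result.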

Using argdo and colon command shortcuts

:argdo %s/\<foo\>/bar/ge | update

argdo runs commands (separated with pipe) on all files in the args list. Here, all (“g” flag) words “foo” will be replaced with “bar” in every file in the args list. If any changes are made the files get saved (“update”). See also :windo and :bufdo.

On the Ex (colon) mode line, you can scroll through the Ex command history using up and down arrows. TAB auto complete works as in DOS (cycle through each possible match); ^D shows a list of possible matches. “:partialCmd<Up>” scrolls through the history, only showing possible matches.

Other vi/vim commands

Use :help and :options. ^Wq closes current window (so does :q and :close), ^Ww (or ^w^w) moves to next window, ^Ws (or :split) to split current window into two. :new or ^Wn for new window.

^e and ^y (scroll up/down 1 line), ^u and ^d (scroll up/down 1/2 page)

zenter, z-, and z. (or zz) means to scroll current line to top/bottom/middle

^ONormalModeCommand (From input mode, run one command; example: “^Ozz” will center the current line in the window.)

K (lookup word under cursor in man pages, handy when scripting)

* (search for the next occurrence of the word under the cursor)

^g (show status)

^r register (in input mode or with a “:” cmd, pastes the contents of register)

ga (show ASCII for char under cursor)

Many other vim commands start with g. To list them, try :help g

^Vchar means to insert char literally (try inserting tab, newline, or control chars)

^L (redraw screen)

. (a period) means to repeat last change. (Common use: n.n.n. ...)

Note, using dot is a good reason to plan your changes, so repeating the last change does what you want. Also, note that using cursor movement while in input mode ends one change and starts another.

@: means to repeat the last Ex (colon) command, on the current line.

:cmd1 | cmd2 means to run the Ex cmd1, then run cmd2.

:e file means to edit file instead.

:!cmd means to run external cmd. (See also !Mcmd, above. In the command, you can use “%” to mean the current filename.)

:!! means to repeat the last external cmd.

:read arg (paste from arg, arg = a filename or !command)

:ab (abbreviations) save typing by allowing you to define a short word to expand to a longer phrase. While this can be done in any mode, use :iab word phrase so word only expands in input mode (and :cab to cmd mode only). Example:

:iab SA system administrator

:map is similar to :ab but instead of a text phrase you can define any sequence of vim commands. :imap and :cmap for input mode/cmd mode. There are cmds to list abbreviations and maps (:ab and :map), to clear them, etc.

:map word list-of-cmds Defines a macro, for example:

:map <F2> GoDate: <Esc>:read !date<CR>kJ

:iab ,t <table border=0 cellpadding=4>

:imap \p <Esc>o\<P>\</P><Esc>3hi

(Notice how function keys, enter key, and the escape key are entered as a sequence of 4, 4, and 5 characters.) You can map any key or sequence of characters. If that key or sequence already has a function, the old function is no longer available. Note F1 is already used. Often backslash-char is used, or comma-char. Much more complex macros are possible.

ga shows the numeric value of the character under the cursor. This can be useful to convert, for example, curly quotes, non-breaking spaces, or em-dashes to ASCII equivalents. For example, run “LC_ALL=POSIX vim file”, then search for “/[^[:print:]]” to find a non-ASCII character. Type “ga” to get the Unicode value, say 201c (left curly quote). Now do “:%s/\%U201c/"/g” to convert them all to straight quotes. Repeat for other non-ASCII characters. (You might use recode or iconv instead, if you know the encoding used, to convert to ASCII.)

Some settings: on/off is :set name/noname, others are :set name=value. (:if too.) Vim-only settings without “set”. “:se all” to view. Some settings:

set softtabstop=4 " sets soft tab stops every 4 columns

set shiftwidth=4 " hitting tab indents 4 columns

set backspace=indent,eol,start " allow backspacing over everything

set expandtab " convert all tabs to spaces

set autoindent " indent new line to same as previous

set background=dark " use a color scheme good for black background

set laststatus=2 " always show status bar

set ruler " show cursor position in status bar

set showcmd " shows partial commands (e.g., "dd") in status bar

set ignorecase " ignore case when searching

set nohlsearch " don't highlight search matches

set incsearch " use incremental searching

syntax off " don't use color at all

set fileformat=dos (or unix) " or set ff=dos or unix

set list " show non-printing chars and EOL (as $)

set history=100 " save 100 previous vim commands, default is 20

set textwidth=72 " Wrap at col 72; don’t use wrapmargin in vim

Mention spell, ispell file (aspell check file), fmt. Mention PuTTY keypad mappings, if you have problems using the keypad or other special keys. Mention vi -x (or “:X”) to encrypt, “:set key=” to remove.

Lecture 4 — Shell Features, I18N, Working with Processes

Wildcards a.k.a. globbing, filename expansion and completion: “*” (match anything, including nothing at all), “?” (match any one character), character class or range or list: [list], [!list], []xyz], [!]xyz-]. (Some commands (e.g. rsync) and shells (e.g., zsh, bash4 with shopt -s globstar) use “**” to match slashes too, so that “**/*.txt” would find all files ending in .txt in this and all subdirectories, recursively.)

The term “glob” comes from a Unix v1 command of that name, apparently short for “global”, that expanded wildcards. Later Unix systems had this functionality built into the shell. See man page for glob(7).

If the locale is POSIX, you can use ranges: [0-9], [a-z], [A-Z]. Note “[z-a]” and “[a-Z]” are not legal, but “[a-z0-9]” is fine.

Characters on a command line can be quoted, to force the shell to treat them as plain, non-special characters. These include the glob characters and others such as the newline or semicolon (“;”) that separate commands.

Normal ranges don’t always work! (Bash example: [!A-Z]* with LC_COLLATE=en_US). POSIX doesn’t actually require support for these, due to support of non-ASCII text. These are only guaranteed to work if the locale is set to POSIX (a.k.a. “C”). (For more info, see locales below.)

POSIX Character Classes

For non-POSIX locales, you should use POSIX pre-defined character classes such as “[:digit:]” instead of ranges. (Discussed with regular expressions, below.) So instead of “[!A-Z]” use “[![:upper:]]”. Other examples: [[:digit:][:lower:]$_]. Not all shells support POSIX character classes (but then ranges should work regardless of locale).
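A short sketch of character classes in filename patterns follows. It assumes a shell that supports them (bash and dash do), that mktemp -d is available, and it sets the locale to C so the sort order of the results is predictable. The filenames are made up for the demonstration.

```shell
LC_ALL=C; export LC_ALL

globdir=$(mktemp -d) || exit 1
cd "$globdir" || exit 1
touch Alpha beta 9lives _tmp

upper=$(echo [[:upper:]]*)              # names starting with an uppercase letter
not_upper=$(echo [![:upper:]]*)         # names starting with anything else
mixed=$(echo [[:digit:][:lower:]_]*)    # digit, lowercase letter, or underscore

printf '%s\n' "$upper" "$not_upper" "$mixed"
```

Here the second and third patterns happen to match the same three files, since every name that does not start with an uppercase letter starts with a digit, a lowercase letter, or an underscore.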

Locales: A locale is a definition of language (and encoding, e.g. UTF-8), time, currency, and other number formats, that vary by language and geographical region. Related formats are grouped into categories. *nix systems include a number of environment variables (one per category) you can use to pick these data formats, by specifying a locale for each.

The settings in a locale reflect a language’s and geographic region’s (i.e., country’s or territory’s) cultural rules for formatting data. A locale name looks like lang[_region][.encoding][@variant]. For example “en_US.utf8”. The encoding determines what bytes or byte sequences are valid; on *nix this is also known as a charmap or charset. The charmap also defines names for every valid character.

Only lang is required. “POSIX” (or “C”) locales are always defined but others may or may not be defined on any given system. A locale can also be an absolute pathname to a file produced by the localedef utility.

The POSIX categories and the environment variables for each are:

LC_CTYPE Character classification (letters, digits, ...) and case conversion.

LC_COLLATE Collation (sorting) order.

LC_MONETARY Monetary formatting.

LC_NUMERIC Numeric, non-monetary formatting.

LC_TIME Date and time formats (but not time zones).

LC_MESSAGES Formats of informative and diagnostic messages and interactive responses. (Related to NLSPATH.)

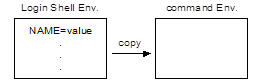

(Additional categories such as LC_ADDRESS or LC_PAPER may be available on some systems.) If some LC_* variable is not set the value of LANG is used to define its locale. If LC_ALL is set, that value over-rides any other LC_* and LANG settings.

Some systems set default locale settings in /etc/*/i18n (older) or /etc/locale.conf (newer), and ~/.i18n.

To portably set your locale, it is best to set the LC_ALL environment variable to C (or POSIX). Setting only (for example) LC_COLLATE has two problems: it is ineffective if LC_ALL is also set, and it has undefined behavior if LC_CTYPE (or LANG if LC_CTYPE is unset) is set to an incompatible value. For example, you get undefined behavior if LC_CTYPE is “ja_JP.PCK” and LC_COLLATE is “en_US.UTF-8”.

Most shell scripts probably should set LC_ALL to POSIX at the top of the script. (You may want to set TZ to UTC0 as well, especially for utilities that record a date in the current time zone, such as diff and tar.) Using the POSIX locale can improve performance (otherwise many utils will treat your text as Unicode and not ASCII), and gives consistent and reliable output from utils such as sort.
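A sketch of what the top of such a script might look like, followed by a sort whose result no longer depends on the user's own locale settings. The word list is made up for the example.

```shell
# Fix the locale and time zone for everything this script runs.
LC_ALL=POSIX; export LC_ALL
TZ=UTC0;      export TZ

# In the POSIX locale, sort compares byte values, so all uppercase
# ASCII letters come before all lowercase ones.
sorted=$(printf '%s\n' banana Apple cherry Banana apple | sort)
printf '%s\n' "$sorted"
```

In many other locales (en_US.UTF-8, for instance) the same input would typically come out with upper and lower case interleaved, which is why scripts that parse or compare sorted output usually pin the locale first.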

The standard utility iconv can be used to convert between (compatible) text encodings. Use iconv -l to list all available encodings (codesets) on your system. (The non-standard convmv command can be used to convert filenames to UTF-8 or another encoding.)

For example, suppose you have an ISO-8859-1 text file, and you want to grep it for some Unicode string. If your string is just ASCII there is no problem. Otherwise, you need something such as this:

iconv -f ISO-8859-1 -t UTF-8 file |grep 'whatéver'

Other than POSIX (“C”), there are no standard locales. Errors result if you set the locale to one that is not available on the current system. (The locale utility can generate a list for any system.) The various locales and charmaps are stored in an implementation-defined directory (or directories); for Linux, this is usually /usr/share/i18n/{locales,charmaps}.

Also, it was discovered in 3/2013 that POSIX locale does not require single-byte encodings (such as ASCII or ISO-8859-1). This was a surprise to the standards group, and work is underway to address that issue.

Gnu recode is non-standard, but may be more powerful and support more types of conversions than iconv.

Encoding of text is a problem since Unicode replaced ASCII: each character still has a number that defines it (a code point), but the range of numbers is 0 to over 100,000. The encoding is the representation of that number as one or more bytes in some sequence. Every text file is encoded, but unfortunately there is no way to determine exactly which encoding was used. If you guess incorrectly, the data will appear corrupted.

There is a non-standard utility encguess that may be able to guess the encoding used for a file, or you can try “file -kr --mime-encoding file”. If you have to guess, I suggest guessing UTF-8. I further suggest you only encode your files as UTF-8.

Linux (at least) has issues with Unicode normalization: it doesn’t handle combining characters. ICU is a popular FLOSS library and set of utilities for working with Unicode. On Linux, you can find and install a package for this (Fedora: “yum install icu”), and use the uconv utility to handle all your Unicode translation/normalization needs. For example:

$ printf 'Ste\u0301phane+Chazelas\x80\n' |

uconv -i -f utf-8 -t utf-8 -x Any-NFKC

Stéphane+Chazelas

(That might not look right unless your locale (LANG) setting is UTF-8 and your terminal emulator works correctly with UTF-8; PuTTY does.)

PHP 7 and newer support ICU:

$ php -r "echo IntlChar::charName('@');" # shows “COMMERCIAL AT”

The following example from the Fedora locale(1) man page compiles a custom locale from the ./wrk directory with the localedef(1) utility under the $HOME/.locale directory, then tests the result with the date(1) command, and then sets the environment variables LOCPATH and LANG in the shell profile file so that the custom locale will be used in the subsequent user sessions:

$ mkdir -p $HOME/.locale

$ I18NPATH=./wrk/ localedef -f UTF-8 -i fi_SE $HOME/.locale/fi_SE.UTF-8

$ LOCPATH=$HOME/.locale LC_ALL=fi_SE.UTF-8 date

$ echo "export LOCPATH=\$HOME/.locale" >> $HOME/.bashrc

$ echo "export LANG=fi_SE.UTF-8" >> $HOME/.bashrc

I/O Redirection

Most commands (and all filter commands) are not written to read directly from the keyboard. Instead, they are written to read from standard input (“stdin”) and send output to standard output (“stdout”). It is up to the shell to set these when it starts a new command. Where these are connected to is part of the environment (and is exported). By default, these are connected to the keyboard and screen (window). When using redirection characters, the shell will hook these up differently.

Use a pipe (“|”) when connecting the output of one command to the input of another. But what happens if instead of “cmd1|cmd2” you use “cmd1|file”?

It is possible to connect the input or output of a command to (or from) a file instead. In that case never use a pipe, use these instead: < (input), >, >> (append).

Discuss what happens when using > and the named file exists: clobber. Show set -o noclobber (“>|” to over-ride noclobber). Note that the shell processes redirections before running the command, so the clobber happens even if the command never produces any output.
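A minimal sketch of noclobber in action follows. It assumes the widely available (but non-standard) mktemp command for a scratch file; the variable names are made up for this example.

```shell
tmp=$(mktemp)            # hypothetical scratch file
echo original >"$tmp"

set -o noclobber         # same as: set -C
# With noclobber set, plain ">" refuses to overwrite an existing file.
if (echo replaced >"$tmp") 2>/dev/null; then
    first_try=overwritten
else
    first_try=refused
fi

echo forced >|"$tmp"     # ">|" overrides noclobber for this one redirection
set +o noclobber

result=$(cat "$tmp")
rm -f "$tmp"
echo "first attempt: $first_try; file now contains: $result"
```

The first attempt runs in a sub-shell only so that its error message can be discarded; the redirection fails in the same way without the parentheses.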

Discuss the problem with error messages and prompts: they should be seen on the screen and not vanish into a file or pipeline. So another output stream was added: standard error (“stderr”). A well-written command will use stderr for error messages and prompts. But you can redirect stderr as well. (Qu: why would you want to? Ans: you start a lengthy job and go out to lunch, or there are so many error messages they scroll off the screen, or the error messages aren’t important and you don’t wish to be bothered with them.)

Internally the various streams are numbered: 0=stdin, 1=stdout, and 2=stderr. (You can define others too.)

The redirection characters can be preceded with a number (unquoted) indicating which stream. The default is stdin (or zero) for “<” and stdout (or one) for “>”. To redirect stderr you must use “2>” or “2>>”.

The word following the redirection symbol is subject to various expansions. (Pathname expansion, a.k.a. wildcards, is only done if the result would be a single word.)

Redirections can be before, after, or in the middle of simple commands. But they must be on the same line as the terminator for complex commands such as command groups (“}”), if statements (“fi”), etc.

When redirection is mixed with pipelines, the pipeline redirection is processed first. So:

command1 >file | command2

will send all output of command1 to file and not through the pipe.
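To see this for yourself, the following sketch counts how many bytes actually arrive through the pipe. The scratch file (made with the non-standard mktemp) and the variable names are assumptions for the example.

```shell
tmp=$(mktemp)    # hypothetical scratch file

# stdout is first connected to the pipe, then ">" re-points it at the file,
# so wc receives no input at all.
piped_bytes=$(echo hello >"$tmp" | wc -c | tr -d ' ')
file_content=$(cat "$tmp")
rm -f "$tmp"

echo "bytes through the pipe: $piped_bytes; file contains: $file_content"
```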

(Demo: who -e > who.out, who -e 2>who.out, who 2>who.out)

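Discuss why “cmd >foo 2>foo” doesn’t work correctly (the file is opened twice and each stream keeps its own file offset, so output from one stream overwrites output from the other); use “cmd >foo 2>&1” instead.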
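A short sketch of the 2>&1 form: a command group writes one line to each output stream, and both lines end up in the same file. The scratch file comes from the non-standard mktemp, and the names are made up for the example.

```shell
tmp=$(mktemp)    # hypothetical scratch file

# A command group that writes one line to each output stream.
# Order matters: first point stdout at the file, then copy it to stderr.
{ echo "to stdout"; echo "to stderr" >&2; } >"$tmp" 2>&1

line_count=$(wc -l <"$tmp" | tr -d ' ')
rm -f "$tmp"
echo "lines captured: $line_count"
```

Writing the redirections the other way around (2>&1 >file) would make stderr a copy of the original stdout, and only stdout would go to the file.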

Redirection as described here also works on the Windows command line.

In a shell script, you may want to send output to stderr: echo msg >&2. For security applications, you can use /dev/tty (your console: keyboard and screen or window).

You can redirect to/from /dev/null too (which is a trash can; anything sent is tossed, and is a zero-length file if you try to read from it).

Using “;” as a pipeline terminator (that’s what newline is), you can enter multiple commands on a single line. In this case, the shell will run the first command, and when it finishes run the next.

You can also terminate a command with “&”. In this case, the shell runs the first command in the background and without waiting starts the next: cmd & cmd &.

Pipelines and redirections can cause otherwise built-in commands to run in sub-shells. Not all shells do this, but they can. This can lead to surprising results in cases such as read var when you expect var to be set in the current environment.
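One portable way to avoid the sub-shell surprise with read is to redirect its input from a here document instead of a pipeline. This is only a sketch; the variable name is arbitrary.

```shell
# In shells that run each pipeline element in a sub-shell, var would
# still be unset after this command:
#     echo hello | read var

# Redirecting from a here document keeps read in the current shell.
read -r var <<EOF
hello
EOF
echo "var=$var"
```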

Why use redirections when you could pass filenames to commands? For example, consider “awk 'script' infile >outfile” versus “awk 'script' <infile >outfile”:

· Consistent error messages when filename can’t be opened.

· Command is not even started if infile can’t be opened.

· No clobbering of outfile if infile can’t be opened.

· Filenames are less restricted. (For example, filenames containing “=” would confuse awk, as would a filename of “-”.)

· Some commands alter their output format when using input redirection as opposed to filenames passed as arguments (e.g., the wc command).

(Even when using redirection, the names of files could be passed as additional command line arguments, or (even safer) as environment variables.)

Of course, there are disadvantages as well (at least with awk):

· FILENAME variable is not populated.

· ARGV[] doesn’t contain the file names.

· Not extensible to running the script on multiple files.

· Not extensible to running the script on a file plus a pipe.

· Not extensible to some scripts using getline.

· Not extensible to re-reading the input file.
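The point above about commands changing their output format is easy to check with wc. The sketch below assumes the non-standard mktemp for a scratch file; the variable names are made up.

```shell
tmp=$(mktemp)    # hypothetical scratch file
printf 'one\ntwo\nthree\n' >"$tmp"

with_arg=$(wc -l "$tmp")      # output includes the file name
with_redir=$(wc -l <"$tmp")   # output is the count only (maybe padded)

rm -f "$tmp"
echo "as argument:  $with_arg"
echo "as redirect:  $with_redir"
```

With redirection, wc never learns the file name, so it can only print the count.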

Here Documents

Here documents (also called here docs) allow a type of input redirection from some following text. This is often used to embed a short document (such as help text) within a shell script. Using a here doc is often easier and simpler than a series of echo or printf statements. It can also be used to create shell archives (shar files), embed an FTP (or other) script that needs to be fed to some command (such as ftp), and a few other uses. Here docs are so useful that many other languages use them, such as Perl and Windows PowerShell.

The syntax is simple. Use <<word on the command line. Then all following lines are collected by the shell (and treated as one very big word), up to a line that contains only the word. (No leading or trailing spaces or tabs allowed!) The collected text is fed into the command’s standard input. Here’s an example:

cat <<EOF

This is a here document. All lines following

the command, up to a line containing the word

(in this case, "EOF") are collected and sent

into the command's standard input.

EOF

The word used as a delimiter can be anything; EOF or END work well and are common, as is “!”. If quoted, the delimiter word can contain white space, as shown here:

cat <<'   END' # starts with 3 spaces

Hello

   END

Note the delimiter word can appear inside the here doc, just not on a line by itself. It is possible to have multiple here docs on a single command, but this is rarely useful. The command’s input is redirected from each here doc in order.

Using <<-word (a leading dash) will strip leading tabs (but not spaces). (You can enter a tab by typing control-v then TAB.) This feature was intended to allow you to indent here doc bodies to look nicer in a shell script, but problems with tabs (for example, automatic conversion to spaces) mean you’re better off not using this feature. Instead, you can replace cat with sed and a script to remove leading spaces:

sed 's/^[[:space:]]*//' <<EOF

... (note leading spaces/tabs)

EOF

A pure shell solution is also possible:

while read -r line; do

printf "%s\n" "$line"

done <<EOF

...

EOF

The body of a here doc is expanded using the shell’s parameter expansions, command substitutions, and arithmetic expansions. A backslash inside of a here doc behaves as if it were inside of double quotes; single and double quotes are just regular characters (they don’t quote anything) in a here doc. However, inside of an inner command ($(...)) or parameter expansion (${...}), a double quote keeps its normal shell meaning. Also, “\"” gives those two characters. For example:

foo=bar

cat <<EOF

\$foo = "$foo",

but * (or another wild-card) is just a '*'.

Today is $(date "+%A").

EOF

Quoting of any part of word turns off these substitutions on the here document body. The type of quoting doesn’t matter: 'X', "X", or \X. For example:

foo=bar

cat <<\EOF

\$foo = "$foo",

but * (or another wild-card) is just a '*'.

Today is $(date "+%A").

EOF
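The difference between the two forms can be checked side by side by capturing each result in a variable. This is a sketch only; the variable names expanded and literal are made up for the example.

```shell
foo=bar

# Unquoted delimiter: the body is expanded before cat sees it.
expanded=$(cat <<EOF
value is $foo
EOF
)

# Quoted delimiter: the body is passed through literally.
literal=$(cat <<'EOF'
value is $foo
EOF
)

echo "$expanded"    # value is bar
echo "$literal"     # value is $foo
```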

See also Here-Document description in the POSIX/SUS standard.

(Demo by adding -h (help) arg to nuser command.)
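A help option of that kind might look like the sketch below. The usage text and the option shown are hypothetical, since the real nuser script is not reproduced here.

```shell
# Hypothetical usage function for a script named "nuser".
usage() {
    cat <<EOF
Usage: nuser [-h] username
  -h    show this help text and exit
EOF
}

usage
```

In a real script, the function would typically be called from the option-parsing code when -h is seen, followed by an exit.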

Another interesting use of a here document is to run a bunch of commands remotely:

ssh remoteHost << EOF

cmd1

cmd2

...

EOF

(If you quote EOF, you can use environment variables (and wildcards) in the here document that won’t be expanded locally.)

Modern Korn shell supports another redirection operator, which permits filter commands to replace a file with the output of the command, so-called “in-place” editing. With “>;”, you can use:

sed -e s/foo/bar/ file >; file

to do in place editing with sed. The >; operator generates the output in a temporary file and moves the file to the original file only if the command terminates with a 0 exit status.

There is also a “<<<word” in Bash and some other shells, a here-string. Not (yet) part of POSIX. The word becomes the input to the command, after expansions. For example, “grep foo <<<"$BAR"” will search the contents of the variable $BAR for foo.

Another trick for here documents is to comment out a block of statements in a script, like so:

:||: << '#Comment End'

... commented-out stuff ...

#Comment End

(The seemingly unnecessary “||:” prevents the shell from even trying to create a here document.) You can simply comment-out the first line to re-enable the block. Of course, this technique is only useful for large scripts.

Processes

Qu: What is a process? Ans: A running program, in RAM only, own environment. Foreground (the process with the focus) vs. background, start in background with &. Under Unix one process (the parent) creates another (the child) using a syscall named fork (hence the phrase forking a process) to create a duplicate process (differs only in the PID and PPID; some other settings and data are not copied into the new process from the original, e.g. non-exported environment settings).

Modern systems use a process as a container of “threads”. All threads of a process share its PID, so each thread also has a TID that is unique. Use “ps -fL” to see the TIDs (in the column named LWP for historical reasons) and the count of threads in that process (the NLWP column).

The fork is followed by an if statement to check the PID to see if this is the parent or child process (remember both will do this). The child then uses the exec operation (actually there are many slightly different exec syscalls) to replace the code with new code, from some other executable program on the disk. Most of the environment and data of the process is unchanged by exec.

ps [start with no options, then describe the kernel’s process table (in RAM only)]. Then -f, then l, then -ef. (ATT -ef, BSD axl, -w = fuller cmd line, see my pps alias.) Discuss PID, PPID, time (CPU, not real time), important/standard processes (init (no PPID, PID=1), lpd, xinetd, sendmail, ...). Note in ps output, [cmd] means cmd is swapped to disk on some systems, and a kernel process on Linux and others. (ps -fC command shows all processes named command.)

Show pstree (ptree on Solaris), top command, w command. Other p-commands include pgrep and pkill.

Due to a quirk in POSIX, all standard utilities must be usable with exec. This means you’ll find useless versions of shell built-in utilities on some systems, such as /usr/bin/cd or /bin/read (which have no effect unless run from the current shell process). Personally, I would have been happier with an error message in these cases!

Processes are organized into process groups. When you use the term job, you are talking about a process group. There are foreground/background process groups, not processes. When you run a command line from the shell prompt, or a pipeline, the command/pipeline is put into a new process group.

Process groups have a process group leader, typically the first process added to the group. (When a new process is created via fork, it inherits the PGID of its parent.) All processes keep track of their process group ID (PGID), which is generally the PID of the process group leader. (ps has options to show the PGID.)

To facilitate interactive job control, POSIX has a concept of sessions. Sessions are collections of process groups connected to the same controlling terminal. The TTY shown in ps output is the controlling terminal for the session. Each session may have at most one controlling terminal associated with it, and a controlling terminal is associated with exactly one session. However, not all process groups are in any session, and thus don’t have a controlling terminal. (Shows as a “?” in the ps output.) Linux has setsid program args to run some program in a separate session.

Certain input sequences (control-something) from the controlling terminal cause signals to be sent to all processes in the foreground process group associated with that controlling terminal.

It is easy to send a signal to all processes in a given group, or all processes in a given session, at once. (The kill utility syntax for this will be discussed later.)

You can use job control to manage jobs in an interactive session: review fg, bg, jobs, ^Z, and the extensions to kill (%jobnum).

Process Priority

Different systems use different schemes, but common to all is that a higher number means a worse priority (except for modern Solaris), even low priority jobs get some time-slices, and priority worsens over time. SysV priority scheme: 60 + (CPU time/2) + (nice -20). Note it is threads (in Linux, tasks) that are scheduled, not processes.

On modern systems the priority reported by ps -l is only a rough estimate of the true priority. The reason is that modern systems use sophisticated scheduler algorithms that can’t be reduced to a single priority number. For example, on Linux (and other OSes) there are several schedulers you can use. For the default one, time-slices and traditional priorities are replaced by assigning a scheduler class and some amount of time quanta (measured in nanoseconds, not milliseconds). When the quanta is used up, the scheduler reassesses the process and changes its class and also assigns more (or less) time than initially. Then while running, processes have a dynamic priority that can increase or decrease temporarily under certain conditions.

nice

The nice value ranges from -20 to +19 (or 0 to 39). The nice command works similarly to nohup; a shell is started that runs the command, and the priority is inherited. (Often use nice nohup command &.) Only root can use negative values and thus improve priority. The default increment used by nice is 10. Can change the priority of existing processes using renice val -p PID.

Linux also has an ionice command to reduce disk scheduling priority. See also chrt and taskset (all non-standard).

Zombies

A terminated process is called defunct or a zombie. It is usually quickly reaped by its parent via the wait(2) (or similar) system call, which removes the process table entry. If the parent process terminates then any children (live, or defunct but not reaped) are adopted by init, which on all modern systems will reap them immediately or as soon as the live processes terminate. Occasionally a zombie won’t get reaped for a very long time (usually the result of poorly written software) and will appear in ps output.
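From a shell script, the reaping step corresponds to the wait built-in. The following sketch starts a short-lived child in the background and then collects its exit status; the variable names are arbitrary.

```shell
# Start a child in the background; it terminates at once with status 3.
(exit 3) &
child=$!

# wait collects the exit status, after which the child's process table
# entry can be discarded (so no zombie is left behind).
status=0
wait "$child" || status=$?
echo "child $child exited with status $status"
```

Between the child's exit and the call to wait, the child is a zombie, although here that interval is far too short to catch with ps.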

time

You can time a command with the shell’s built-in time command. There is a POSIX standard format for the output, which you can usually force with an option. There is also a /usr/bin/time command (a Gnu version), with a “-p” option for POSIX output, and a “-v” option for verbose output (show).

The times reported are real or elapsed (process end time minus start time), sys (time spent running kernel code, i.e. system calls), and user (time spent running user code).

On a system running more than one process and that has several CPUs, the real time reported has little correlation with the number of CPU cycles that are needed to execute the corresponding code. You have to consider the time used by other processes, the time waiting for resources, and the fact that several processors might run concurrently to perform the task. All you are guaranteed is that:

real >= (user + sys) / num_cpus

Most OSes will try to run one process/thread on one CPU, to take advantage of that CPU’s cache. On Linux you can control this with the taskset command.

Lecture 5 — Regular Expressions and some filter commands

Regular Expressions

Regular expressions (or REs) are a way to specify concisely a group of text strings. The Unix editor ed was about the first (Unix) program to provide REs. Many later commands used this form of RE and their man pages would say “see also ed(1)”. Over time, folks wanted more expressive REs, and new features were added. The ed REs became known as basic REs or BREs, and the others became known as extended REs or EREs.

Suppose you needed to find a specific IPv4 address in the files under /etc? This is easy to do; you just specify the IP address as a string and do a search. But, what if you didn’t know in advance which IP address you were looking for, only that you wanted to see all IP addresses in those files?

Even if you could, you wouldn’t want to specify every possible IP address to some searching tool! You need a way to specify all IP addresses in a compact form. That is, you want to tell your searching tool to show anything that matches number.number.number.number.

This is the sort of task we use REs for. You can specify a pattern (RE) for phone numbers, dates, credit-card numbers, email addresses, URLs, and so on.

The Good Enough Principle

With REs the concept of “good enough” applies. Consider the pattern used above for IP addresses. It will match any valid IP address, but also strings that look like 7654321.300.0.777 or 5.3.8.12.9.6 (possibly an SNMP OID).

To match only valid IPv4 addresses is possible, but rarely worth the effort. It is unlikely your search of /etc files will find such strings, and if a few turn up you can easily eye-ball them to determine if they are valid IP addresses.

It is possible to craft a more precise RE but in real life, you only need an RE good enough for your purpose at hand. If a few extra matches are caught you can usually deal with them. (Of course, if making global search and replace commands, you will need to be more precise!)
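As a sketch of the good-enough pattern, here is number.number.number.number written as an ERE and tried against a few made-up lines. The sample data and variable names are invented for this example.

```shell
# ERE for number.number.number.number; good enough, not strict.
ip_re='[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+'

# Sample input (made up for this sketch): one valid address, one
# impossible address, and one line with no address at all.
matches=$(printf '%s\n' \
    'server 192.168.1.10' \
    'bogus 7654321.300.0.777' \
    'no address here' | grep -Ec "$ip_re")

echo "matching lines: $matches"
```

Both of the first two lines match, which is exactly the trade-off described above. To search real files, the same pattern could be used as grep -E "$ip_re" /etc/*.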

An RE is a pattern, or template, against which strings can be matched. Either strings match the pattern or they don’t. If they do, parts of the matching string can be saved in named variables (sometimes called registers), which can be used later to either match more text, or to transform the matching string.

Pattern matching for text turns out to be one of the most useful and common operations to perform on files. Over the years, a large number of tools have been created that use REs, including all text editors, grep, sed, sort, and others. The shell wildcards can be considered a type of RE.

While the idea of REs is standard, different tools may use slightly different syntax. Some of these tools also contain extensions that may be useful. Perl’s REs are about the most complex and useful dialect, and are sometimes referred to as PREs or PCREs (Perl compatible Regular Expressions). (See man pages for perlre(1), also perlrequick(1), perlretut(1), pcrepattern(3), and pcresyntax(3).) Java also supports a rich syntax for REs (Java REs).

Sadly, not all utilities that provided REs used the same symbols. Eventually POSIX stepped in and standardized REs, mostly compatible with the original ed REs but with many additions. (POSIX uses the acronyms BRE and ERE, but also uses the terms “obsolete” REs and “modern” REs). Some older tools changed to use the new syntax. See regex(7) for details.

Most RE dialects work this way: some text (usually one line) is read. Next, the RE is matched against it. In a programming environment such as Perl or an editor (sed), if the RE matches then some additional steps (such as modification of the line) may be done. With a tool such as grep, a matching line is just printed. Finally, the cycle repeats.

Top-down explanation (from regex(7) man page):

An RE is one or more branches separated with “|” and matches text if any of the branches match the text. A branch is one or more pieces concatenated together, and matches if the 1st piece matches, then the next matches from the end of the first match, until all pieces have matched. A piece is an atom optionally followed by a modifier: “*”, “+”, “?”, or a bound. An atom is a single character RE or “(RE)”.

Show Regular Expression Web resource. The following shows POSIX REs, both BREs and EREs. The syntax that is the same for both is:

any char matches that char.

. (a dot) matches (almost) any one character. It may or may not match EOL, depending on options set. Also, it won’t match invalid multibyte sequences. (So, dot works best in POSIX locale.)

\char matches char literally if char is a meta-char (such as “.”). Never end a RE with a single “\”.

[list] called a character class, matches any one character in the list. Can use a range such as a-z, if LC_COLLATE=C.

[^list] any character not in list (shell wildcard (globbing) uses [!list]).

To include a literal ] in the list, make it the first character (following a possible ^). To include a literal -, make it the first or last character. Note other metacharacters except \ lose their meaning in the list. (So you don’t need a backslash for a dot or open bracket, “[].[-]”.) List can include one or more predefined lists, or character classes. In some RE dialects, a backslash+character (e.g., “\d”) denotes a predefined list (in this case, all digits).

In POSIX (and Bash), character classes are: [:name:], where name is one of: alnum, digit, punct, alpha, graph (same as print, except space), space (any white-space), blank (space or tab only), lower, upper, cntrl, print (any printable char), or xdigit.

Concatenated REs Match a string of text that matches each RE in turn.

^RE an RE anchored to the beginning of the whole string.

RE$ an RE anchored to the end of the whole string.

RE* zero or more of RE.

The following two forms are for EREs; use “\{” and “\}” for BREs:

RE{min,max} max (but not the comma) can be omitted for infinity.

RE{count} Exactly count of RE.

Both EREs and BREs support grouping with parentheses (with backslashes for BREs). However, only BREs support back-references (see below) that can be used in the matching text, saving the matched text in a numbered register:

(RE) A grouped RE, matches RE. Each group is remembered in a numbered register, counting open parentheses. For example (BRE): “\([0-9]*\)\1” matches “123123”.
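The back-reference example can be tried with grep. Because the group may also match the empty string, this sketch adds -x so that the whole line has to match; the sample input is made up.

```shell
# -x requires the whole line to match the BRE.
repeated=$(printf '%s\n' 123123 12345 abab | grep -x '\([0-9]*\)\1')
echo "$repeated"
```

Only 123123 is printed: 12345 cannot be split into two equal halves, and abab contains no digits.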

Finally, only EREs support the following syntax:

RE+ one or more of RE. (Same as BRE “RE RE*”.)

RE? zero or one of RE. (Same as BRE “RE\{0,1\}”.)

RE1|RE2 either match RE1 or match RE2. (This has no BRE equivalent.)

Word Boundaries POSIX doesn’t have word delimiters, which match the null string at the beginning and/or end of a word. But most RE dialects use “\<” and “\>”, or Perl’s “\b”. (Gnu utilities mostly use “\b”; Gnu awk uses “\y”.)

Escapes Special (or meta-) characters lose their meaning if escaped, that is preceded with a backslash (“\”) character. Some (in POSIX, all) others lose their special meaning when used in a character class, such as “*” and “.”. In some dialects, meta-characters only have special meaning when escaped! (Mostly true for BREs.)

In POSIX EREs, a “\” followed by one of the characters “^.[$()|*+?{\” matches that character taken as an ordinary character. A “\” followed by any other character matches that character taken as an ordinary character, as if the “\” had not been present (but not all dialects work this way, so don’t try it!).

Some characters used to express REs are only special if they appear in a specific context. For instance, the “^” is special only if it is the first character of some BRE, the “$” only if the last. The “*” is not special if the first character in an RE. A “{” followed by a character other than a digit is not the beginning of a bound. A backslash is always special, so it is illegal to end an RE with one.

POSIX and other RE dialects have different rules for when special characters need to be escaped. For example, a “^” is only special as the first character in a BRE, and doesn’t need escaping otherwise. The same holds for a “$”. But you must always escape these characters in EREs:

$ echo 'a^b' | grep 'a^b' # matches; since BRE

$ echo 'a^b' | grep -E 'a^b' # doesn’t match; since ERE

Precedence Rules

1. Repetition (“*” and “+”) takes precedence over concatenation, which in turn takes precedence over alternation (“|”). A whole sub-expression may be enclosed in parentheses to override these precedence rules.

2. In the event that a given RE could match more than one substring of a given string, the RE matches the one starting earliest in the string.

3. If an RE could match more than one substring starting at the same point, it matches the longest. (This is often called greedy matching.) Sub-expressions also match the longest possible substrings, subject to the constraint that the whole match be as long as possible. (Perl supports both greedy and reluctant modes!)

There is also a possessive mode, which is like greedy but will never backtrack even if the match fails: “.*X” with the string “WXYX” will match “WXYX” (greedy), “WX” (reluctant), and fail to match (possessive).

Update: The next version of POSIX/SUS (Issue 8) will include some enhancements to EREs:

The +, *, ?, and {min,max}, as noted above (rule 3), match the longest possible match (greedy). New operators to match the shortest possible match (including a null match) are being added (reluctant). The new ones are the same as the old ones with a “?” appended: +?, *?, ??, and {min,max}?.

In addition, a new flag will be available: REG_MINIMAL will mean switch rule 3 with shortest match (that is, switch to generous mode) for the regular operators. With this flag set, using the new question-mark forms turn back on greedy (longest) matching.

The greedy match of the beginning part of an RE may prevent the following part from matching anything. In this event, backtracking occurs, and a shorter match is tried for the first part. For example, the 5 parts of the RE xx*xx*x (matched against a long string of ‘x’es) will end up matching this as x|xxxxx|x||x.

Back reference (BREs or some non-POSIX dialects only) A ‘\’ followed by a non-zero decimal digit d matches the same sequence of characters matched by the dth parenthesized sub-expression (numbering sub-expressions by the positions of their opening parentheses, left to right). For example: “([bc])\1” matches “bb” or “cc” but not “bc”. For example: (((ab)c(de)f)) has \1=abcdef, \2=abcdef, \3=ab, \4=de

$ echo '12345' |sed 's/\([0-9]*\)\([0-9]*\)/|\1|\2|/'

|12345||

Example Regular Expressions

When using Gnu grep to view match results, note that all possible matches will be highlighted (in red), not just the one match that should be selected according to the rules of precedence. Can use -o option (with head -1), or use this instead: sed -e 's/RE/|&|/'.

abcdef Matches “abcdef”.

a*b Matches zero or more “a”s followed by a single “b”. For example, “b” or “aaaaab”.

a?b (ERE) Matches “b” or “ab”.

a+b+ (ERE) Matches one or more “a”s followed by one or more “b”s: “ab” is the shortest possible match; others are “aaaab” or “abbbbb” or “aaaaaabbbbbbb”.

.* and .+ These two both match all the characters in a string; however, the first matches every string (including the empty string), while the second (ERE) matches only strings containing at least one character.

^main.*(.*) (BRE) This matches a string starting with “main” followed by an opening and closing parenthesis with optional stuff between.

^# This matches a string beginning with “#”.

\\$ This matches a string ending with a single backslash. The regex contains two backslashes for escaping.

\$ This matches a single dollar sign, because it is escaped.

[a-zA-Z0-9] In the C locale, this matches any ASCII letters or digits.

[^ tab]+ (ERE) (Here tab stands for a single tab character.) This matches a string of one or more characters, none of which is a space or a tab. Usually this means a word.

^\(.*\)\n\1$ (BRE) This matches a string consisting of two equal substrings separated by a newline.

.{9}A$ (ERE) This matches any nine characters followed by an “A” at the end of the line.

^.{15}A (ERE) This matches the start of a string that contains 16 characters, the last of which is an “A”.

^$ Matches blank (empty) lines.

\(.*\)\1 (BRE) Matches any string that repeats, e.g., “abcabc” or “byebye”.
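To see which single match is selected for one of these patterns, the sed technique mentioned at the start of this list can be used. A small sketch with made-up input:

```shell
# Mark the one match sed selects: leftmost, then longest.
marked=$(echo 'xaaabaab' | sed -e 's/a*b/|&|/')
echo "$marked"
```

The output is x|aaab|aab: the match cannot start at the x, so it starts at the first “a” and extends as far as it can.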

Example: Check if some ASCII file contains any control (non-printable) characters:

grep -q '[[:cntrl:]]' file && echo "yes" || echo "no"

(Note that tabs and carriage returns are control characters.)

Display any non-ASCII characters in a (text) file:

$ LC_ALL=C grep '[^[:print:]]' file

(Curly quotes match. Note you must use POSIX (=C) locale, or curly quote marks will be assumed to be printable text characters. Using sed to replace these is left as an exercise to the reader.)

A related question is to have a text file encoded in UTF-8, but with some invalid byte sequences (those that are not legal UTF-8). There is no easy answer for this, but Geoff Clare posted this answer in comp.unix.shell on 6/30/2016 “grepping for invalid UTF-8 characters”:

iconv -cs -f utf-8 -t utf-8 file \

| LC_ALL=C diff - file > file.diff

Example: Print a Unix configuration file, skipping comment and blank lines:

grep -Ev '^$|^#' file

or:

grep -Ev '^($|#)' file

A simple way to print all non-blank lines is:

grep . file

To print all lines longer than (say) 80 characters:

grep -E -n '.{81}' file

Determine if some argument to a shell script is an integer (number):

This is an interesting problem because it comes up a lot. Assume you need to allow whole numbers with an optional leading plus or minus sign. Also assume you don’t need to support weird forms of integers such as 1e6 or 12. or 1,000,000 or 0x1A. Also assume you don’t care if -0 or +0 is considered a valid integer (you can modify the code if you do care).

To just test that ARG is all-digits is simpler (an empty string is not valid):

case ${1:-x} in *[!0-9]*) false;; esac

There are a number of approaches for this but the most portable (and that doesn’t require a separate command or pipeline) is:

case $ARG in

"" | *[!0-9+-]* | ?*[-+]* | [-+]) echo no;;

*) echo yes;;

esac

(Case is discussed later.) You can use expr’s BRE matching operator:

LC_ALL=C \

expr X"$ARG" : 'X[+-]\{0,1\}[0-9]\{1,\}$' >/dev/null \

&& echo yes || echo no

Or this grep -E version:

printf '%s\n' "$ARG" | \

LC_ALL=C grep -Exqe '(-|\+)?[0-9]+' \

&& echo yes || echo no

or this awk version:

LC_ALL=C awk '

BEGIN { exit !(ARGV[1] ~ /^[-+]?[0-9]+$/) }' "$ARG" \

&& echo yes || echo no

Find only legal IPv4 addresses (“num.num.num.num”, where “num” is at least one digit, at most three digits including optional leading zeros, and in the range of 0..255):

Sounds like a great project idea to me!

Newlines and regular expressions don’t get along well. POSIX doesn’t support them from most standard shell utilities. C programs can use “multiline” matching; also some non-POSIX utilities (such as Perl and Java) support this, one way or another. Using awk:

$ echo 'ABC

DEF' | awk -v RS='' '/C\nD/'

ABC

DEF

Setting “RS” to the empty string puts awk into multiline mode (blank lines separate records). Also, awk EREs accept some extensions such as “\n” to mean a newline.
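As a further sketch of awk’s multiline mode, the following treats each blank-line-separated block as one record and each line within it as one field. The sample records are invented for illustration:

```shell
# Two made-up records, separated by a blank line.
text=$(printf '%s\n' 'name: a' 'size: 1' '' 'name: b' 'size: 2')

# With RS set to the empty string, NR counts blocks rather than lines.
count=$(printf '%s\n' "$text" | awk -v RS='' 'END { print NR }')

# Using newline as the field separator makes each line a field.
first=$(printf '%s\n' "$text" | awk -v RS='' -F '\n' 'NR == 1 { print $2 }')

echo "records: $count, second line of first record: $first"
```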

Email addresses as defined by RFC-822 and the newer RFC-5322 standards were not designed to be regexp-friendly. In some cases, only modern (non-POSIX) regular expression languages can handle them. Compare these: ex-parrot.com and stackoverflow.com. Keeping in mind the good-enough principle, here’s the much shorter one I use to validate the email addresses I use in my RSS feeds:

([a-zA-Z0-9_\-])([a-zA-Z0-9_\-\.]*)@(\[((25[0-5]|2[0-4][0-9]|1[0-9][0-9]|[1-9][0-9]|[0-9])\.){3}|((([a-zA-Z0-9\-]+)\.)+))([a-zA-Z]{2,}|(25[0-5]|2[0-4][0-9]|1[0-9][0-9]|[1-9][0-9]|[0-9])\])( *\([^\)]*\) *)*

Security Concerns with REs

Be aware that mixing untrusted data into a regular expression string has a security concern. Malicious (or just bad) users can slip special characters into their text, causing your RE to behave in unexpected and/or undesirable ways. A related concern is that Unicode text permits multiple representations of the same text, so a RE may or may not match a string depending on how it was encoded.

The secure solution is to first normalize any data (text or numbers) into a standard representation, then sanitize it by removing any illegal bytes (or any characters that have special meaning in a regular expression). (Even safer is to just check for them, and reject the data if any are present). You can also try to encode the data to make it safe (e.g., URL or percent encoding). Then validate the data (make sure it is legal for the intended use, for example as a URL, a file name, a user ID, a date, etc.)
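A minimal sketch of the difference, assuming the untrusted text is meant to be matched literally. Using grep -F avoids regular expression interpretation altogether; when the text must be embedded in a larger BRE, escaping the special characters first is one option. The function name escape_bre and the sample data are assumptions made for this example:

```shell
# escape_bre STRING: backslash-escape characters that are special in a BRE.
# (Hypothetical helper; covers ] [ \ . * ^ $ and the / delimiter.)
escape_bre() {
    printf '%s\n' "$1" | sed 's|[][\.*^$/]|\\&|g'
}

untrusted='a.c'                       # pretend this came from a user
lines=$(printf '%s\n' 'abc' 'a.c')

# Used directly as a regular expression, the dot matches any character.
as_regex=$(printf '%s\n' "$lines" | grep -c -e "$untrusted")

# As a fixed string, or after escaping, only the literal text matches.
as_literal=$(printf '%s\n' "$lines" | grep -c -F -e "$untrusted")
as_escaped=$(printf '%s\n' "$lines" | grep -c -e "$(escape_bre "$untrusted")")

echo "regex: $as_regex, literal: $as_literal, escaped: $as_escaped"
```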

For Unicode text, you can use the uconv utility from ICU. Normalization is achieved through the transliteration option (“-x”):

$ uconv -x any-nfd <<<ä | xxd

00000000 61 cc 88 0a a...

00000004

$ uconv -x any-nfc <<<ä | xxd

00000000 c3 a4 0a ...

00000003

On Debian, Ubuntu and other derivatives, uconv is in the libicu-dev package. On Fedora, Red Hat and other derivatives, and in BSD ports, it’s in the icu package.

Python has the unicodedata module in its standard library, which translates Unicode representations through the unicodedata.normalize() function:

$ python3 -c 'import unicodedata

print(unicodedata.normalize("NFC", "ääääää"))'

ääääää

“NFC” is the best form for general text, since it is more compatible with strings converted from legacy encodings. “NFKC” is the preferred form for identifiers, especially where there are security concerns.

(If you’re thinking this is a big hassle, you’re right. But, not doing this is the #1 cause of security breaches such as SQL injection attacks. So, learn to normalize, sanitize, and validate all data when it crosses a trust boundary into your code, and encode the data when it leaves your code.)

Lecture 6 — Common Filter Commands

Review: Filter commands process input (usually) a line at a time. They read from stdin (unless files are listed on the command line) and send the processed output to stdout. A “-” for a filename (usually) indicates to read/write stdin/stdout.

sed 'script' file ...

Sed is the stream editor. It doesn’t change files; it reads input a line at a time (either from stdin, or from listed files, one after the next), into a buffer known as the pattern space, and removes the trailing newline. Then it applies all the sed commands in the script, in order, for each command whose address matches the current line. (Multiple scripts can be specified, using -e.) Finally, the (modified) pattern space is written to stdout, plus a newline if one was removed earlier. (The -n option suppresses this default output.)
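The effect of the default output at the end of each cycle can be seen with the p command, as in this small sketch (the input lines are arbitrary):

```shell
input=$(printf '%s\n' one two)

# Without -n, each line is printed by p and again by the default output.
doubled=$(printf '%s\n' "$input" | sed 'p')

# With -n, only the explicit p produces output.
once=$(printf '%s\n' "$input" | sed -n 'p')

printf '%s\n' "$doubled" '--' "$once"
```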

When you need to change the text in lines, possibly from a specific range of lines, no tool is as useful as sed. Sed also supports a wide range of operations, but these are less often used. (All will be explained below, as you may need to read a sed script someday.)

Quotes are used to protect the script from shell expansions. Sed also maintains a clipboard, called the hold space.

If the script contains more than one sed command, separate them with “;”. You can use multiple scripts too:

sed -e 'script' -e 'script' ... files...

You can put the script in a file, and use sed -f script-file files...
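The three ways of supplying several commands should give the same result, as this sketch suggests (the script file is a temporary file created just for the demonstration):

```shell
# A temporary script file holding one sed command per line.
script=$(mktemp)
printf '%s\n' 's/a/A/' 's/b/B/' > "$script"

r1=$(echo 'abc' | sed 's/a/A/;s/b/B/')          # one script, ";" separator
r2=$(echo 'abc' | sed -e 's/a/A/' -e 's/b/B/')  # two -e scripts
r3=$(echo 'abc' | sed -f "$script")             # script read from a file
rm -f "$script"

echo "$r1 $r2 $r3"
```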

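Modern (2013) sed has an -i option to edit a file in place. With Gnu sed, a backup copy of the original is kept only if you supply a suffix (for example, -i.bak); BSD sed requires the suffix argument, which may be empty. (See also the non-standard -c option.)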

The current (2012) POSIX solution to editing files “in place” is to use a file editor. The ed editor can be scripted and has a similar (but simpler) syntax to sed. It also supports extended addressing modes, for example “.,.+4” to mean the current line and the following four lines.

We won’t cover ed in this course, but after learning sed, the man page should be sufficient. However, here is an example of editing a file in place, saving the current version as file.bak:

printf '%s\n' 'w file.bak' ',s/foo/bar/g' \

'another ed command' ... \

w | ed -s file

Using modern sed and “-i” is probably simpler; it is marked for inclusion in the next issue of POSIX (along with EREs).
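Where neither ed nor sed -i can be counted on, a common fallback (not one of the methods described above) is to copy the file to a backup and then run sed from the backup into the original. This is only a sketch; the file here is a temporary one created for the demonstration:

```shell
# Create a throw-away file to edit.
file=$(mktemp)
printf '%s\n' 'foo one' 'two foo foo' > "$file"

# Keep the current version as a backup, then rewrite the original from it.
cp "$file" "$file.bak" &&
    sed 's/foo/bar/g' "$file.bak" > "$file"

edited=$(cat "$file")
backup=$(cat "$file.bak")
rm -f "$file" "$file.bak"

printf '%s\n' "$edited"
```

Because the original file is overwritten rather than replaced, its permissions and hard links are left alone.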

Addresses: Sed commands take zero, one, or two addresses (two addresses form a range). Each address refers to a line, either as a line number, a /RE/ that matches the line, or a $ (which matches the last line). Gnu extensions: “num~step” (matches num-th line and each step-th line thereafter), “num,+N” (the num-th line and the N lines following.)

Some commands can take either 0 or 1 address. Any sed command with zero addresses applies to all lines.

A command with one address will be applied to all lines that match that address. If the address is a line number, that will be at most a single line (none if the file has no such line), but if it is a RE then the command applies to all lines that match.
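A short sketch of the three kinds of single address, using arbitrary sample lines:

```shell
data=$(printf '%s\n' alpha beta gamma delta)

second=$(printf '%s\n' "$data" | sed -n '2p')    # line number
with_l=$(printf '%s\n' "$data" | sed -n '/l/p')  # RE: every line containing "l"
last=$(printf '%s\n' "$data" | sed -n '$p')      # $ is the last line
none=$(printf '%s\n' "$data" | sed -n '9p')      # no such line: no output

printf '%s\n' "$second" "$with_l" "$last"
```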

A command with two addresses separated with a comma has an address range. In a range, the line which matched the first address will always be accepted, even if the second selects an earlier line (such as “3,2”). If the second address is a RE, it will not be tested against the line that matched the first address. Note a range may match multiple blocks of lines; starting with the line after the second address matches, sed goes back to looking for lines that match the first address. (Naturally this only applies if the addresses are REs.) Demo:

$ sed -n '/START/,/END/p' file1.dat
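A sketch of both points about ranges, with made-up input in place of file1.dat:

```shell
data=$(printf '%s\n' a START b END c START d END e)

# An RE range can select several blocks of lines.
blocks=$(printf '%s\n' "$data" | sed -n '/START/,/END/p')

# When the second address is an earlier line number, only the first line matches.
odd=$(printf '%s\n' a b c d | sed -n '3,2p')

printf '%s\n' "$blocks" '--' "$odd"
```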

The address(es) may be followed with “!” to select all lines not matched.

The REs may be /RE/ (any embedded slashes must be escaped with a backslash). If the RE has many slashes, you can make it more readable with \cREc, where c can be any character. (For REs used as addresses; for REs used with the “s” function, no backslash is needed or allowed.) /RE/I = case-insensitive (Gnu extension).
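For example, with path names the alternate delimiter avoids a forest of backslashes. A sketch, using invented path names:

```shell
paths=$(printf '%s\n' /usr/bin/env /bin/sh /usr/bin/awk)

# Address with escaped slashes, and the same address written as \cREc.
a=$(printf '%s\n' "$paths" | sed -n '/\/usr\/bin\//p')
b=$(printf '%s\n' "$paths" | sed -n '\|/usr/bin/|p')

# With the s function, the alternate delimiter needs no leading backslash.
c=$(printf '%s\n' "$paths" | sed 's|/usr/bin/||')

printf '%s\n' "$b" '--' "$c"
```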

Sed supports basic REs, or BREs (Gnu allows extended REs, or EREs, with the right option).

The POSIX committee in 1/2012 approved the addition of the option “-E” to cause sed to use EREs. That won’t be in the standard until the next version, but feel free to use the Gnu “-r” option for that in the meantime.

Sed commands may be grouped, so you don’t have to enter the same address over and over:

addresses {

cmd1

cmd2

...

}

The grouped commands may also have addresses, and you can nest the grouping. Grouping allows for Boolean logic with the addresses. For example, to print all lines containing foo AND that don’t contain bar:

sed -n '/foo/ { /bar/! p; }'
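The same filter, written as a multi-line script with a comment and run against a few sample lines (the input is invented for the demonstration):

```shell
out=$(printf '%s\n' 'foo' 'foo bar' 'bar' 'food' | sed -n '
/foo/ {
    # inside the group: print only lines that do not also contain bar
    /bar/! p
}')

printf '%s\n' "$out"
```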

Sed commands can be preceded with spaces, and spaces are allowed between the address (range) and the function letter. Multiple commands can be separated with semicolons. Commands can be followed by comments, which start with “#” to the end of the line (or end of the script).

Some useful sed functions (must know only the first three for our course):

s/RE/replacement/flags

This is probably the most useful sed command. The replacement text can use \1 through \9 (back-references) and/or an un-escaped “&” to refer to the whole match. Any character except newline or backslash can be used instead of the slash. Whichever is used, you can include it literally by escaping it with backslash.

flags can include: g for global (all changes), n for the n-th occurrence only, p to print the modified line, w filename to append to filename, i for case-insensitive matching (in SUS issue 8). Using ng changes all occurrences from the nth one to the end. (This is a Gnu extension. For example, to change all but the first occurrence of foo to bar, use “s/foo/bar/2g”.)

Gnu sed also has useful extensions for the replacement text: \Ltext\E to force lowercase, \U for uppercase. (text will usually use a back-reference or &.) It also allows common C escapes such as \t. (Not part of POSIX; on many systems (Solaris, HP-UX), those escapes are not allowed.)

d delete the pattern space and read in the next line.

p print the pattern space. (Useful with -n option to suppress auto-printing.)

l print showing invisible chars in a visible form.

# A no-op command; used to insert comments

a \ <newline> text to append after current line <newline>. (Embedded newlines must be escaped with a backslash).

i \ <newline> text to insert before current line <newline>.

N Append the next line of input to the current pattern space (separated with a newline). If there is no next line, terminate the script.

c \ <newline> text to replace current line <newline>.

q quit sed.

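n If auto-printing is not suppressed, print the pattern space; then replace it with the next line of input and continue with the next command in the script (without starting a new cycle). If there is no next line, quit.

w filename Write the pattern space to filename.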

h copy pattern space to hold space.

H append pattern space to hold space.

g paste (replace the pattern space with the hold space).

G append a newline and then the hold space, to the pattern space.

x swap the pattern and hold spaces.
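A few of the functions listed above in action. This is only a sketch with arbitrary input; the last-but-one example uses the hold space to print the input lines in reverse order:

```shell
# s with back-references: swap two words.
swapped=$(echo 'hello world' | sed 's/\([a-z]*\) \([a-z]*\)/\2 \1/')

# s with & and the g flag: wrap every word.
wrapped=$(echo 'hello world' | sed 's/[a-z][a-z]*/<&>/g')

# s with a numeric flag: change only the second occurrence.
second=$(echo 'foo foo foo' | sed 's/foo/bar/2')

# G, h and p: append the hold space to each line after the first, save the
# result, and print what has accumulated when the last line is reached.
reversed=$(printf '%s\n' 1 2 3 | sed -n '1!G;h;$p')

# q: quit after the second line.
first_two=$(printf '%s\n' 1 2 3 | sed '2q')

printf '%s\n' "$swapped" "$wrapped" "$second" "$reversed" "$first_two"
```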

There are other sed functions that allow for some powerful filters. For example:

sed ':a;N;$!ba;some_command '

The part up to the last semicolon is a loop that reads all the lines, appending them to the buffer. Then some_command (e.g., some s/// command) can operate on the whole file of data, which includes embedded newlines. This is a powerful technique, if you have sufficient memory. Check the man page for all the commands available.
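As a sketch of this technique, the following joins all input lines with commas. The loop is split across several -e options here on the assumption that some sed versions do not accept a semicolon directly after a label:

```shell
# Read every line into the pattern space, then replace the embedded newlines.
joined=$(printf '%s\n' a b c | sed -e ':a' -e 'N' -e '$!ba' -e 's/\n/,/g')

echo "$joined"
```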

Examples:

To display file with all characters visible (see also od, less -r, and cat -A):

sed -n 'l' file
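For instance, a tab and a trailing space become visible, with the end of the line marked by a dollar sign. A sketch with made-up input:

```shell
# The input line contains a tab and ends with a space.
visible=$(printf 'a\tb \n' | sed -n 'l')

printf '%s\n' "$visible"
```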

To remove leading and trailing spaces, and squeeze runs of spaces to a single space (“⌂”=space):

sed -e 's/^⌂*//;s/⌂*$//;s/⌂⌂*/⌂/g'
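The same script with real spaces in place of the ⌂ marker, applied to an invented sample line:

```shell
# Trim both ends, then squeeze each run of spaces to one space.
clean=$(echo '   too    many   spaces   ' | sed -e 's/^ *//;s/ *$//;s/  */ /g')

echo "[$clean]"
```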

Print the body of an email message (from a file):

sed '1,/^$/d' file

To remove the first and last lines:

sed '1d;$d' file
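Both commands can be tried on a tiny made-up message (the headers and body text are assumptions for the demonstration):

```shell
msg=$(printf '%s\n' 'From: a@example.org' 'Subject: hi' '' 'line one' 'line two' 'bye')

# Delete everything from line 1 through the first blank line: the headers.
body=$(printf '%s\n' "$msg" | sed '1,/^$/d')

# Then drop the first and last lines of what remains.
middle=$(printf '%s\n' "$body" | sed '1d;$d')

printf '%s\n' "$body" '--' "$middle"
```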

To insert a zero before the first string of exactly two (and no more) numerals, which might occur anywhere in a line (a real-world problem; see zero-pad.sed):

sed 's/.*/.&./;

s/\([^0-9]\)\([0-9]\{2\}[^0-9]\)/\10\2/;

s/^.\(.*\).$/\1/'
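To see why the line is first wrapped in dots, it may help to try the script on a few inputs, including one where the two digits are the whole line. This sketch wraps the script in a function whose name, zero_pad, is our own:

```shell
# zero_pad: filter stdin through the three-step script from the text.
# (Hypothetical wrapper name; the sed commands are unchanged.)
zero_pad() {
    sed 's/.*/.&./
s/\([^0-9]\)\([0-9]\{2\}[^0-9]\)/\10\2/
s/^.\(.*\).$/\1/'
}

for line in 'a 12 b' '12' '123 45' '1234'; do
    printf '%s -> %s\n' "$line" "$(echo "$line" | zero_pad)"
done
```

The temporary dots guarantee that there is always a non-digit on each side of the numerals, even at the start or end of the line.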

To move comments from the end of a line, to a new line above the command, in a shell script:

sed 's/\([^#]*\) *\(#.*\)/\2\

\1/'

To extract the CVS module name from an email header; a sample header is

Subject: YborStudent CVS Repository commit by

wpollock: "cvsproj/src ,Foo.java,1.2"

“cvsproj” is the module name in this example:

MODULE=$(sed -n \

'/^Subject:/{N; s,[^"]*"\([^/ ]*\)[/ ].*$,\1,p}')

What, if anything, is the difference between these two sed commands?

sed -n '/foo/p; /bar/p'

sed '/foo/b; /bar/b; d'

(Note, ‘b’ means to goto (branch to) the end of the script.)

Other filter commands

cut: -c cols cuts the columns specified; ex: 1,2,3 or 1-3, or 20- (to EOL). You can also use delimited fields, using -dchar to say char is the delimiter, and then -f fields instead of -c cols. (If the fields are separated by runs of char (usually space), you can use tr -s ' ' to replace each run with a single space.) (Less useful, but worth mentioning, is the colrm utility.)
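A short sketch of the cut options just described, using made-up input lines:

```shell
# Character columns: a range, and "from column 6 to end of line".
cols=$(echo 'abcdefgh' | cut -c 1-3)
rest=$(echo 'abcdefgh' | cut -c 6-)

# Delimited fields: fields 1 and 7 of a colon-separated line.
user=$(echo 'root:x:0:0:root:/root:/bin/sh' | cut -d: -f1,7)

# Fields separated by runs of spaces: squeeze the runs first with tr -s.
third=$(echo 'a   b    c' | tr -s ' ' | cut -d' ' -f3)

printf '%s\n' "$cols" "$rest" "$user" "$third"
```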

Task: display the last X characters from every line:

rev file | cut -c 1-X | rev

paste: concatenates files of columns, by default using TAB as delimiter (change with -dchar). You can also merge groups of lines of a single file to a single line with the -s option, useful for reformatting some files. Also, if some command outputs multiline records, you can use paste to make each record a single line, which can then be piped into other filter commands such as grep or awk. For example, suppose cmd produced records of three lines each. You could do the following to process each record with some awk script, and then split the record back into three lines:

cmd | paste - - - | awk '...' | tr '\t' '\n'
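A sketch of that pipeline, with printf standing in for cmd and invented three-line records:

```shell
# Six input lines become two tab-separated records of three fields each.
joined=$(printf '%s\n' n1 a1 p1 n2 a2 p2 | paste - - -)

# Each record is now one line, so awk can pick out a field.
second=$(printf '%s\n' "$joined" | awk -F '\t' '{ print $2 }')

# Splitting on the tabs restores the original lines.
restored=$(printf '%s\n' "$joined" | tr '\t' '\n')

# The -s option joins all lines of one input into a single line.
csv=$(printf '%s\n' a b c | paste -s -d , -)

printf '%s\n' "$joined" "$second" "$csv"
```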

One trick is to get multi-column output from commands using paste. This gives three-column output: